This article is sponsored by AWS. Huge thanks for helping me produce more .NET on AWS content!

You have probably lived the “just add one more subscriber” story—an orders API starts simple, then marketing wants emails, analytics wants events, fulfillment wants packing slips, and suddenly everything is tightly coupled. Every new requirement means touching the API and risking regressions. The fan-out pattern solves this: publish once, let many consumers react on their own timelines.

When an order is placed, we want to send confirmations, notify fulfillment, and record analytics without cross-wiring services. In this walkthrough we will keep things practical: use Terraform just enough to create an SNS topic and two SQS queues (each with its own DLQ), and then wire a .NET 10 orders API to publish while two worker services consume. The goal is a repeatable pattern you can drop into real workloads, not a sprawling IaC tutorial.

If you want to skip straight to code, the full repo is here: https://github.com/iammukeshm/fanout-pattern-sns-sqs.

Before you start

You can clone this walkthrough’s source (Terraform + .NET producer/consumers) from https://github.com/iammukeshm/fanout-pattern-sns-sqs and adapt the outputs to your AWS account.

What we’re building

An SNS topic that receives order events, two SQS queues subscribed to that topic (notifications and fulfillment) with their own DLQs, a lightweight Terraform layout to provision and output ARNs/URLs, and three .NET 10 apps: a Web API that publishes orders, plus two worker services that poll their queues independently. We will test end-to-end with curl/Postman and the AWS console, then tear everything down with terraform destroy to keep costs in check.

Prerequisites

Terraform CLI, an AWS account with credentials (aws configure or env vars), a .NET 10 SDK, curl or Postman, and an S3 bucket name for Terraform state. If you are new to S3 backends, create a bucket first (for example my-tf-state-bucket) so Terraform can store state centrally.

Fan-out pattern in a nutshell

SNS fans out a single publish to multiple subscriptions. Each SQS queue becomes its own channel with independent retry, backoff, and scaling. In this pattern the orders API never talks to queues directly—it just publishes one JSON message to SNS. The notification consumer handles email/SMS, while the fulfillment consumer handles packing and shipping steps. Because each queue has its own DLQ and visibility timeout, a failure in one consumer does not block the other.

Picture the flow: the API emits an OrderCreated event; SNS receives it and immediately pushes it to both queues. The notifications worker pulls from orders-notifications with long polling, sends an email, then deletes the message. The fulfillment worker pulls from orders-fulfillment, calls warehouse systems, then deletes its copy. If fulfillment fails, only that queue retries and eventually sends the message to its DLQ; notifications still succeeded. That is the core win of fan-out: isolation of work streams with one publish.
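To make that isolation concrete, here is a toy in-memory model of the flow. It does not touch AWS at all: `ToyTopic`, `ToyQueue`, and the handler delegate are hypothetical names, and the redelivery logic is a deliberate simplification of what SQS does with maxReceiveCount.

```csharp
using System;
using System.Collections.Generic;

// Toy stand-in for an SQS queue with a dead-letter queue (not the AWS SDK).
public sealed class ToyQueue
{
    private readonly Queue<(string Body, int ReceiveCount)> _messages = new();
    private readonly int _maxReceiveCount;

    public string Name { get; }
    public List<string> Processed { get; } = new();
    public List<string> DeadLetters { get; } = new();

    public ToyQueue(string name, int maxReceiveCount = 5)
    {
        Name = name;
        _maxReceiveCount = maxReceiveCount;
    }

    public void Enqueue(string body) => _messages.Enqueue((body, 0));

    // Drains the queue. A handler that returns false or throws leaves the message
    // "undeleted", so it comes back until it has been received maxReceiveCount times.
    public void Drain(Func<string, bool> handler)
    {
        while (_messages.Count > 0)
        {
            var (body, count) = _messages.Dequeue();
            count++;

            bool ok;
            try { ok = handler(body); }
            catch { ok = false; }

            if (ok) Processed.Add(body);
            else if (count >= _maxReceiveCount) DeadLetters.Add(body);
            else _messages.Enqueue((body, count));
        }
    }
}

// Toy stand-in for an SNS topic: every subscriber gets its own copy.
public sealed class ToyTopic
{
    private readonly List<ToyQueue> _subscribers = new();

    public void Subscribe(ToyQueue queue) => _subscribers.Add(queue);

    public void Publish(string body)
    {
        foreach (var queue in _subscribers) queue.Enqueue(body);
    }
}
```

Publishing once and draining the notifications queue with a handler that succeeds, and the fulfillment queue with one that always throws, leaves the message processed on one side and dead-lettered on the other. Neither queue affects the other.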

Message shape matters. Keep the event schema small and clear: orderId, amount, customerEmail, items, maybe a createdAt timestamp. Treat it as a contract—if you add fields, do so in a backward-compatible way. Consumers should be resilient to missing optional fields so you can roll out changes without breaking older workers.
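As a rough illustration of a tolerant consumer, the sketch below deserializes an event with System.Text.Json while treating later additions as optional. `OrderCreatedEvent`, `OrderEventParser`, and the `Currency` field are hypothetical and are not part of the repo's contract.

```csharp
using System;
using System.Text.Json;

// Hypothetical evolved contract: CreatedAt and Currency were added later,
// so older producers may not send them.
public record OrderCreatedEvent(
    string OrderId,
    decimal Amount,
    string? CustomerEmail,
    string[]? Items,
    DateTimeOffset? CreatedAt = null,
    string? Currency = null);

public static class OrderEventParser
{
    // Web defaults: camelCase names and case-insensitive matching. Unknown JSON
    // properties are skipped by System.Text.Json unless configured otherwise.
    private static readonly JsonSerializerOptions Options = new(JsonSerializerDefaults.Web);

    public static OrderCreatedEvent? Parse(string json) =>
        JsonSerializer.Deserialize<OrderCreatedEvent>(json, Options);
}
```

With this shape, a payload that omits createdAt or carries a field the worker has never seen still parses. The handler then decides what to do when an optional value is null.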

IAM is straightforward here: the API needs permission to publish to the topic, and the workers need permission to receive/delete from their queues. If you deploy to App Runner, Lambda, or ECS later, attach these permissions to the task/role. For local development, your default AWS profile usually provides access; in production, prefer least-privilege policies scoped to the specific topic and queues.

Light Terraform layout

We will keep the infrastructure slim: one module for the topic, one for queues, and a dev stack that wires them together. The layout mirrors the modular approach from earlier posts but sticks to the essentials.

    fanout-terraform/
      modules/
        sns-topic/
          main.tf
          variables.tf
          outputs.tf
        sqs-queue/
          main.tf
          variables.tf
          outputs.tf
      envs/
        dev/
          backend.tf
          main.tf
          outputs.tf

SNS module (minimal)

modules/sns-topic/variables.tf

    variable "name" {
      type        = string
      description = "Topic name"
    }

    variable "tags" {
      type        = map(string)
      description = "Tags to apply"
      default     = {}
    }

modules/sns-topic/main.tf

    resource "aws_sns_topic" "this" {
      name = var.name
      tags = var.tags
    }

This module is intentionally tiny: it creates one topic and tags it. No policies are set here; SNS defaults are enough for this demo. The outputs expose the ARN and name so the root stack (and your .NET API) never hardcode values.

modules/sns-topic/outputs.tf

    output "arn" {
      value = aws_sns_topic.this.arn
    }

    output "name" {
      value = aws_sns_topic.this.name
    }

SQS module (queue + DLQ)

modules/sqs-queue/variables.tf

    variable "name" {
      type        = string
      description = "Queue name"
    }

    variable "visibility_timeout_seconds" {
      type        = number
      default     = 30
      description = "Visibility timeout for the main queue"
    }

    variable "tags" {
      type    = map(string)
      default = {}
    }

    variable "allowed_sns_arns" {
      type        = list(string)
      default     = []
      description = "SNS topic ARNs allowed to send to this queue"
    }

modules/sqs-queue/main.tf

    resource "aws_sqs_queue" "dlq" {
      name = "${var.name}-dlq"
      tags = var.tags
    }

    resource "aws_sqs_queue" "this" {
      name                       = var.name
      visibility_timeout_seconds = var.visibility_timeout_seconds
      redrive_policy = jsonencode({
        deadLetterTargetArn = aws_sqs_queue.dlq.arn,
        maxReceiveCount     = 5
      })
      message_retention_seconds = 1209600
      receive_wait_time_seconds = 10
      tags                      = var.tags
    }

    data "aws_iam_policy_document" "sns_to_sqs" {
      count = length(var.allowed_sns_arns) > 0 ? 1 : 0

      statement {
        actions   = ["sqs:SendMessage"]
        resources = [aws_sqs_queue.this.arn]
        principals {
          type        = "Service"
          identifiers = ["sns.amazonaws.com"]
        }
        condition {
          test     = "ArnEquals"
          variable = "aws:SourceArn"
          values   = var.allowed_sns_arns
        }
      }
    }

    resource "aws_sqs_queue_policy" "allow_sns" {
      count = length(var.allowed_sns_arns) > 0 ? 1 : 0

      queue_url = aws_sqs_queue.this.id
      policy    = data.aws_iam_policy_document.sns_to_sqs[0].json
    }

Here the DLQ is created first, then the main queue references it in redrive_policy so failed messages go to the DLQ after 5 receives. receive_wait_time_seconds = 10 enables long polling by default. Adjust visibility_timeout_seconds to be longer than your longest handler runtime. The policy block is crucial: it allows only the listed SNS topics to send to this queue; without it, SNS deliveries to the queue fail. The subscriptions in the root stack also set raw_message_delivery = true so SQS receives just the message body (no SNS envelope), which keeps deserialization simple.
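If a subscription is ever created without raw message delivery, the SQS body arrives as an SNS notification envelope: a JSON object with "Type": "Notification" and the original payload as a string in "Message". The helper below is a minimal sketch of a consumer-side guard for that case; `SnsEnvelope.Unwrap` is a hypothetical name, and it assumes your own payload does not itself carry a top-level "Type": "Notification" field.

```csharp
using System.Text.Json;

public static class SnsEnvelope
{
    // Returns the inner payload when the body looks like an SNS notification
    // envelope; otherwise returns the body unchanged (raw delivery).
    public static string Unwrap(string body)
    {
        using var doc = JsonDocument.Parse(body);
        var root = doc.RootElement;

        if (root.ValueKind == JsonValueKind.Object &&
            root.TryGetProperty("Type", out var type) &&
            type.ValueKind == JsonValueKind.String &&
            type.GetString() == "Notification" &&
            root.TryGetProperty("Message", out var message) &&
            message.ValueKind == JsonValueKind.String)
        {
            return message.GetString()!;
        }

        return body;
    }
}
```

A worker could call this on the message body before deserializing, so the same handler works whichever delivery mode the subscription uses.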

modules/sqs-queue/outputs.tf

    output "url" {
      value = aws_sqs_queue.this.id
    }

    output "arn" {
      value = aws_sqs_queue.this.arn
    }

    output "dlq_url" {
      value = aws_sqs_queue.dlq.id
    }

    output "dlq_arn" {
      value = aws_sqs_queue.dlq.arn
    }

These outputs surface the URLs/ARNs you pass into subscriptions and app settings, so there are no hardcoded queue names in code or CI/CD. Use url for consumers (SQS client needs the URL), arn for SNS subscriptions, and dlq_* for monitoring or alarms.

Wiring the fan-out (dev stack)

envs/dev/backend.tf

    terraform {
      backend "s3" {
        bucket = "my-tf-state-bucket"
        key    = "fanout/dev/terraform.tfstate"
        region = "us-east-1"
      }
    }

envs/dev/main.tf

    terraform {
      required_version = ">= 1.7.0"
      required_providers {
        aws = {
          source  = "hashicorp/aws"
          version = "~> 5.40"
        }
      }
    }

    provider "aws" {
      region = "us-east-1"
    }

    locals {
      tags = {
        Project = "OrdersFanout"
        Env     = "dev"
      }
    }

    module "orders_topic" {
      source = "../../modules/sns-topic"
      name   = "orders-events"
      tags   = local.tags
    }

    module "notifications_queue" {
      source           = "../../modules/sqs-queue"
      name             = "orders-notifications"
      tags             = local.tags
      allowed_sns_arns = [module.orders_topic.arn]
    }

    module "fulfillment_queue" {
      source           = "../../modules/sqs-queue"
      name             = "orders-fulfillment"
      tags             = local.tags
      allowed_sns_arns = [module.orders_topic.arn]
    }

    resource "aws_sns_topic_subscription" "notifications" {
      topic_arn            = module.orders_topic.arn
      protocol             = "sqs"
      endpoint             = module.notifications_queue.arn
      raw_message_delivery = true
    }

    resource "aws_sns_topic_subscription" "fulfillment" {
      topic_arn            = module.orders_topic.arn
      protocol             = "sqs"
      endpoint             = module.fulfillment_queue.arn
      raw_message_delivery = true
    }

This root stack pins provider versions, sets shared tags, creates one topic and two queues, and wires two subscriptions, one to each queue. That wiring is the fan-out: a single publish goes to both endpoints. Outputs below capture the topic ARN and queue URLs for your apps.

If you prefer to keep subscriptions closer to the queues, you could move them into a dedicated module, but keeping them here makes the fan-out wiring explicit. The locals block standardizes tags across resources; extend it with Owner or CostCenter if your org requires them.

envs/dev/outputs.tf

    output "orders_topic_arn" {
      value = module.orders_topic.arn
    }

    output "notifications_queue_url" {
      value = module.notifications_queue.url
    }

    output "fulfillment_queue_url" {
      value = module.fulfillment_queue.url
    }

Before running, replace the S3 bucket name in envs/dev/backend.tf with yours, and set your AWS region if needed. Run terraform fmt, terraform init, terraform validate, and terraform plan, then terraform apply. After apply, grab the outputs: you will pass the topic ARN to the producer API and the queue URLs to the consumers.

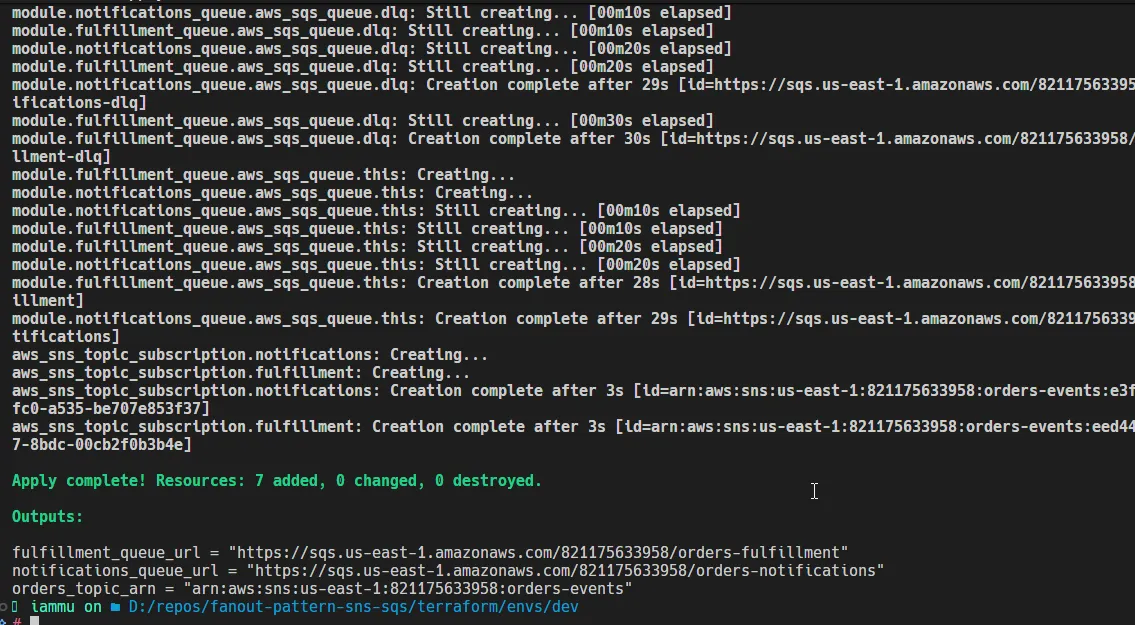

What each piece does: the SNS module creates a single topic and exports its ARN. The SQS module creates a main queue plus a DLQ and sets a redrive policy (maxReceiveCount = 5) so bad messages go to the DLQ instead of looping forever. It also sets receive_wait_time_seconds = 10 to enable long polling by default. In the root stack, we instantiate one topic and two queues, then create two subscriptions that point the topic to each queue—this is the fan-out wiring. Outputs surface the topic ARN and queue URLs so your apps never hardcode them. The backend in backend.tf keeps state in S3; change the bucket/key/region to your own before running init.

If you want to route only certain events to a queue, add a filter policy to the subscription:

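    resource "aws_sns_topic_subscription" "notifications" {
      topic_arn            = module.orders_topic.arn
      protocol             = "sqs"
      endpoint             = module.notifications_queue.arn
      raw_message_delivery = true
      filter_policy = jsonencode({
        eventType = ["OrderCreated"]
      })
    }

Filters keep queues focused. The policy matches the eventType message attribute that the producer sets on publish, and raw_message_delivery = true is kept so the worker still receives the bare JSON body. You can later add OrderCancelled and subscribe only the fulfillment queue, leaving notifications untouched.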
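To reason about which messages a policy like this lets through, here is a simplified local model of exact-string matching. It is not the SNS implementation and ignores operators such as prefix or numeric matching; `FilterPolicyModel` is a hypothetical name. It only captures the basic rule that every key in the policy must be present on the message, and the attribute value must equal one of the listed values.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class FilterPolicyModel
{
    // True when every policy key exists in the message attributes and the
    // attribute value equals one of the allowed values for that key.
    public static bool Matches(
        IReadOnlyDictionary<string, string[]> policy,
        IReadOnlyDictionary<string, string> attributes) =>
        policy.All(rule =>
            attributes.TryGetValue(rule.Key, out var value) &&
            rule.Value.Contains(value, StringComparer.Ordinal));
}
```

Under this model, a message with eventType = OrderCreated reaches the notifications queue, while one with eventType = OrderCancelled, or with no eventType attribute at all, does not.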

Mapping outputs to app settings is where the infra meets code. Take orders_topic_arn from terraform output and paste it into Messaging:OrdersTopicArn for the API (or inject it via environment variables in your deployment pipeline). Do the same for notifications_queue_url and fulfillment_queue_url in the worker configs. If you use CI/CD, you can export these outputs as pipeline variables so you never hardcode ARNs or URLs in code or YAML.

The screenshot above shows the result of terraform apply; capture these outputs for your .NET apps. You will need orders_topic_arn for the producer API and both queue URLs for the consumers. In the next sections, we will build minimal .NET 10 apps that wire to this infrastructure. In each app's appsettings.json, populate the relevant values from these outputs, or better yet for production, pass them as environment variables in your CI/CD pipelines to avoid hardcoding. For this demo, you can keep them in appsettings.json.

.NET 10 Producer Web API (Orders API)

Create a minimal ASP.NET Core Web API (dotnet new webapi) and add the AWS SDK for SNS:

    dotnet add package AWSSDK.SimpleNotificationService --version 3.7.400

appsettings.json (or use environment variables in production):

    {
      "AWS": {
        "Region": "us-east-1"
      },
      "Messaging": {
        "OrdersTopicArn": "arn:aws:sns:us-east-1:123456789012:orders-events"
      }
    }

Fill OrdersTopicArn with terraform output orders_topic_arn. In pipelines, export that output and inject it as Messaging__OrdersTopicArn so you never hardcode ARNs in code or config files.

Program.cs (minimal API)

    using Amazon;
    using Amazon.SimpleNotificationService;
    using Amazon.SimpleNotificationService.Model;
    using System.Text.Json;

    var builder = WebApplication.CreateBuilder(args);

    // One SNS client for the whole app, bound to the configured region.
    var awsRegion = builder.Configuration["AWS:Region"] ?? "us-east-1";
    builder.Services.AddSingleton<IAmazonSimpleNotificationService>(_ =>
        new AmazonSimpleNotificationServiceClient(RegionEndpoint.GetBySystemName(awsRegion)));

    var app = builder.Build();

    app.MapPost("/api/orders", async (OrderCreated request, IAmazonSimpleNotificationService sns, IConfiguration config) =>
    {
        var topicArn = config["Messaging:OrdersTopicArn"]
            ?? throw new InvalidOperationException("Topic ARN missing");

        // Publish once; SNS fans the message out to every subscribed queue.
        var json = JsonSerializer.Serialize(request);
        var response = await sns.PublishAsync(new PublishRequest
        {
            TopicArn = topicArn,
            Message = json,
            MessageAttributes = new Dictionary<string, MessageAttributeValue>
            {
                { "eventType", new MessageAttributeValue { DataType = "String", StringValue = "OrderCreated" } }
            }
        });

        return Results.Ok(new { MessageId = response.MessageId });
    });

    app.Run();

    public record OrderCreated(string OrderId, decimal Amount, string CustomerEmail, string[] Items);

This minimal API publishes the order payload to SNS with a simple attribute (eventType) you can later use for filters. It pulls the topic ARN from configuration, so wiring Terraform outputs into app settings (or env vars) is all you need to connect infrastructure and code.

Running locally: set AWS__Region and Messaging__OrdersTopicArn as environment variables if you prefer not to keep values in appsettings.json. The AWS SDK picks up credentials from your shared profile; in CI/CD you can rely on the runner role. Keep the endpoint thin: validation, publishing, and maybe idempotency (dedup by orderId if you do upstream retries) are enough for this demo.
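As one possible shape for the validation step, the sketch below checks an incoming order before anything is published. `OrderRequest` and `OrderValidator` are hypothetical names and the rules are only examples; the repo may validate differently or not at all.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical request type with nullable fields so missing values can be reported.
public record OrderRequest(string? OrderId, decimal Amount, string? CustomerEmail, string[]? Items);

public static class OrderValidator
{
    // Returns field name -> messages; an empty dictionary means the order is valid.
    public static Dictionary<string, string[]> Validate(OrderRequest order)
    {
        var errors = new Dictionary<string, string[]>();

        if (string.IsNullOrWhiteSpace(order.OrderId))
            errors["orderId"] = new[] { "orderId is required." };

        if (order.Amount <= 0)
            errors["amount"] = new[] { "amount must be greater than zero." };

        if (string.IsNullOrWhiteSpace(order.CustomerEmail) || !order.CustomerEmail.Contains('@'))
            errors["customerEmail"] = new[] { "customerEmail must look like an email address." };

        if (order.Items is null || order.Items.Length == 0)
            errors["items"] = new[] { "at least one item is required." };

        return errors;
    }
}
```

In a minimal API handler, a non-empty result could be returned with Results.ValidationProblem(errors) before the publish call, so invalid orders never reach SNS.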

.NET 10 consumers (two workers)

Create two worker services (notifications and fulfillment) with the worker template:

    dotnet new worker -n Orders.Notifications.Worker
    dotnet new worker -n Orders.Fulfillment.Worker
    dotnet add Orders.Notifications.Worker package AWSSDK.SQS --version 3.7.400
    dotnet add Orders.Fulfillment.Worker package AWSSDK.SQS --version 3.7.400

Each worker gets its own queue URL from configuration. Example for notifications:

appsettings.json

    {
      "AWS": {
        "Region": "us-east-1"
      },
      "Queues": {
        "OrdersNotifications": "https://sqs.us-east-1.amazonaws.com/123456789012/orders-notifications"
      }
    }

Populate OrdersNotifications with terraform output notifications_queue_url. In CI/CD, pass it as Queues__OrdersNotifications via environment variables to avoid editing files.

Program.cs (notifications)

    using Amazon;
    using Amazon.SQS;
    using Amazon.SQS.Model;
    using Microsoft.Extensions.Hosting;
    using Microsoft.Extensions.Logging;
    using System.Text.Json;

    var builder = Host.CreateApplicationBuilder(args);
    var region = builder.Configuration["AWS:Region"] ?? "us-east-1";
    builder.Services.AddSingleton<IAmazonSQS>(_ => new AmazonSQSClient(RegionEndpoint.GetBySystemName(region)));
    builder.Services.AddHostedService<NotificationsWorker>();
    builder.Services.AddLogging(cfg => cfg.AddConsole());
    var app = builder.Build();
    await app.RunAsync();

    public class NotificationsWorker : BackgroundService
    {
        private readonly IAmazonSQS _sqs;
        private readonly ILogger<NotificationsWorker> _logger;
        private readonly string _queueUrl;
        private readonly JsonSerializerOptions _jsonOptions = new(JsonSerializerDefaults.Web)
        {
            PropertyNameCaseInsensitive = true
        };

        public NotificationsWorker(IAmazonSQS sqs, ILogger<NotificationsWorker> logger, IConfiguration cfg)
        {
            _sqs = sqs;
            _logger = logger;
            _queueUrl = cfg["Queues:OrdersNotifications"]
                ?? throw new InvalidOperationException("Queue URL missing");
        }

        protected override async Task ExecuteAsync(CancellationToken stoppingToken)
        {
            while (!stoppingToken.IsCancellationRequested)
            {
                // Long poll: wait up to 10 seconds for up to 5 messages.
                var messages = await _sqs.ReceiveMessageAsync(new ReceiveMessageRequest
                {
                    QueueUrl = _queueUrl,
                    MaxNumberOfMessages = 5,
                    WaitTimeSeconds = 10
                }, stoppingToken);

                foreach (var message in messages.Messages)
                {
                    try
                    {
                        var order = JsonSerializer.Deserialize<OrderCreated>(message.Body, _jsonOptions);
                        _logger.LogInformation("Notify customer {Email} for order {OrderId}",
                            order?.CustomerEmail, order?.OrderId ?? "unknown");

                        // Delete only after the message was handled successfully.
                        await _sqs.DeleteMessageAsync(_queueUrl, message.ReceiptHandle, stoppingToken);
                    }
                    catch (Exception ex)
                    {
                        _logger.LogError(ex, "Failed to process message {Id}", message.MessageId);
                        // letting visibility timeout expire will push to DLQ after maxReceiveCount
                    }
                }
            }
        }
    }

    public record OrderCreated(string OrderId, decimal Amount, string CustomerEmail, string[] Items);

The fulfillment worker is identical except it reads Queues:OrdersFulfillment and logs or calls fulfillment logic. Keep them as separate services so each can scale and fail independently.

Tuning the consumers: WaitTimeSeconds = 10 enables long polling, cutting empty responses and cost. MaxNumberOfMessages = 5 is a good start; raise it (up to 10, the SQS maximum per receive call) if you batch work. Keep visibility_timeout_seconds in Terraform longer than the longest processing time, otherwise a message can be redelivered while its first attempt is still running. If you expect spikes, run multiple instances of a worker; they compete for messages on the same queue, so each message normally goes to only one instance at a time.
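The visibility timeout also determines how long a poison message lingers before it reaches the DLQ. As a back-of-the-envelope estimate (ignoring polling delay and handler runtime), each failed receive hides the message for one visibility timeout, and the message moves to the DLQ once it has been received maxReceiveCount times without being deleted. `RedriveMath` is a hypothetical helper for that arithmetic.

```csharp
using System;

public static class RedriveMath
{
    // Rough estimate of the time between the first receive of a message that
    // always fails and the moment it becomes eligible for the DLQ.
    public static TimeSpan ApproxTimeToDlq(int maxReceiveCount, TimeSpan visibilityTimeout)
    {
        if (maxReceiveCount < 1) throw new ArgumentOutOfRangeException(nameof(maxReceiveCount));
        return TimeSpan.FromTicks(visibilityTimeout.Ticks * maxReceiveCount);
    }
}
```

With the module defaults used here (maxReceiveCount = 5 and a 30-second visibility timeout), that works out to roughly 150 seconds. Raising the visibility timeout to cover slow handlers stretches this window proportionally.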

You can run the workers as containers (ECS/App Runner) or services on a VM; the code does not change. For containers, pass queue URLs and regions as environment variables; rely on the task role for SQS permissions rather than embedding keys. For App Runner, attach an IAM role with sqs:ReceiveMessage, sqs:DeleteMessage, and sqs:GetQueueAttributes on the specific queue ARNs.

Testing end to end

- Apply Terraform, then capture outputs:

    cd ./terraform/envs/dev
    terraform fmt
    terraform init
    terraform validate
    terraform plan
    terraform apply
    terraform output orders_topic_arn
    terraform output notifications_queue_url
    terraform output fulfillment_queue_url

The screenshot of the terraform apply output appears earlier in this article.

- Update the producer appsettings with the topic ARN; update each worker with its queue URL. Run all three apps locally (or containerize them if you prefer).

- Publish an order (Postman/body example):

    {
      "orderId": "ORD-1001",
      "amount": 149.99,
      "customerEmail": "customer@example.com",
      "items": ["book", "mug"]
    }

Send this JSON to POST https://localhost:5001/api/orders with Content-Type: application/json.

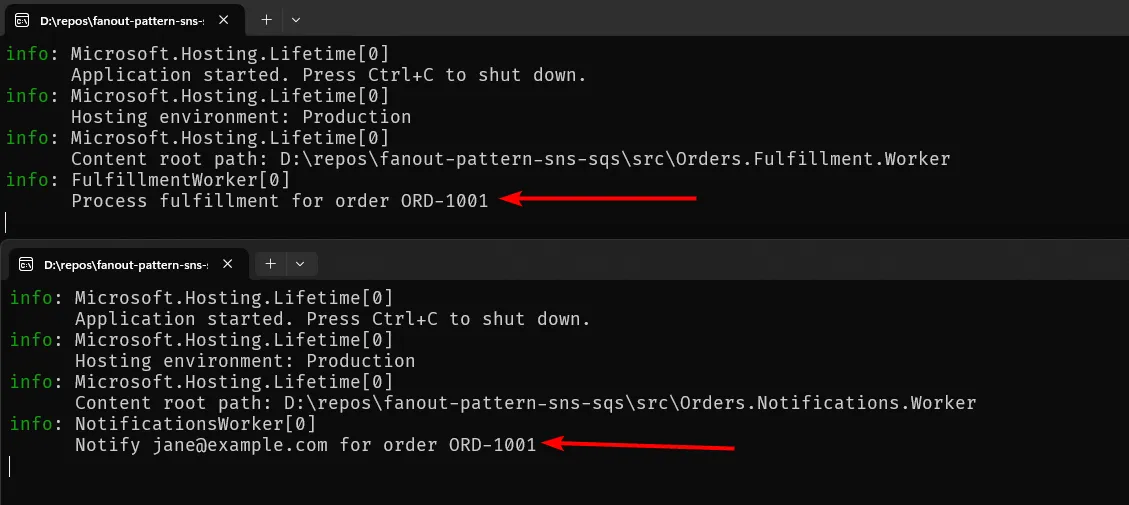

- Watch logs from both workers. Each should receive the same message and log its action. In the AWS console you can also poll the queues or check CloudWatch logs if you are shipping logs there.

- Try a failure scenario by throwing inside one worker to see the message move to its DLQ after retries, while the other worker continues normally.

As you can see above, both workers log processing the same order independently. You can verify in the AWS SQS console that each queue received the message from SNS. If you simulate a failure in one worker (e.g., throw an exception), that queue will retry and eventually send the message to its DLQ after exceeding maxReceiveCount, while the other worker continues processing normally. This demonstrates the isolation and resilience of the fan-out pattern.

Fan-out tips and gotchas

Keep visibility timeouts long enough to finish work; set DLQs per queue to isolate failures; use message attributes to add filter policies later if you add more subscribers; and avoid sharing a single queue between unrelated consumers. For higher throughput, increase MaxNumberOfMessages and use parallel processing inside the worker, but always delete messages only after successful handling. If you see duplicate deliveries (rare but possible), make handlers idempotent—check if you already sent an email or processed a shipment before acting. Encrypt queues and topics with AWS-managed keys by default; for stricter environments, use customer-managed KMS keys and tighten IAM to those ARNs.
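A minimal sketch of those two rules together (parallel handling, delete only after success, skip work already done) could look like the following. It is deliberately SDK-free: `IdempotentProcessor` is a hypothetical class, the `handle` and `delete` delegates stand in for your business logic and the SQS delete call, and the in-memory dictionary stands in for a durable store.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

public sealed class IdempotentProcessor
{
    // In-memory record of handled order IDs. This is an assumption for the demo:
    // it is lost on restart and not shared between worker instances.
    private readonly ConcurrentDictionary<string, byte> _done = new();

    // Handles a batch in parallel and returns the receipt handles that were deleted.
    // Failed messages are not deleted, so the queue redelivers them later.
    public async Task<IReadOnlyList<string>> ProcessBatchAsync(
        IEnumerable<(string ReceiptHandle, string OrderId)> batch,
        Func<string, Task> handle,
        Func<string, Task> delete)
    {
        async Task<string?> ProcessOneAsync((string ReceiptHandle, string OrderId) message)
        {
            try
            {
                if (!_done.ContainsKey(message.OrderId))
                {
                    await handle(message.OrderId);
                    _done.TryAdd(message.OrderId, 0); // mark only after success
                }

                await delete(message.ReceiptHandle); // duplicates are deleted without rework
                return message.ReceiptHandle;
            }
            catch
            {
                return null; // leave the message for redelivery
            }
        }

        var results = await Task.WhenAll(batch.Select(ProcessOneAsync));
        return results.Where(r => r is not null).Select(r => r!).ToList();
    }
}
```

Marking an order as done only after the handler succeeds favors an occasional double run over a lost message. Two copies that arrive in the same batch can still both run, so a production version would likely need an atomic "insert if absent" check in a shared store.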

Observability matters even in a demo. Add simple logging of message IDs and order IDs; later you can attach CloudWatch metrics and alarms for ApproximateNumberOfMessagesVisible on each queue and DLQ. If you adopt X-Ray or OpenTelemetry, propagate trace IDs in message attributes so consumers can link work back to the original request.
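One way to carry a trace across the topic, sketched with the built-in System.Diagnostics.Activity type, is shown below. `TracePropagation` is a hypothetical helper and "traceparent" is an assumed attribute name. The plain string dictionary stands in for SNS/SQS message attributes, so you would still need to map it to the SDK's attribute types and make sure the consumer requests message attributes when it receives.

```csharp
using System.Collections.Generic;
using System.Diagnostics;

public static class TracePropagation
{
    public const string AttributeName = "traceparent";

    // Producer side: copy the current activity's ID (W3C format by default on
    // modern .NET) into the outgoing attributes, if there is an active activity.
    public static void Inject(IDictionary<string, string> attributes)
    {
        var id = Activity.Current?.Id;
        if (id is not null) attributes[AttributeName] = id;
    }

    // Consumer side: start an activity that continues the producer's trace
    // when a parent ID was propagated, or a fresh trace otherwise.
    public static Activity StartConsumerActivity(
        string name, IReadOnlyDictionary<string, string> attributes)
    {
        var activity = new Activity(name);
        if (attributes.TryGetValue(AttributeName, out var parentId))
            activity.SetParentId(parentId);
        return activity.Start();
    }
}
```

The API would call Inject while building the publish request, and each worker would wrap its handler in the activity returned by StartConsumerActivity (stopping it when done), so logs from both sides share one trace ID.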

Security hygiene: scope IAM to the exact topic and queues. An example policy for the API role looks like:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": ["sns:Publish"],
          "Resource": "arn:aws:sns:us-east-1:123456789012:orders-events"
        }
      ]
    }

Consumers need sqs:ReceiveMessage, sqs:DeleteMessage, sqs:GetQueueAttributes, and optionally sqs:ChangeMessageVisibility on their own queue and DLQ. Keep credentials out of code: use roles in the cloud, and user secrets or env vars locally.

Cost is low for this pattern, but still track it: SQS charges per million requests and SNS per million publishes. Long polling reduces empty receives. DLQs prevent hot loops that waste requests. Destroying the stack when done keeps your AWS bill predictable.
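To put a number on the long-polling point, the arithmetic below estimates how many receive calls a single idle worker makes per day when every long poll returns empty and the worker re-polls immediately. `PollingMath` is a hypothetical helper; it computes request counts only and makes no assumption about pricing.

```csharp
using System;

public static class PollingMath
{
    private const long SecondsPerDay = 86_400;

    // Receive calls per day for one worker polling a completely idle queue.
    // SQS accepts wait times between 1 and 20 seconds for long polling.
    public static long IdleReceivesPerDay(int waitTimeSeconds)
    {
        if (waitTimeSeconds < 1 || waitTimeSeconds > 20)
            throw new ArgumentOutOfRangeException(nameof(waitTimeSeconds));
        return SecondsPerDay / waitTimeSeconds;
    }
}
```

With WaitTimeSeconds = 10, as used in this walkthrough, an idle worker issues about 8,640 receive calls per day; raising the wait to 20 seconds halves that. A tight short-polling loop would issue far more.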

When to use fan-out vs skip it

Fan-out shines when one event triggers multiple, independent reactions with different lifecycles:

- Orders: send confirmations, notify fulfillment, update analytics—each at its own pace.

- Audit and notifications: log an action while also pushing user alerts without slowing the API.

- Side effects isolation: keep fraud checks or recommendation updates from blocking the core flow.

Consider skipping or trimming fan-out when:

- You need strict ordering and only one consumer: use a single FIFO queue or Kinesis instead.

- You have low volume and low fan-out—direct calls or a single queue may be simpler.

- You require exactly-once semantics end-to-end—fan-out can still work, but demands idempotent handlers and careful retries; sometimes a transactional outbox with one queue fits better.

If the number of subscribers explodes, add filters to keep queues focused, and monitor DLQs so noisy consumers do not hide failures.

Cleanup

When you are done experimenting, run terraform destroy from the env folder to delete the topic, queues, and subscriptions so you do not pay for idle resources.

Wrap-up

You now have a straightforward fan-out pipeline: one orders API publishes to SNS, two SQS queues receive the event, and each .NET 10 worker processes independently. Terraform keeps the infrastructure repeatable without taking over the article; the real value is the pattern—decoupled consumers, independent retries, and room to grow with filters or more subscribers. Next steps could include adding message filters for different event types, layering in exponential backoff and metrics/alarms, or switching one consumer to a Lambda for bursty workloads. Whatever you build next, keep the publish-once, consume-many mindset, and remember to destroy lab resources to keep AWS costs in check.

Once you are comfortable with this, extend it: add a payments worker that listens for OrderCreated and triggers a charge, or introduce an OrderCancelled event and send only fulfillment cancellations to the warehouse queue. Fold in distributed tracing so you can follow a single order from API publish to each consumer. The pattern stays the same—one event, many independent reactions—but your confidence grows as you add new downstream services without touching the producer.

If you want to run the exact code used here, grab it from https://github.com/iammukeshm/fanout-pattern-sns-sqs (Terraform, API, and workers). Swap in your AWS outputs and you’re ready to test the pattern end-to-end.