Setting up Terraform for the first .NET service on AWS is usually straightforward: you write a main.tf, define a handful of resources, and ship. The trouble starts when the second and third services arrive. Suddenly you have three copies of the same VPC, IAM, and tagging logic sprinkled across folders. Reviews slow down, fixes get missed, and every new environment feels like déjà vu. The cure is modularization: small, focused Terraform modules that your team can drop into any workload without repeating themselves.

This guide is a gentle first step into Terraform modules for AWS, aimed at .NET teams. We will keep the patterns simple, avoid over-engineering, and stay close to the kinds of services you already deploy—specifically App Runner for containerized ASP.NET apps.

This article is sponsored by AWS. Huge thanks for helping me produce more .NET on AWS content!

If you followed my earlier Terraform posts—automating AWS infrastructure, deploying .NET Lambdas, and shipping .NET apps to App Runner—you probably have a few Terraform folders full of copy-pasted HCL. That is normal for a first pass, but it quickly leads to drift, review fatigue, and “where did this variable come from?” moments. Modules are the fix: small, reusable building blocks that keep every .NET workload on AWS consistent.

This article is intentionally simple. We are not chasing exotic patterns or overly generic modules. Instead, we will build a clean layout with two practical modules: a base network/tagging module and an App Runner service module. We will wire them together in a root configuration, keep the inputs and outputs obvious, and show you how to run plan/apply with confidence. By the end, you will have a reusable template you can adapt for the next .NET service without starting from scratch.

Before you start: catch up on the Terraform series

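If you want a refresher, or you are landing on modules for the first time, the earlier articles in this series show the path from basics to App Runner deployments: automating AWS infrastructure with Terraform, deploying .NET Lambdas with Terraform, and shipping .NET apps to App Runner with Terraform.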

What we’re going to build

We will set up a predictable module layout (modules/ plus envs/) that any teammate can navigate, create a base module for a simple VPC with public subnets and consistent tags, and build an App Runner module that accepts an image, IAM role, health checks, and environment variables. A root dev stack will compose both modules, pin provider versions, and output the service URL, with a lean checklist for validation and spot checks as the module set grows.

Prerequisites

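Have Terraform CLI 1.7+ installed and an AWS account with credentials configured via `aws configure` or environment variables. You also need a container image for your .NET app in Amazon ECR, similar to the one we used in the App Runner article. App Runner pulls images only from Amazon ECR or ECR Public, and the module below assumes a private ECR repository. Know the basics of Terraform state and remote backends. The dev stack stores state in an S3 bucket and locks it with a DynamoDB table. Terraform 1.10 and later can drop the table and use S3 native locking (`use_lockfile = true`) instead.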

Why use modules on AWS

Copy-paste works until you need to fix a bug across five services or explain a plan to a reviewer. Modules bring discipline: every service inherits the same tagging, logging, and IAM posture, so changes are safer. Inputs and outputs make intent obvious, onboarding is faster, and you evolve in small steps without breaking consumers.

If you are used to ASP.NET projects with layered architectures and DI, think of modules the same way: small, testable units with a clear contract.

A simple module layout

Keep it boring and predictable: one repo, folders for modules, and a folder per environment for root stacks. A short README in each module explains inputs, outputs, and examples without forcing readers to scan HCL.

```text
terraform-modules/
  modules/
    base-network/
      main.tf
      variables.tf
      outputs.tf
      README.md
    app-runner-service/
      main.tf
      variables.tf
      outputs.tf
      README.md
  envs/
    dev/
      main.tf
      variables.tf
      backend.tf
      outputs.tf
    prod/
      main.tf
      variables.tf
      backend.tf
      outputs.tf
```

Folder tips:
- Keep modules under `modules/` with their own variables, outputs, and README.
- Run `plan` and `apply` from `envs/{env}` so state stays scoped.
- Hold shared names and tags in root `locals`.
- Pin provider versions in root configs so upgrades are intentional.

How it works (quick mental model)

Think of the modules as Lego bricks and the envs/dev stack as the board you snap them onto. Each module has a small contract—inputs it expects and outputs it returns. The base-network module builds a VPC and subnets and hands back their IDs. The App Runner module takes a container image, environment variables, and tags, and produces a running service plus its URL. In the root stack you pass outputs from one module (tags, subnet IDs if you need them later) into another, keeping all environment-specific values—like the image URI or connection strings—in one place. Terraform reads these files, figures out the dependency graph automatically from the references, and applies changes in the right order so you do not have to script the sequence yourself.

Design principles for simple modules

Before writing HCL, set a few ground rules that keep modules understandable:

- Narrow scope: One concern per module. Network, IAM, service. If a module starts collecting unrelated flags, split it.

- Typed inputs: Use `type` and `validation` on variables. It saves review time and prevents surprising plans.

- Helpful defaults: Defaults are fine for convenience, but do not hide environment-specific values inside modules. Keep env data in root stacks.

- Straightforward outputs: Export IDs and ARNs that downstream modules actually need. Avoid leaking internal resource names.

- Document quickly: A 10-line README with inputs, outputs, and an example is enough.

- Avoid magic locals: If a value matters to callers, expose it via a variable or output instead of burying it in `locals`.
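Here is how typed inputs with validation could look for the App Runner module defined later in this article. This is a sketch. The rules are assumptions about sensitive values, and `startswith` needs Terraform 1.3 or later. These declarations would replace the plain `health_check_path` and `port` variables, because Terraform rejects duplicate variable names.

```hcl
# Sketch: stricter versions of two app-runner-service inputs.
variable "health_check_path" {
  type        = string
  description = "Path for HTTP health checks"
  default     = "/"

  validation {
    condition     = startswith(var.health_check_path, "/")
    error_message = "health_check_path must start with '/', e.g. /health."
  }
}

variable "port" {
  type        = string
  description = "Container port"
  default     = "8080"

  validation {
    # The provider takes the port as a string, so check that it is all digits.
    condition     = can(regex("^[0-9]+$", var.port))
    error_message = "port must be a numeric string such as 8080."
  }
}
```

A typo like `health` instead of `/health` now fails at `terraform validate` or `plan` time instead of surfacing as an unhealthy service.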

Building a base module (network + tagging)

App Runner can run without a VPC connector, but most teams still want a lightweight network module to standardize CIDR blocks, subnet naming, and tagging. This module creates a VPC, a pair of subnets intended to be public, and an Internet Gateway. You can extend it later with route tables or VPC connectors if you need private networking. The subnets only become truly public once a route table sends `0.0.0.0/0` to the gateway.

modules/base-network/variables.tf

```hcl
variable "name" {
  description = "Base name for network resources"
  type        = string
}

variable "cidr_block" {
  description = "CIDR for the VPC"
  type        = string
  default     = "10.0.0.0/16"
}

variable "tags" {
  description = "Common tags to apply"
  type        = map(string)
  default     = {}
}
```

Add a small validation block to `cidr_block` to catch typos early. Put it on the existing declaration instead of declaring the variable a second time, because Terraform rejects duplicate variable names:

```hcl
variable "cidr_block" {
  description = "CIDR for the VPC"
  type        = string
  default     = "10.0.0.0/16"

  # cidrnetmask() fails on malformed input, so can() turns that into false.
  validation {
    condition     = can(cidrnetmask(var.cidr_block))
    error_message = "cidr_block must be a valid CIDR, e.g. 10.0.0.0/16."
  }
}
```

modules/base-network/main.tf

```hcl
resource "aws_vpc" "this" {
  cidr_block           = var.cidr_block
  enable_dns_support   = true
  enable_dns_hostnames = true
  tags                 = merge(var.tags, { Name = "${var.name}-vpc" })
}

# Two /20 subnets carved from the VPC CIDR, spread across the first two AZs.
resource "aws_subnet" "public" {
  count                   = 2
  vpc_id                  = aws_vpc.this.id
  cidr_block              = cidrsubnet(var.cidr_block, 4, count.index)
  map_public_ip_on_launch = true
  availability_zone_id    = element(data.aws_availability_zones.available.zone_ids, count.index)
  tags                    = merge(var.tags, { Name = "${var.name}-public-${count.index + 1}" })
}

data "aws_availability_zones" "available" {
  state = "available"
}

resource "aws_internet_gateway" "igw" {
  vpc_id = aws_vpc.this.id
  tags   = merge(var.tags, { Name = "${var.name}-igw" })
}
```

modules/base-network/outputs.tf

```hcl
output "vpc_id" {
  value = aws_vpc.this.id
}

output "public_subnet_ids" {
  value = aws_subnet.public[*].id
}

output "tags" {
  value = var.tags
}
```

Notes:

- Two public subnets give you basic high availability. If you need private subnets later, you can extend the module without changing callers because the outputs stay stable.

- Tags flow through the module so cost allocation and reporting stay consistent across services.

- If you do not need VPC connectivity for App Runner, you can still use this module to standardize tagging for other resources (S3, SQS, DynamoDB).
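As written, the subnets set `map_public_ip_on_launch` but have no route to the Internet Gateway, so they fall back to the VPC's main route table and have no internet path. Below is a minimal sketch of the missing routing for `modules/base-network/main.tf`. The resource names are illustrative.

```hcl
# Sketch: a public route table that sends internet-bound traffic to the IGW.
resource "aws_route_table" "public" {
  vpc_id = aws_vpc.this.id
  tags   = merge(var.tags, { Name = "${var.name}-public-rt" })
}

resource "aws_route" "internet" {
  route_table_id         = aws_route_table.public.id
  destination_cidr_block = "0.0.0.0/0"
  gateway_id             = aws_internet_gateway.igw.id
}

# Associate every public subnet with that route table.
resource "aws_route_table_association" "public" {
  count          = length(aws_subnet.public)
  subnet_id      = aws_subnet.public[count.index].id
  route_table_id = aws_route_table.public.id
}
```

Callers are unaffected because no inputs or outputs change.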

Building an App Runner service module

The goal is a small, predictable module: it needs a container image, environment variables, and an IAM role that lets App Runner pull from ECR. Everything else (health check path, auto deployments) stays explicit so you can see what the service will do.

modules/app-runner-service/variables.tf

```hcl
variable "name" {
  type        = string
  description = "Service name"
}

variable "image" {
  type        = string
  description = "Container image URI in a private Amazon ECR repository"
}

variable "env_vars" {
  type        = map(string)
  description = "Environment variables for the service"
  default     = {}
}

variable "health_check_path" {
  type        = string
  description = "Path for HTTP health checks"
  default     = "/"
}

variable "port" {
  # The AWS provider expects the App Runner port as a string.
  type        = string
  description = "Container port"
  default     = "8080"
}

variable "tags" {
  type        = map(string)
  description = "Tags to apply"
  default     = {}
}
```

modules/app-runner-service/main.tf

```hcl
# Trust policy: the App Runner build service assumes this role to pull images.
data "aws_iam_policy_document" "assume_apprunner" {
  statement {
    actions = ["sts:AssumeRole"]

    principals {
      type        = "Service"
      identifiers = ["build.apprunner.amazonaws.com"]
    }
  }
}

# Permissions needed to authenticate to ECR and download image layers.
data "aws_iam_policy_document" "ecr_access" {
  statement {
    actions = [
      "ecr:GetAuthorizationToken",
      "ecr:BatchCheckLayerAvailability",
      "ecr:GetDownloadUrlForLayer",
      "ecr:BatchGetImage"
    ]
    resources = ["*"]
  }
}

resource "aws_iam_role" "service" {
  name               = "${var.name}-apprunner-role"
  assume_role_policy = data.aws_iam_policy_document.assume_apprunner.json
  tags               = var.tags
}

resource "aws_iam_role_policy" "ecr_pull" {
  name   = "${var.name}-ecr-pull"
  role   = aws_iam_role.service.id
  policy = data.aws_iam_policy_document.ecr_access.json
}

resource "aws_apprunner_service" "this" {
  service_name = var.name

  source_configuration {
    image_repository {
      image_identifier      = var.image
      image_repository_type = "ECR"

      image_configuration {
        port                          = var.port
        runtime_environment_variables = var.env_vars
      }
    }

    authentication_configuration {
      access_role_arn = aws_iam_role.service.arn
    }

    # Deploys are triggered explicitly (e.g. from CI/CD), not by image pushes.
    auto_deployments_enabled = false
  }

  health_check_configuration {
    protocol = "HTTP"
    path     = var.health_check_path
  }

  tags = var.tags

  # The service only references the role ARN, so make sure the ECR pull
  # policy is attached before App Runner tries to fetch the image.
  depends_on = [aws_iam_role_policy.ecr_pull]
}
```

modules/app-runner-service/outputs.tf

```hcl
output "service_url" {
  # Default domain assigned by App Runner (no https:// prefix).
  value = aws_apprunner_service.this.service_url
}

output "service_arn" {
  value = aws_apprunner_service.this.arn
}
```

Notes:

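- `auto_deployments_enabled` is `false` so you can control deploys via CI/CD. Turn it on if you want App Runner to pull new images automatically.

- The health check path is a variable so you can point it at `/health` or `/ready` for ASP.NET apps that expose a health endpoint.

- The IAM role is App Runner's access role, and it is scoped to ECR pulls only. If the running app later needs AWS permissions, for example to read Secrets Manager secrets, those permissions belong on a separate instance role trusted by `tasks.apprunner.amazonaws.com`. Add that role, and any VPC connector, to this module rather than the root stack, so the contract stays in one place.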
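App Runner separates two roles. The access role above is trusted by `build.apprunner.amazonaws.com` and pulls images. An instance role, trusted by `tasks.apprunner.amazonaws.com`, is assumed by the running container. Below is a sketch of an instance role that can read Secrets Manager secrets. The `secret_arns` input and all resource names are hypothetical, and the role only takes effect once you reference it from `instance_configuration`, as shown in the trailing comment.

```hcl
# Hypothetical additions to modules/app-runner-service
# (by convention the variable would live in variables.tf).
variable "secret_arns" {
  type        = list(string)
  description = "Secrets Manager ARNs the running app may read"
  default     = []
}

# Trust policy for the runtime (instance) role, not the image-pull role.
data "aws_iam_policy_document" "assume_instance" {
  statement {
    actions = ["sts:AssumeRole"]

    principals {
      type        = "Service"
      identifiers = ["tasks.apprunner.amazonaws.com"]
    }
  }
}

resource "aws_iam_role" "instance" {
  name               = "${var.name}-apprunner-instance-role"
  assume_role_policy = data.aws_iam_policy_document.assume_instance.json
  tags               = var.tags
}

# Only build and attach the read policy when there is something to read.
data "aws_iam_policy_document" "read_secrets" {
  count = length(var.secret_arns) > 0 ? 1 : 0

  statement {
    actions   = ["secretsmanager:GetSecretValue"]
    resources = var.secret_arns
  }
}

resource "aws_iam_role_policy" "read_secrets" {
  count  = length(var.secret_arns) > 0 ? 1 : 0
  name   = "${var.name}-read-secrets"
  role   = aws_iam_role.instance.id
  policy = data.aws_iam_policy_document.read_secrets[0].json
}

# Then, inside aws_apprunner_service.this:
#   instance_configuration {
#     instance_role_arn = aws_iam_role.instance.arn
#   }
```

Existing callers keep working because `secret_arns` defaults to an empty list.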

Wiring modules together in an environment

The root stack lives under envs/dev. It sets shared tags, consumes the base module, and then passes tags and names into the App Runner module. Keep provider versions pinned and backends explicit so teams do not collide on state.

envs/dev/backend.tf

```hcl
terraform {
  backend "s3" {
    bucket         = "my-terraform-state-bucket"
    key            = "apprunner/dev/terraform.tfstate"
    region         = "us-east-1"
    dynamodb_table = "terraform-locks" # must already exist, partition key "LockID" (string)
  }
}
```

envs/dev/main.tf

```hcl
terraform {
  required_version = ">= 1.7.0"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.40"
    }
  }
}

provider "aws" {
  region = "us-east-1"
}

locals {
  tags = {
    Project = "DotNetAppRunner"
    Env     = "dev"
    Owner   = "platform"
  }
}

module "network" {
  source     = "../../modules/base-network"
  name       = "dotnet-dev"
  tags       = local.tags
  cidr_block = "10.0.0.0/16"
}

module "app_runner" {
  source = "../../modules/app-runner-service"
  name   = "dotnet-dev-orders"
  image  = "123456789012.dkr.ecr.us-east-1.amazonaws.com/orders:latest"

  env_vars = {
    ASPNETCORE_ENVIRONMENT = "Development"
    # Demo value only: keep real credentials in Secrets Manager or SSM.
    ConnectionStrings__App = "Server=mydb;Database=orders;User Id=app;Password=secret;"
  }

  health_check_path = "/health"
  port              = "8080"
  tags              = local.tags
}
```

envs/dev/outputs.tf

```hcl
output "app_runner_url" {
  value = module.app_runner.service_url
}
```

Notes:

- Keep environment-specific values in the root stack: image tags, connection strings, and names. The module stays generic.

- If you add a VPC connector later, the network module already exposes subnet IDs; the App Runner module interface can grow to accept those IDs without breaking existing consumers.

- The backend uses an S3 bucket for remote state. If you are experimenting alone, the default local backend is fine. Teams should share the S3 backend so everyone plans against the same state and the lock serializes applies.
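Growing the App Runner module to accept the network's subnet IDs could look like the sketch below. The input names, the connector name, and the security group are assumptions. `aws_apprunner_vpc_connector` needs at least one security group, and both inputs default to empty lists, so existing callers are unaffected. With VPC egress, all outbound traffic from the service goes through the VPC. Internet access would then typically need private subnets behind a NAT gateway.

```hcl
# Hypothetical inputs for modules/app-runner-service/variables.tf.
variable "subnet_ids" {
  type        = list(string)
  description = "Subnets for a VPC connector; leave empty to keep default egress"
  default     = []
}

variable "security_group_ids" {
  type        = list(string)
  description = "Security groups for the connector; required when subnet_ids is set"
  default     = []
}

# Created only when the caller passes subnets.
resource "aws_apprunner_vpc_connector" "this" {
  count              = length(var.subnet_ids) > 0 ? 1 : 0
  vpc_connector_name = "${var.name}-vpc"
  subnets            = var.subnet_ids
  security_groups    = var.security_group_ids
  tags               = var.tags
}

# Then, inside aws_apprunner_service.this:
#   dynamic "network_configuration" {
#     for_each = aws_apprunner_vpc_connector.this
#     content {
#       egress_configuration {
#         egress_type       = "VPC"
#         vpc_connector_arn = network_configuration.value.arn
#       }
#     }
#   }
#
# And in envs/dev/main.tf, inside module "app_runner":
#   subnet_ids         = module.network.public_subnet_ids
#   security_group_ids = [aws_security_group.apprunner.id] # hypothetical SG
```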

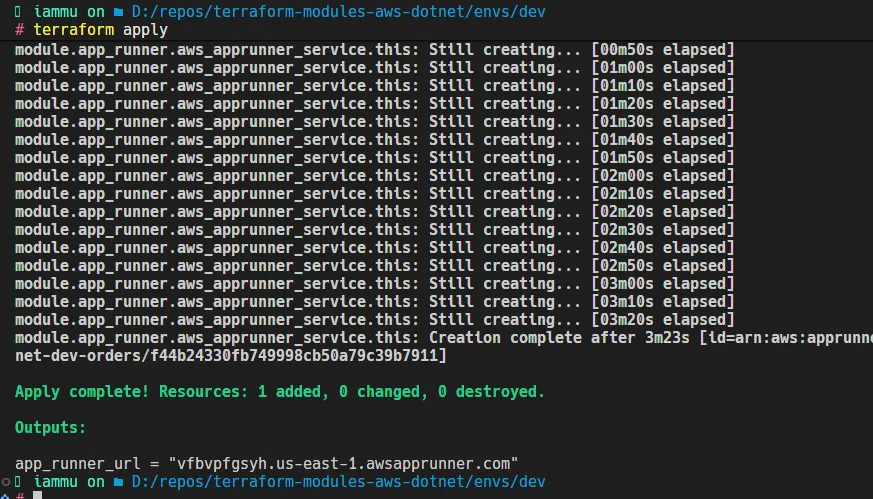

Running the stack

Before you run anything, do three things:
- Create an S3 bucket for Terraform state (for example `my-terraform-state-bucket`).
- Create the `terraform-locks` DynamoDB table with a string partition key named `LockID`, then put both names in `envs/dev/backend.tf`.
- Set the App Runner image URI in `envs/dev/main.tf` to your own ECR image.

Then, from `envs/dev`, run `terraform fmt`, `terraform init`, `terraform validate`, `terraform plan`, and finally `terraform apply`. Each step has a job:
- `fmt` keeps files consistent.
- `init` downloads the providers and configures the backend.
- `validate` catches syntax errors and missing or mistyped module arguments.
- `plan` is your review artifact (treat it like a PR preview).
- `apply` should only follow a plan you have read and approved.
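One way to create the state bucket and lock table is a tiny bootstrap configuration that you apply once with local state. This is a sketch. The `bootstrap/` folder is hypothetical, and the names simply mirror `envs/dev/backend.tf`. S3 bucket names are global, so pick your own.

```hcl
# bootstrap/main.tf (hypothetical folder): apply once with the local backend.
terraform {
  required_version = ">= 1.7.0"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.40"
    }
  }
}

provider "aws" {
  region = "us-east-1"
}

resource "aws_s3_bucket" "state" {
  bucket = "my-terraform-state-bucket"
}

# Versioning lets you recover an earlier state file after a bad apply.
resource "aws_s3_bucket_versioning" "state" {
  bucket = aws_s3_bucket.state.id

  versioning_configuration {
    status = "Enabled"
  }
}

# State can contain secrets, so block every form of public access.
resource "aws_s3_bucket_public_access_block" "state" {
  bucket                  = aws_s3_bucket.state.id
  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}

# The S3 backend expects a string partition key named exactly "LockID".
resource "aws_dynamodb_table" "locks" {
  name         = "terraform-locks"
  billing_mode = "PAY_PER_REQUEST"
  hash_key     = "LockID"

  attribute {
    name = "LockID"
    type = "S"
  }
}
```

Keep this stack separate from `envs/`, so a `terraform destroy` in an environment folder never removes the bucket that holds its own state.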

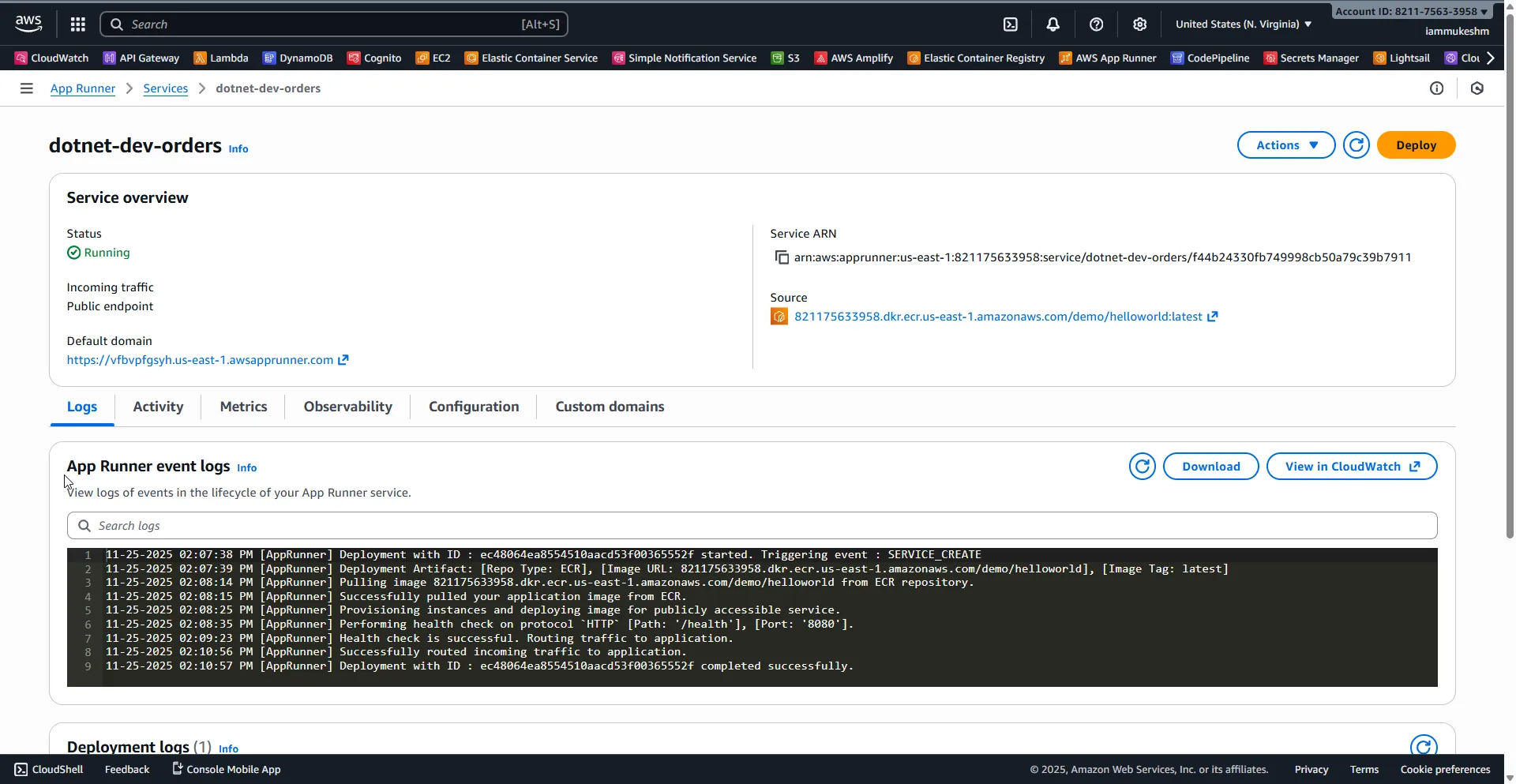

Testing and checking results

After apply, run `terraform output app_runner_url` to grab the service URL. In the AWS console, confirm the App Runner service status is Running and that health checks against your `/health` endpoint pass. If you added a VPC connector, verify it is Active and that the service can reach private resources, either through the logs or a quick HTTP call. Check the CloudWatch logs to make sure the .NET app booted with the expected environment variables. Finally, hit the URL in a browser or with curl:

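```shell
# service_url has no scheme, so prepend https:// (run from envs/dev)
curl -i "https://$(terraform output -raw app_runner_url)"
```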

As you can see, the App Runner service is up and running.
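To turn that manual curl into a repeatable spot check, you can use a `check` block (Terraform 1.5+). It probes the endpoint on every plan and apply and reports a warning, not an error, when the assertion fails. This sketch assumes you add the `hashicorp/http` provider to `required_providers`. The file name, check name, and version constraint are illustrative.

```hcl
# envs/dev/checks.tf (hypothetical file). Also add to required_providers:
#   http = { source = "hashicorp/http", version = "~> 3.4" }
check "orders_health" {
  # Scoped data source: it is read only as part of this check.
  data "http" "health" {
    url = "https://${module.app_runner.service_url}/health"
  }

  assert {
    condition     = data.http.health.status_code == 200
    error_message = "GET /health returned ${data.http.health.status_code}, expected 200."
  }
}
```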

Common pitfalls to avoid

Avoid over-generic modules—if you see dozens of optional flags, split the scope. Keep secrets and environment-specific values in root stacks or CI/CD, not inside modules. Pin provider and module versions to dodge surprise upgrades. Pass IDs and ARNs via outputs instead of referencing resources by name. Apply only from envs/{env} so state stays clean, and keep a short README per module so future you does not reread HCL.
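Once modules live in their own repository, pinning applies to them as well. Below is a sketch that assumes a hypothetical Git repository and tag. In the `source` string, `//` selects the module subdirectory and `?ref=` pins a Git tag.

```hcl
module "app_runner" {
  # Hypothetical repository and tag.
  source = "git::https://github.com/your-org/terraform-modules.git//modules/app-runner-service?ref=v1.2.0"

  name = "dotnet-dev-orders"
  # A hypothetical immutable image tag: each release then shows up in the plan.
  image             = "123456789012.dkr.ecr.us-east-1.amazonaws.com/orders:1.4.2"
  health_check_path = "/health"
  tags              = local.tags
}
```

Upgrading the module then becomes a one-line diff to the `ref`, which reviewers can see and approve.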

Growing the pattern (without making it complex)

Once the basics feel solid, extend gradually. Add a modules/ssm-parameters or modules/secrets-manager module and pass parameter names into the App Runner module instead of baking secrets into env_vars. Create a small module for CloudWatch alarms or dashboards, pin key metrics, and tag alarms for ownership. In CI/CD (GitHub Actions or Azure DevOps), run fmt/validate/plan on PRs and apply on main, using per-env folders or workspaces to keep state separate. Add more App Runner services to the same root stack, with consistent tags and shared network outputs. Use validation blocks on inputs, and set `sensitive = true` on inputs like connection strings so their values are redacted from plan output (they are still stored in state).
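Below is a sketch of passing a parameter ARN instead of a plaintext connection string. It uses the provider's `runtime_environment_secrets` argument on `image_configuration`, plus a hypothetical `env_secrets` module input and a hypothetical parameter ARN. The service also needs an instance role allowed to read that parameter (for SSM, `ssm:GetParameters`), which this sketch does not show.

```hcl
# modules/app-runner-service/variables.tf: hypothetical new input.
variable "env_secrets" {
  type        = map(string)
  description = "Env var name => ARN of a Secrets Manager secret or SSM parameter"
  default     = {}
}

# modules/app-runner-service/main.tf: extend the existing image_configuration.
#   image_configuration {
#     port                          = var.port
#     runtime_environment_variables = var.env_vars
#     runtime_environment_secrets   = var.env_secrets
#   }

# envs/dev/main.tf: the plaintext connection string moves out of env_vars.
module "app_runner" {
  source = "../../modules/app-runner-service"
  name   = "dotnet-dev-orders"
  image  = "123456789012.dkr.ecr.us-east-1.amazonaws.com/orders:latest"

  env_vars = {
    ASPNETCORE_ENVIRONMENT = "Development"
  }

  env_secrets = {
    # Hypothetical SecureString parameter; App Runner resolves it for the container.
    ConnectionStrings__App = "arn:aws:ssm:us-east-1:123456789012:parameter/orders/dev/connection-string"
  }

  health_check_path = "/health"
  tags              = local.tags
}
```

Only the ARN ends up in Terraform state and plan output. The secret value itself is managed outside this stack.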

Quick README template for modules

Each module can carry a lightweight README to speed up reviews. Keep it this short; it is enough context for a reviewer to understand intent without digging through every resource:

# app-runner-service

Creates an AWS App Runner service backed by an ECR image with a role for image pulls.

## Inputs

- `name` (string, required): Service name.
- `image` (string, required): Image URI (ECR).
- `env_vars` (map(string), optional): Environment variables.
- `health_check_path` (string, optional): Defaults to "/".
- `port` (string, optional): Defaults to "8080".
- `tags` (map(string), optional): Common tags.

## Outputs

- `service_url`: Default public domain of the App Runner service (without `https://`).
- `service_arn`: ARN of the App Runner service.

## Example

```hcl
module "app_runner" {
  source            = "../../modules/app-runner-service"
  name              = "dotnet-dev-orders"
  image             = "123456789012.dkr.ecr.us-east-1.amazonaws.com/orders:latest"
  env_vars          = { ASPNETCORE_ENVIRONMENT = "Development" }
  health_check_path = "/health"
  tags              = local.tags
}
```

Recap: why this works for .NET teams

The layout is predictable, like a clean .NET solution, so it is obvious where to add or change infrastructure pieces. Modules keep contracts small—network, service, IAM—without turning into frameworks, which makes reuse easy: copy envs/dev, swap the image and env vars, and move on. Inputs and outputs stay stable, so you can refactor internals without breaking consumers.

Looking for a larger example?

If you want to see a production-scale Terraform setup, check the terraform folder in the fullstackhero starter kit: https://github.com/fullstackhero/dotnet-starter-kit/tree/develop/terraform. It bootstraps remote state with S3, then composes richer modules for VPC, ALB, ECS cluster/services, RDS Postgres, ElastiCache Redis, and S3 buckets under modules/, and wires them together per environment/region under envs/dev|staging|prod/<region>. The modules/app_stack/main.tf file shows the pattern: create network and ECS cluster modules, attach an ALB, stand up RDS and Redis, and deploy two ECS services (API and Blazor) with health checks and path-based routing:

```hcl
module "network" {
  source          = "../network"
  name            = "${var.environment}-${var.region}"
  cidr_block      = var.vpc_cidr_block
  public_subnets  = var.public_subnets
  private_subnets = var.private_subnets
}

module "ecs_cluster" {
  source = "../ecs_cluster"
  name   = "${var.environment}-${var.region}-cluster"
}

module "alb" {
  source          = "../alb"
  vpc_id          = module.network.vpc_id
  subnets         = module.network.public_subnet_ids
  certificate_arn = var.certificate_arn
}

module "rds" {
  source   = "../rds_postgres"
  vpc_id   = module.network.vpc_id
  subnets  = module.network.private_subnet_ids
  username = var.db_username
  password = var.db_password
}

module "redis" {
  source     = "../elasticache_redis"
  vpc_id     = module.network.vpc_id
  subnet_ids = module.network.private_subnet_ids
}

module "api_service" {
  source        = "../ecs_service"
  cluster_arn   = module.ecs_cluster.arn
  listener_arn  = module.alb.listener_arn
  path_patterns = ["/api/*"]

  environment_variables = {
    ASPNETCORE_ENVIRONMENT            = var.environment
    DatabaseOptions__ConnectionString = module.rds.endpoint
    CachingOptions__Redis             = "${module.redis.primary_endpoint_address}:6379"
  }
}

module "blazor_service" {
  source        = "../ecs_service"
  cluster_arn   = module.ecs_cluster.arn
  listener_arn  = module.alb.listener_arn
  path_patterns = ["/*"]

  environment_variables = {
    ASPNETCORE_ENVIRONMENT = var.environment
    Api__BaseUrl           = "http://${module.alb.dns_name}"
  }
}
```

It is the same Lego-brick idea as this article, just with more pieces for a full stack.

If you want to pull the code from this article, the full source for the base network and App Runner modules plus the dev stack lives at https://github.com/iammukeshm/terraform-modules-aws-dotnet.

Wrap-up and next steps

You now have a simple, repeatable module layout: base infrastructure plus an App Runner service module that any .NET teammate can consume. The structure keeps tags, IAM, and service settings consistent while making refactors safer. From here, add a secrets/config module (SSM or Secrets Manager) and pass parameter names into the App Runner module, extend the App Runner module with scaling or VPC connectors as needed, mirror the pattern for ECS or Lambda, and plug root stacks into CI/CD so fmt/validate/plan run on pull requests and apply runs on main. When you are ready for the next level, revisit the earlier Terraform articles, refactor those stacks into modules, and keep the contracts as tight as you keep your .NET APIs. Let me know which part you want to deepen next—observability modules or secrets management are both natural follow-ups.

One last reminder: always clean up after experiments. When you are done exploring, run terraform destroy from your env folder to tear down the resources and keep AWS costs under control.