DynamoDB Streams offer a powerful way to capture real-time changes in your DynamoDB tables, enabling event-driven architectures, auditing, replication, and more. In this guide, I will walk you through everything .NET developers need to know to get started with DynamoDB Streams.

You’ll learn how DynamoDB Streams work, their key benefits, and how to process stream records efficiently using AWS SDK for .NET. We’ll cover common use cases, setting up a Lambda function to consume stream events, and best practices for handling updates, inserts, and deletes in a scalable way.

By the end of this guide, you’ll have a solid foundation for integrating DynamoDB Streams into your .NET applications, unlocking real-time data processing capabilities to enhance your architecture. Whether you’re building event-driven microservices, syncing data across systems, or implementing change tracking, this article will provide the step-by-step guidance you need to get started quickly.

This article is sponsored by AWS. Huge thanks for helping me produce more .NET on AWS content!

What are DynamoDB Streams?

DynamoDB Streams is a powerful feature that captures a time-ordered sequence of item-level changes in a DynamoDB table and makes them available for real-time processing. Whenever an item in the table is inserted, updated, or deleted, a corresponding event is written to the stream. These events can then be consumed by other AWS services, such as AWS Lambda or Amazon Kinesis, to trigger actions based on data changes.

Depending on the selected stream view, each event can contain the before image, the after image, or both images of the modified item. This allows applications to react to exactly what changed in the data.

Why Use DynamoDB Streams?

DynamoDB Streams enable real-time event-driven architectures, allowing developers to build highly responsive applications that react instantly to data changes. By capturing every modification—whether an item is inserted, updated, or deleted—DynamoDB Streams provide a seamless way to trigger workflows and automate processes.

One of the key advantages of DynamoDB Streams is event-driven processing. Instead of continuously polling a database for changes, applications can automatically trigger AWS Lambda functions whenever an update occurs. This makes it easier to process transactions, send notifications, or integrate with other AWS services without unnecessary overhead.

For businesses requiring audit logging, DynamoDB Streams serve as a reliable way to maintain a complete history of data changes. This is particularly useful for compliance, debugging, and tracking user activity, ensuring that every modification is recorded and easily retrievable.

Another crucial use case is cross-region replication. With DynamoDB Streams, developers can synchronize data across multiple AWS regions, ensuring high availability and disaster recovery. This is especially valuable for global applications that need consistent and up-to-date data access.

Beyond replication, DynamoDB Streams enhance analytics and monitoring by allowing seamless integration with services like Amazon OpenSearch or AWS Glue. By streaming real-time data changes, businesses can gain valuable insights, detect anomalies, and improve operational efficiency.

Additionally, DynamoDB Streams play a vital role in caching strategies. When data in a database changes, outdated cache entries can lead to inconsistencies. By using Streams, applications can automatically invalidate or update caches, ensuring users always receive the most accurate and up-to-date information.

Common Use Cases for DynamoDB Streams

DynamoDB Streams is widely used across various domains. Here are some common scenarios:

🔹 Real-time Notifications – Send alerts when an item changes (e.g., order status updates in e-commerce).

🔹 Data Synchronization – Keep multiple services in sync by processing changes in near real-time.

🔹 Replication Across Regions – Maintain a global data store with automatic updates.

🔹 Change Data Capture (CDC) – Capture database changes for downstream processing.

🔹 Event Sourcing – Track every state change for audit trails and debugging.

With DynamoDB Streams, developers can build scalable, event-driven applications without polling the database, reducing operational overhead and improving system responsiveness.

How DynamoDB Streams Work

DynamoDB Streams captures item-level modifications in a DynamoDB table and stores them as a sequence of stream records. Each record appears exactly once in the stream, and for any given item the records appear in the same order as the modifications themselves. Records are retained for 24 hours, after which they are removed from the stream. Applications can consume these records in near real time to trigger actions, synchronize data, or analyze changes.

How Data Changes are Captured

Whenever an insert, update, or delete operation occurs on a DynamoDB table, a corresponding stream record is created. These records contain information about the change, including:

✅ Event Type – INSERT, MODIFY, or REMOVE

✅ Timestamp – When the change occurred

✅ Primary Key – Identifies the affected item

✅ Before & After Images – Based on the selected stream view

These records are delivered to consumers such as AWS Lambda, Kinesis, or a custom polling service, enabling real-time processing of data changes.

Stream Views in DynamoDB Streams

DynamoDB Streams allows you to configure how much data is recorded in stream events. There are four stream view types, each offering a different level of detail:

1️⃣ Keys Only (KEYS_ONLY) – Records only the key attributes of changed items, that is, the partition key and the sort key if the table has one (minimal data).

2️⃣ New Image (NEW_IMAGE) – Stores the new version of the item after a change.

3️⃣ Old Image (OLD_IMAGE) – Stores the previous version of the item before a change.

4️⃣ Both Images (NEW_AND_OLD_IMAGES) – Captures both old and new versions, useful for detailed change tracking.

| Stream View Type | Contains | Use Case |
|---|---|---|
| Keys Only | Primary Key | Basic change detection, minimal storage |
| New Image | After-change item | Syncing latest data to another system |
| Old Image | Before-change item | Auditing deleted/modified data |
| Both Images | Before & After | Full change tracking, analytics |

Data Flow in DynamoDB Streams

Here’s how DynamoDB Streams integrates with your application:

1️⃣ Table Change – A new item is added, modified, or deleted in DynamoDB.

2️⃣ Stream Record Created – DynamoDB generates a stream event based on the selected stream view.

3️⃣ Event Processing – The event is read by a consumer (AWS Lambda, Kinesis, etc.).

4️⃣ Action Triggered – The consumer takes action, such as updating a cache, notifying users, or synchronizing data.

Example Stream Record (Modify Event)

Here’s what a DynamoDB Stream event looks like when an item is updated:

```json
{
  "eventID": "1",
  "eventName": "MODIFY",
  "dynamodb": {
    "Keys": { "UserId": { "S": "12345" } },
    "OldImage": { "UserId": { "S": "12345" }, "Balance": { "N": "100" } },
    "NewImage": { "UserId": { "S": "12345" }, "Balance": { "N": "150" } }
  }
}
```

🔹 The above event indicates that UserId 12345 had their Balance updated from 100 to 150.
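To make the shape of that record concrete, here is a minimal sketch that reads the same JSON with System.Text.Json and summarises the change. The StreamRecordReader class and its method names are my own illustration, not part of any AWS package, and in a real Lambda you would normally work with the typed DynamoDBEvent object instead of raw JSON.

```csharp
using System;
using System.Globalization;
using System.Text.Json;

// Hypothetical helper for illustration only; not part of the AWS SDK.
public static class StreamRecordReader
{
    // Reads a numeric ("N") attribute from the named image, or null if it is absent.
    public static decimal? ReadNumber(JsonElement dynamodb, string image, string attribute)
    {
        if (dynamodb.TryGetProperty(image, out var img) &&
            img.TryGetProperty(attribute, out var attr) &&
            attr.TryGetProperty("N", out var n))
        {
            // DynamoDB JSON encodes numbers as strings.
            return decimal.Parse(n.GetString()!, CultureInfo.InvariantCulture);
        }
        return null;
    }

    // Builds a one-line summary of a stream record shaped like the sample above.
    public static string Describe(string recordJson)
    {
        using var doc = JsonDocument.Parse(recordJson);
        var root = doc.RootElement;
        var eventName = root.GetProperty("eventName").GetString();
        var dynamodb = root.GetProperty("dynamodb");
        var userId = dynamodb.GetProperty("Keys").GetProperty("UserId").GetProperty("S").GetString();
        var before = ReadNumber(dynamodb, "OldImage", "Balance");
        var after = ReadNumber(dynamodb, "NewImage", "Balance");
        return $"{eventName} UserId={userId} Balance {before} -> {after}";
    }
}
```

Calling StreamRecordReader.Describe with the sample record returns "MODIFY UserId=12345 Balance 100 -> 150". For an INSERT the OldImage is missing, so the "before" value would simply be empty.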

Processing DynamoDB Streams

There are multiple ways to consume and process DynamoDB Streams:

✅ AWS Lambda – The easiest way to process stream events serverlessly.

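✅ Amazon Kinesis – For high-volume stream processing with multiple consumers, either through the Kinesis Client Library with the DynamoDB Streams Kinesis Adapter or through the separate Kinesis Data Streams for DynamoDB integration.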

✅ Custom Polling – Using the AWS SDK to read stream records manually.
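We will use Lambda for the rest of this article, but to give you an idea of what custom polling involves, here is a rough sketch using the low-level streams client from the AWSSDK.DynamoDBv2 package. It assumes default AWS credentials and a stream ARN passed as the first command-line argument, and it reads only one page of records per shard. A production poller would also need to follow NextShardIterator, track child shards, and checkpoint its position.

```csharp
using System;
using Amazon.DynamoDBv2;
using Amazon.DynamoDBv2.Model;

// Assumption: the stream ARN is supplied as the first command-line argument.
var streamArn = args.Length > 0
    ? args[0]
    : throw new ArgumentException("Pass the DynamoDB stream ARN as the first argument.");

using var client = new AmazonDynamoDBStreamsClient();

// A stream is split into shards; each shard is read through its own iterator.
var description = await client.DescribeStreamAsync(new DescribeStreamRequest { StreamArn = streamArn });

foreach (var shard in description.StreamDescription.Shards)
{
    var iteratorResponse = await client.GetShardIteratorAsync(new GetShardIteratorRequest
    {
        StreamArn = streamArn,
        ShardId = shard.ShardId,
        // TRIM_HORIZON starts at the oldest record still retained in the shard.
        ShardIteratorType = ShardIteratorType.TRIM_HORIZON
    });

    // Read a single page of records from this shard.
    var recordsResponse = await client.GetRecordsAsync(new GetRecordsRequest
    {
        ShardIterator = iteratorResponse.ShardIterator,
        Limit = 100
    });

    foreach (var record in recordsResponse.Records)
    {
        Console.WriteLine($"{record.EventName} (sequence {record.Dynamodb.SequenceNumber})");
    }
}
```

This is exactly the bookkeeping that the Lambda event source mapping handles for you, which is why Lambda is usually the simpler choice.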

What We’ll Build

In this demonstration, we will build a simple Web API that performs CRUD operations against a DDB table, along with a Lambda that is triggered whenever data changes within the table.

CRUD Web API

I am going to reuse the CRUD API that we had built in a previous article, CRUD with DynamoDB in ASP.NET Core - Getting Started with AWS DynamoDB Simplified. I have upgraded this source code to .NET 8 and used Minimal APIs.

It provides endpoints to create, retrieve, update, and delete students, using Minimal APIs and Dependency Injection to keep the code clean. All data access goes through IDynamoDBContext. Students are uniquely identified by an id, and key details like firstName, lastName, class, and country are stored. The API follows REST conventions and returns appropriate status codes for better client interaction.

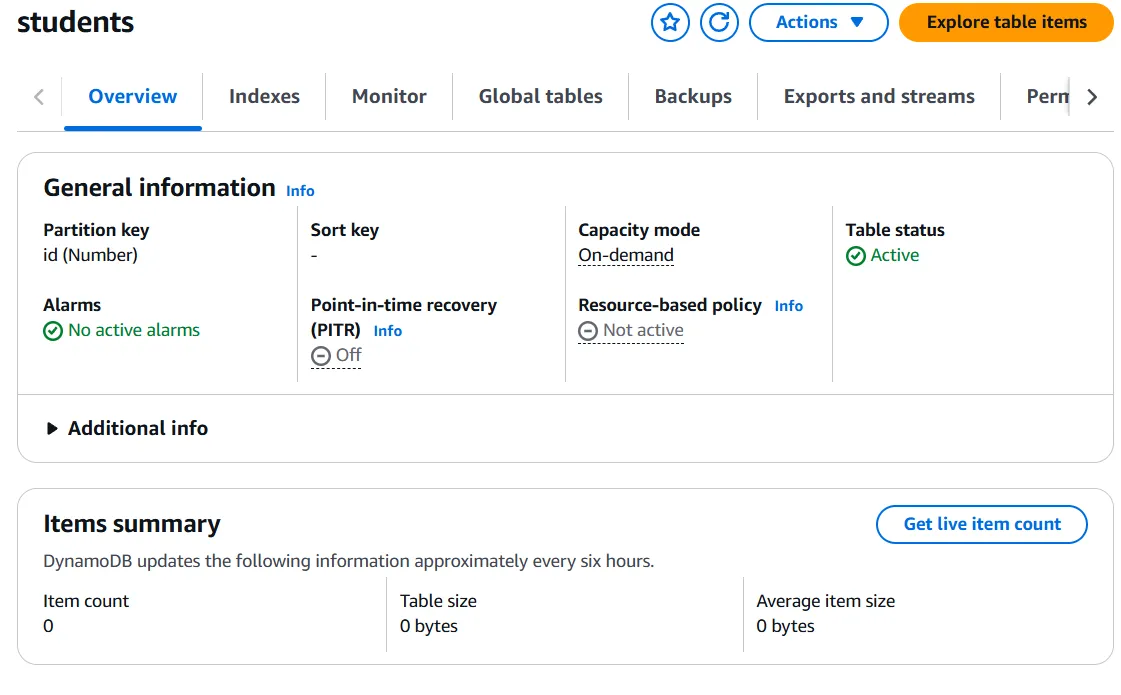

I have also gone forward and created a “students” table on Amazon DDB with “id” as the Primary Key.

AWS Lambda - DDB Change Handler

To make our system more event-driven, we will integrate AWS Lambda with our DynamoDB table. Whenever data changes within the table (Insert, Update, or Delete operations), a DynamoDB Stream will trigger a Lambda function. This Lambda function can be used for various purposes, such as:

- Real-time data processing (e.g., logging changes, analytics)

- Triggering notifications (e.g., sending emails when a student record is created)

- Data synchronization (e.g., updating a cache or another database)

To achieve this, we will have to first create an AWS Lambda that can be triggered whenever data in the DDB table changes.

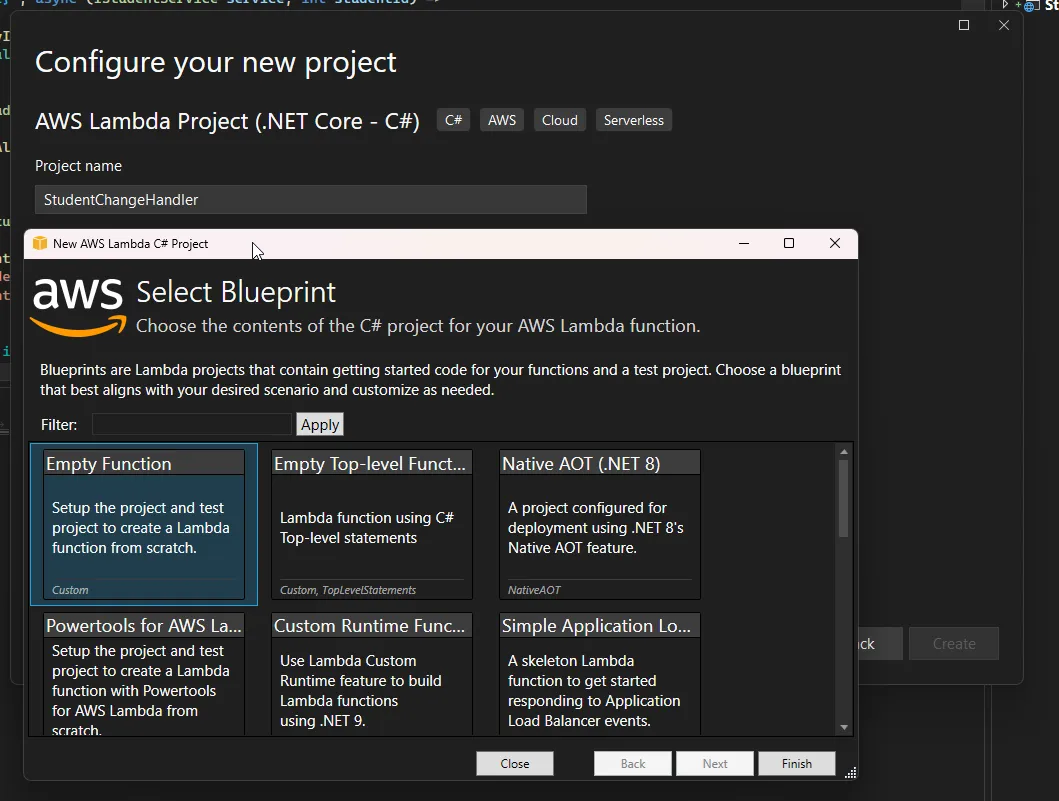

Let’s first create an AWS Lambda C# Project.

I will name my project as “StudentChangeHandler”, and from the Blueprint Selection, I will go with an Empty Function.

Next, install the Amazon.Lambda.DynamoDBEvents package, which provides built-in support for handling DynamoDB stream events in AWS Lambda.

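Run the following command in the NuGet Package Manager Console (from a regular terminal, the equivalent is dotnet add package Amazon.Lambda.DynamoDBEvents):

```powershell
Install-Package Amazon.Lambda.DynamoDBEvents
```

This package provides the DynamoDBEvent type that our Lambda function receives whenever the DynamoDB table changes.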

Now, let’s move on to implementing the Lambda handler!

```csharp
using Amazon.Lambda.Core;
using Amazon.Lambda.DynamoDBEvents;

public class Function
{
    public void FunctionHandler(DynamoDBEvent ddbEvent, ILambdaContext context)
    {
        context.Logger.LogInformation($"Processing {ddbEvent.Records.Count} Records.");

        foreach (var record in ddbEvent.Records)
        {
            context.Logger.LogInformation($"Event Type : {record.EventName}");

            // OldImage is absent for INSERT events, so guard against null.
            var oldImage = record.Dynamodb.OldImage?.ToJson() ?? "(none)";
            context.Logger.LogInformation($"Old Student Record : {oldImage}");

            // NewImage is absent for REMOVE events, so guard against null.
            var newImage = record.Dynamodb.NewImage?.ToJson() ?? "(none)";
            context.Logger.LogInformation($"New Student Record : {newImage}");
        }

        context.Logger.LogInformation("Processing Completed.");
    }
}
```

The given C# function is an AWS Lambda handler designed to process DynamoDB Streams events. When a change occurs in a DynamoDB table, this function is triggered, receiving a DynamoDBEvent object that contains details of the modifications. It begins by logging the number of records being processed. Then, it iterates through each record in the event and logs the type of event, such as INSERT, MODIFY, or REMOVE, which indicates whether a new record was added, an existing record was updated, or an entry was deleted.

For each record, it retrieves the old and new versions of the item using record.Dynamodb.OldImage and record.Dynamodb.NewImage. These attributes hold the state of the item before and after the change, respectively. An INSERT has no old image and a REMOVE has no new image, so the handler falls back to a placeholder when an image is missing. Otherwise it converts the image to JSON using .ToJson() and logs it for debugging or auditing purposes. Finally, after processing all records, it logs a completion message, so that each execution is traceable in the logs.

Another point to consider is that the number of records passed to each Lambda invocation can be controlled. The BatchSize parameter in the event source mapping configuration sets the maximum number of records per invocation. For DynamoDB Streams, the event source mapping default is 100 and the maximum is 10,000, so set it to 1 explicitly if you want one record per invocation.

Increasing the batch size allows Lambda to process multiple records in a single execution, improving efficiency and reducing invocation costs. However, larger batch sizes may also lead to increased execution time, which could affect performance and error handling. If an error occurs while processing a batch, Lambda by default retries the entire batch. With partial batch responses enabled (the ReportBatchItemFailures response type), the function can instead report the sequence number of the first failed record, so that Lambda retries only from that record onward. This provides flexibility in handling failures without reprocessing the records that already succeeded.
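Here is a minimal sketch of what a handler with partial batch responses could look like. It uses the StreamsEventResponse type from the Amazon.Lambda.DynamoDBEvents package. The BatchAwareFunction class and the ProcessRecord method are hypothetical placeholders of mine, and the sketch assumes that ReportBatchItemFailures has been enabled on the event source mapping. Without that setting, Lambda ignores the returned value.

```csharp
using System;
using System.Collections.Generic;
using Amazon.Lambda.Core;
using Amazon.Lambda.DynamoDBEvents;

// Hypothetical handler for illustration; assumes ReportBatchItemFailures is enabled on the trigger.
public class BatchAwareFunction
{
    public StreamsEventResponse FunctionHandler(DynamoDBEvent ddbEvent, ILambdaContext context)
    {
        var failures = new List<StreamsEventResponse.BatchItemFailure>();

        foreach (var record in ddbEvent.Records)
        {
            try
            {
                ProcessRecord(record, context);
            }
            catch (Exception ex)
            {
                context.Logger.LogError($"Failed on sequence {record.Dynamodb.SequenceNumber}: {ex.Message}");

                // Report the first failed record and stop. Stream records are ordered,
                // so Lambda retries from this sequence number onward.
                failures.Add(new StreamsEventResponse.BatchItemFailure
                {
                    ItemIdentifier = record.Dynamodb.SequenceNumber
                });
                break;
            }
        }

        // An empty failure list tells Lambda that the whole batch succeeded.
        return new StreamsEventResponse { BatchItemFailures = failures };
    }

    // Placeholder for real per-record work (sending a notification, updating a cache, and so on).
    private static void ProcessRecord(DynamoDBEvent.DynamodbStreamRecord record, ILambdaContext context)
    {
        context.Logger.LogInformation($"Event Type : {record.EventName}");
    }
}
```

I stop at the first failure instead of collecting every failed record because Lambda checkpoints a stream at the lowest reported sequence number anyway, so everything after it is delivered again.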

Proper tuning of batch size and error handling strategies is essential for optimizing DynamoDB Streams processing in Lambda.

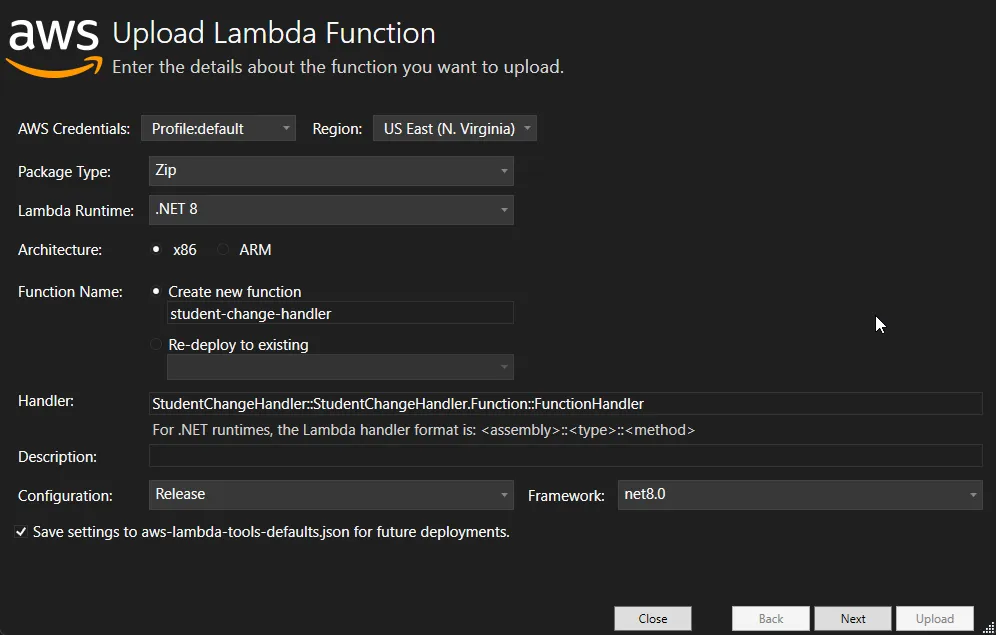

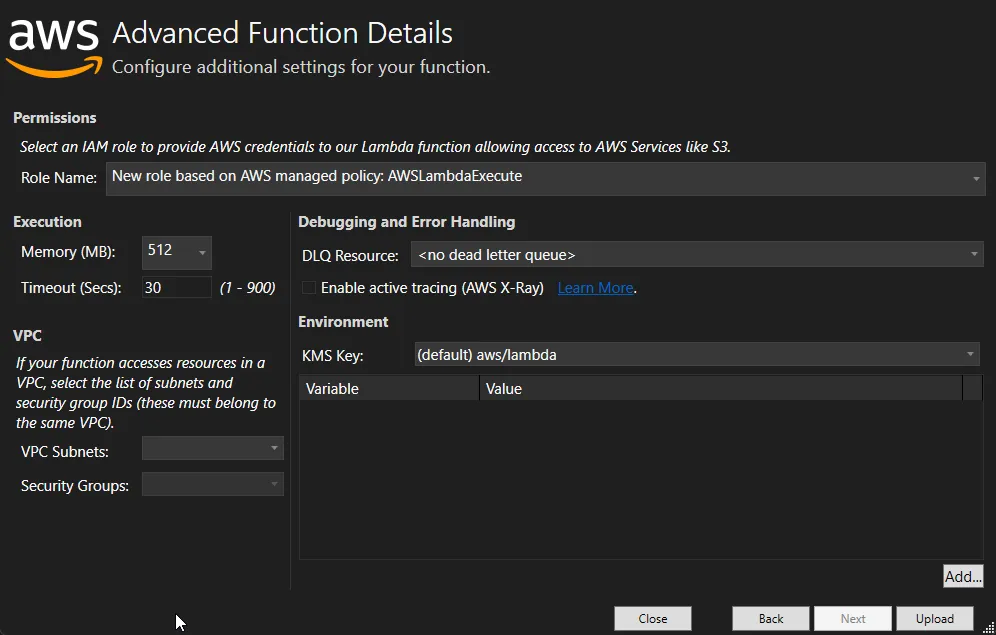

Let’s upload this Lambda. In Visual Studio, right-click the Lambda project and click on Publish to AWS Lambda.

I have selected BasicExecution as the Lambda role. Later on we will have to give it DDB permissions so that our Lambda can be triggered as expected. The reason is that DynamoDB Streams does not automatically send data to your Lambda function. Instead, the Lambda service polls the stream for changes, using the function’s execution role. DynamoDB itself doesn’t need extra permissions to work with Lambda. It only exposes the stream, and it’s up to Lambda (or other consumers) to pull data from it.

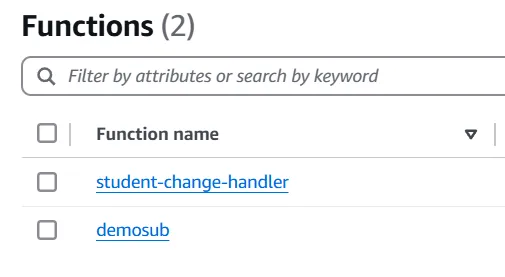

As you can see, our Change Handler Lambda is now uploaded.

Next, we will have to enable DDB Streams on our students table, and add a trigger for the Lambda to be executed whenever there is a change in the data.

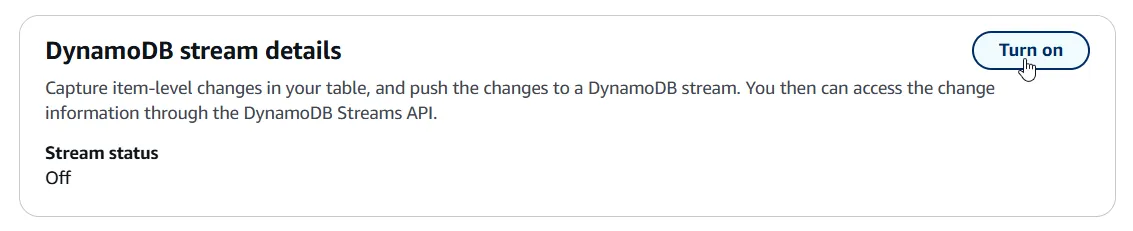

Enabling Amazon DDB Streams

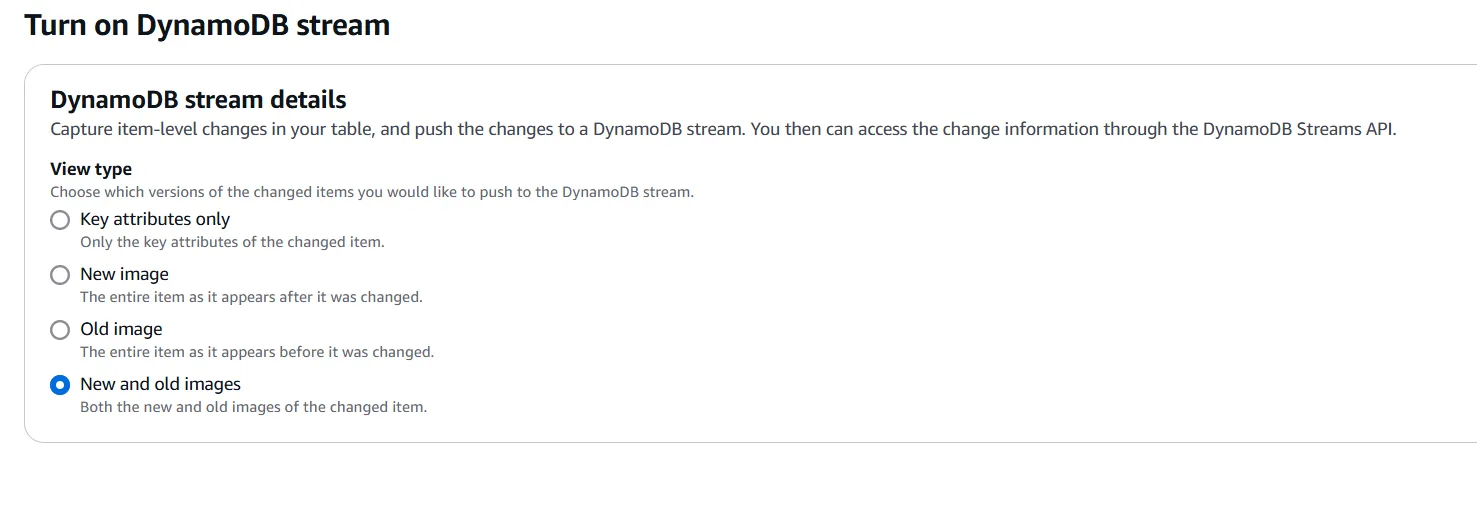

Let’s enable DDB Streams now. Navigate to the AWS Management Console and go to Amazon DynamoDB. Click on the “students” table, and switch to the “Exports and Streams” tab. Click on “Turn On”.

As mentioned earlier, here you can select the type of stream record you want your table to emit. For now, we will include both the new and old image of the changed item.

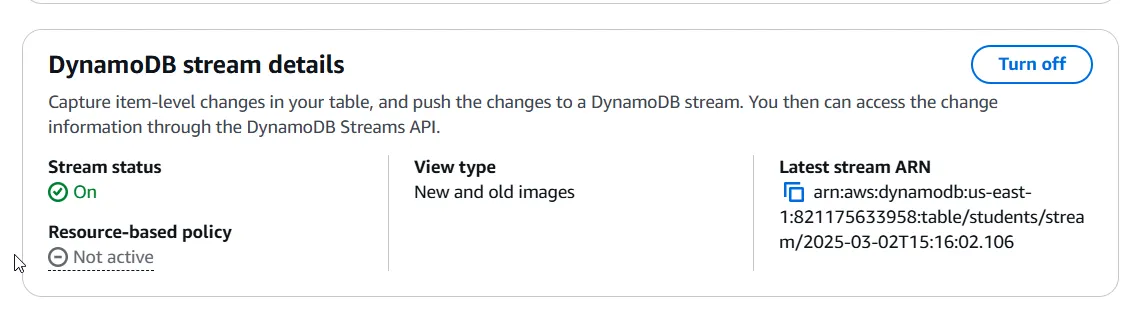

With that done, click on “Turn on stream”.
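If you prefer to script this step instead of using the console, the stream can also be turned on through the AWS SDK for .NET. The following is a small sketch rather than a hardened script. It assumes the AWSSDK.DynamoDBv2 package, default AWS credentials and region, and the “students” table from this article with no stream enabled yet.

```csharp
using System;
using Amazon.DynamoDBv2;
using Amazon.DynamoDBv2.Model;

using var client = new AmazonDynamoDBClient();

// Enable a stream that carries both the old and the new image of each changed item.
var response = await client.UpdateTableAsync(new UpdateTableRequest
{
    TableName = "students",
    StreamSpecification = new StreamSpecification
    {
        StreamEnabled = true,
        StreamViewType = StreamViewType.NEW_AND_OLD_IMAGES
    }
});

// The stream ARN is what a Lambda trigger (event source mapping) attaches to.
Console.WriteLine($"Stream ARN: {response.TableDescription.LatestStreamArn}");
```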

Create Lambda Trigger

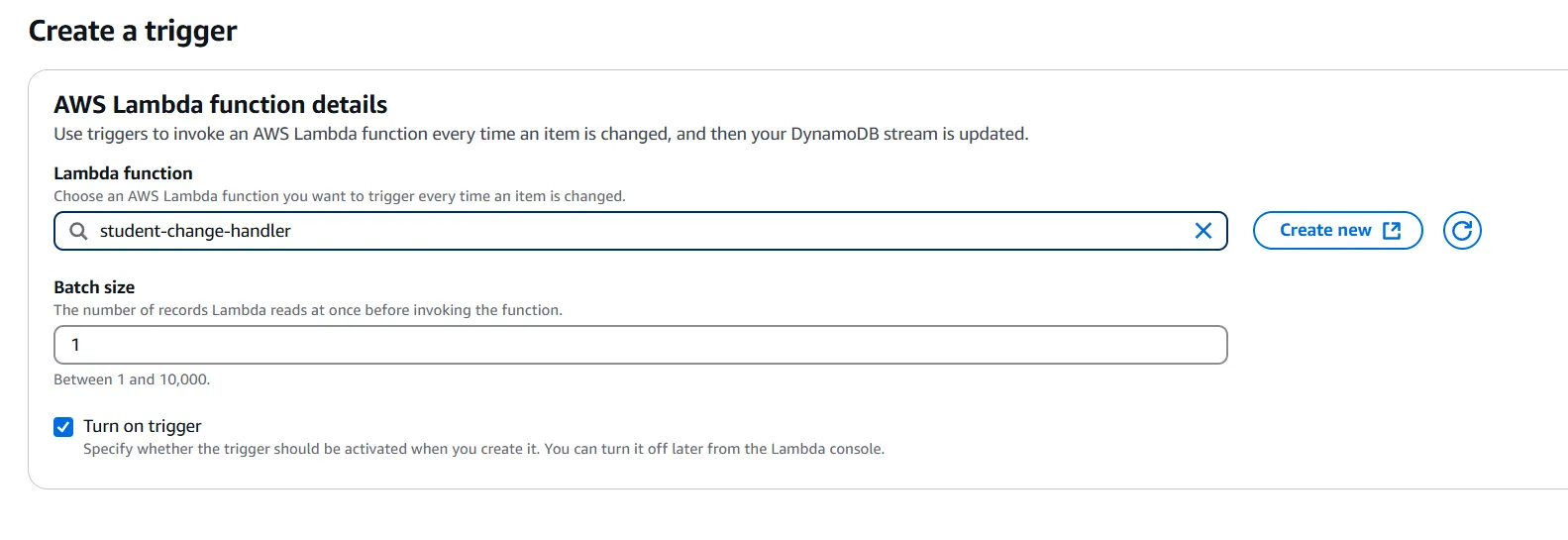

Next we need to add a trigger to invoke the AWS Lambda Function whenever an item is changed. Under the Trigger section in the same screen, click on “Create Trigger”.

Here, select the uploaded Lambda, which in our case is “student-change-handler”. You can also specify the batch size, which is the maximum number of stream records passed to the Lambda in a single invocation. With the value of 1 used here, the Lambda is invoked once for every record that is created, modified, or deleted. For busier tables it is often better to set this to something higher, so that you have more efficient Lambda calls.

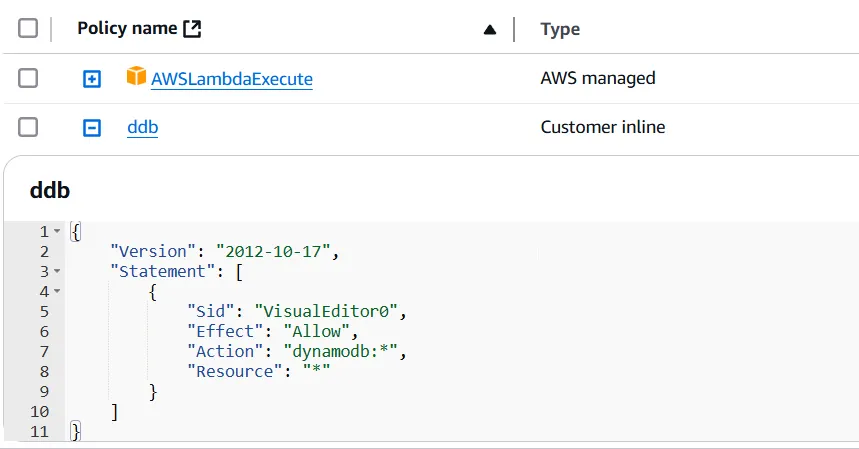

You will probably hit a permissions issue while creating this Lambda trigger. To fix this, open the Lambda function, go to the Configuration tab, and under Permissions click on the associated role. You have to attach the required DDB permissions to this role.

For demonstration purposes I have added all the permissions related to DDB to this role. In practice you need only the permissions named in the error you got while creating the trigger. To read from a stream, Lambda needs dynamodb:DescribeStream, dynamodb:GetRecords, dynamodb:GetShardIterator, and dynamodb:ListStreams, which the AWS managed policy AWSLambdaDynamoDBExecutionRole provides.

Now go back, and try creating the Lambda Trigger once again. This time around you will be able to create the integration as expected.
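For completeness, the trigger that the console creates is an event source mapping, and it can also be created from code. This is only a sketch, assuming the AWSSDK.Lambda package, default AWS credentials, the “student-change-handler” function name from this article, and the stream ARN passed as the first command-line argument. The batch size of 10 is an arbitrary example value.

```csharp
using System;
using Amazon.Lambda;
using Amazon.Lambda.Model;

// Assumption: the stream ARN is supplied as the first command-line argument.
var streamArn = args.Length > 0
    ? args[0]
    : throw new ArgumentException("Pass the DynamoDB stream ARN as the first argument.");

using var lambda = new AmazonLambdaClient();

var mapping = await lambda.CreateEventSourceMappingAsync(new CreateEventSourceMappingRequest
{
    EventSourceArn = streamArn,
    FunctionName = "student-change-handler",
    // LATEST delivers only the changes made after the mapping is created.
    StartingPosition = EventSourcePosition.LATEST,
    // Example value: up to 10 stream records per invocation.
    BatchSize = 10
});

Console.WriteLine($"Created event source mapping {mapping.UUID} in state {mapping.State}");
```

The same permissions requirement applies here: the call is rejected if the function’s execution role is not allowed to read from the stream.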

That’s everything you have to do! Let’s test our integration.

Testing

I will build and run my WebAPI. Once that’s up, open up Postman.

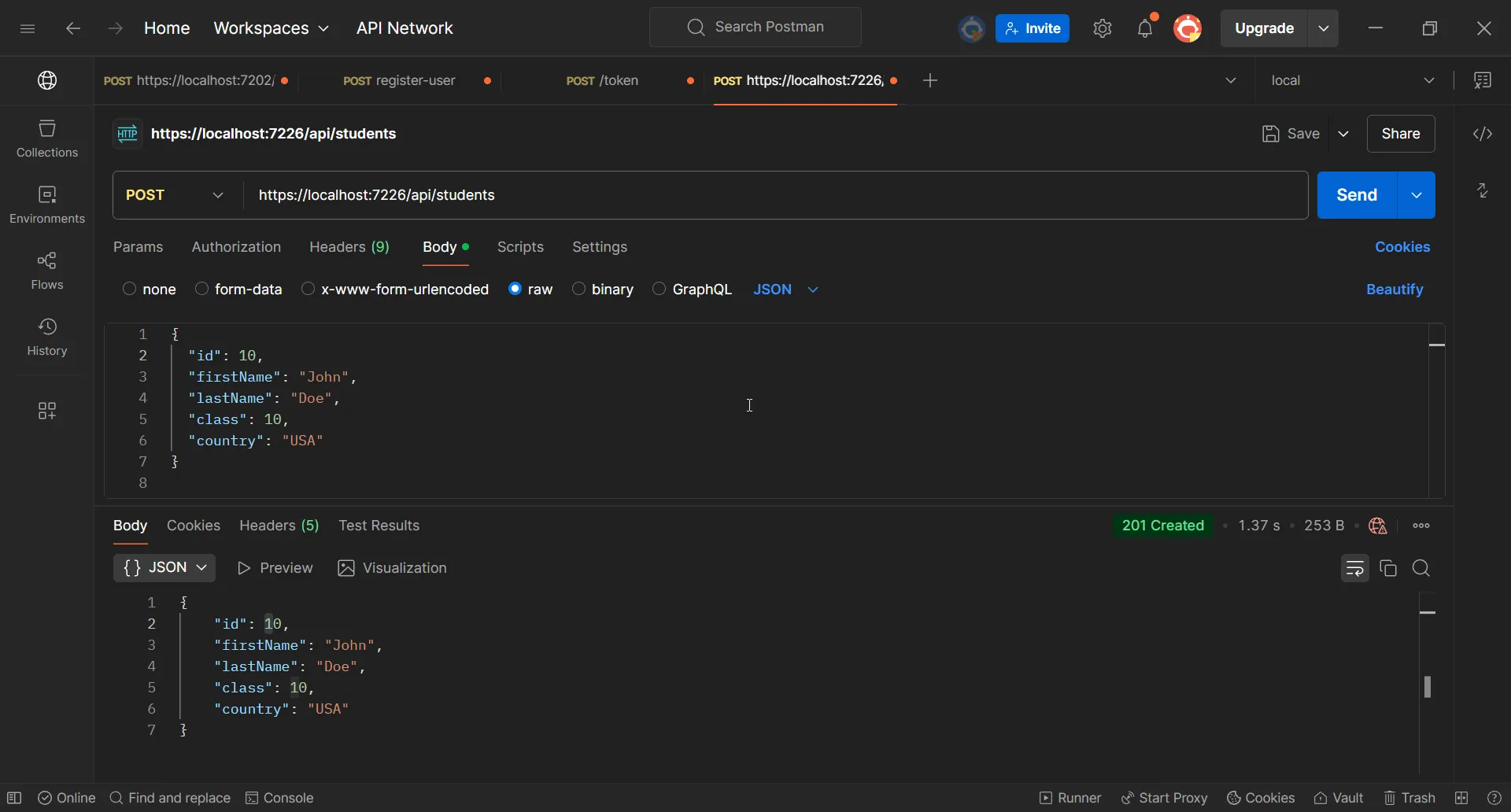

Here is the sample request that I will send to the /students API endpoint.

{ "id": 10, "firstName": "John", "lastName": "Doe", "class": 10, "country": "USA"}

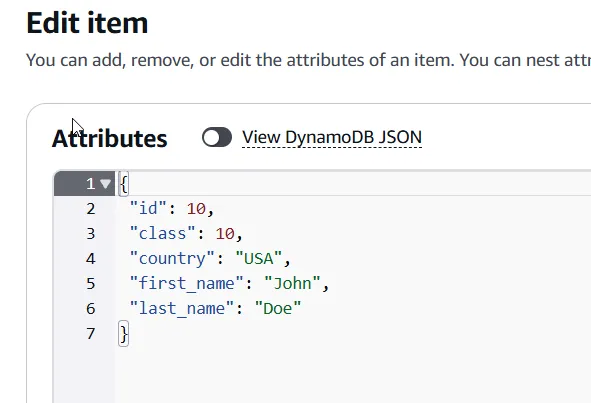

As you can see, we get back a 201 Created status code, which means that a new student record has been created in the students table.

If our setup worked, this INSERT operation would have triggered the AWS Lambda Function too, which would in turn write the student details onto CloudWatch Logs.

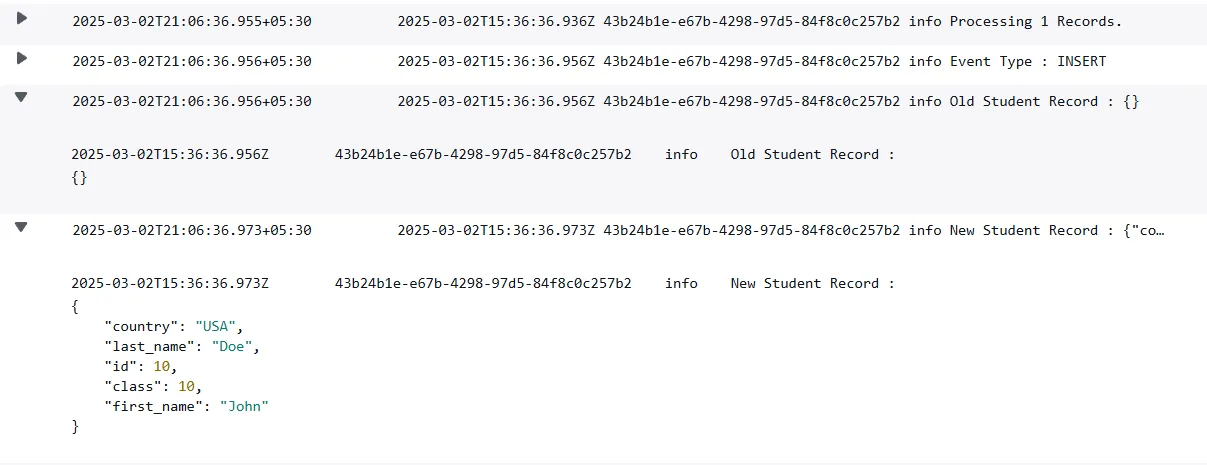

Let’s navigate to the AWS Lambda, the Monitor Tab, and click on “View CloudWatch Logs”.

And there you go! The Event Type is printed as INSERT, followed by the old and new student records. Since this is an INSERT, only the new record carries data, as there is no previous version of the item.

Feel free to test the Update and Delete scenarios and see how our Lambda reacts to them.

Wrapping Up

In this demonstration, we successfully built a DynamoDB-backed CRUD Web API using ASP.NET Core Minimal APIs and integrated AWS Lambda with DynamoDB Streams to enable real-time event processing.

By following these steps, we achieved:

✅ A fully functional CRUD API using DynamoDB.

✅ An event-driven architecture with Lambda triggering on data changes.

✅ Real-time logging of changes in CloudWatch Logs.

You can find the complete source code of this implementation at the bottom of this article.

This setup can be further extended to send notifications, update caches, trigger workflows, or even sync data across services. Stay tuned for more advanced AWS and .NET tutorials! 🚀