Batch operations in Amazon DynamoDB allow developers to efficiently perform multiple read, write, and delete actions in a single request, improving performance by cutting down on network round trips. In this article, we’ll explore how to implement batch operations using DynamoDBContext in the AWS .NET SDK. By building a simple .NET web API, you’ll gain hands-on experience working with DynamoDB batch operations in a practical context.

We’ll set up a .NET project, configure Amazon DynamoDB, and integrate the AWS SDK for .NET. From there, we’ll implement batch reads to retrieve multiple items, batch writes to insert data efficiently, and batch deletes to remove records in bulk (DynamoDB’s batch write API supports puts and deletes, but not partial updates). Along the way, we’ll cover best practices to help you build robust and scalable solutions. Whether you’re new to Amazon DynamoDB or looking to deepen your knowledge, this guide has everything you need to get started!

The core problem batching solves is the latency of issuing many separate database calls: grouping operations into fewer round trips improves overall system performance.

This article is sponsored by AWS. Huge thanks for helping me produce more .NET on AWS content!

Prerequisites

- .NET 8 SDK installed

- An AWS account (a Free Tier account is enough)

- Visual Studio IDE

- A development machine authenticated to work with AWS resources - Here is how I configured my machine via an AWS CLI profile to stay authenticated to my AWS account.

- Familiarity with Amazon DynamoDB - Read this article

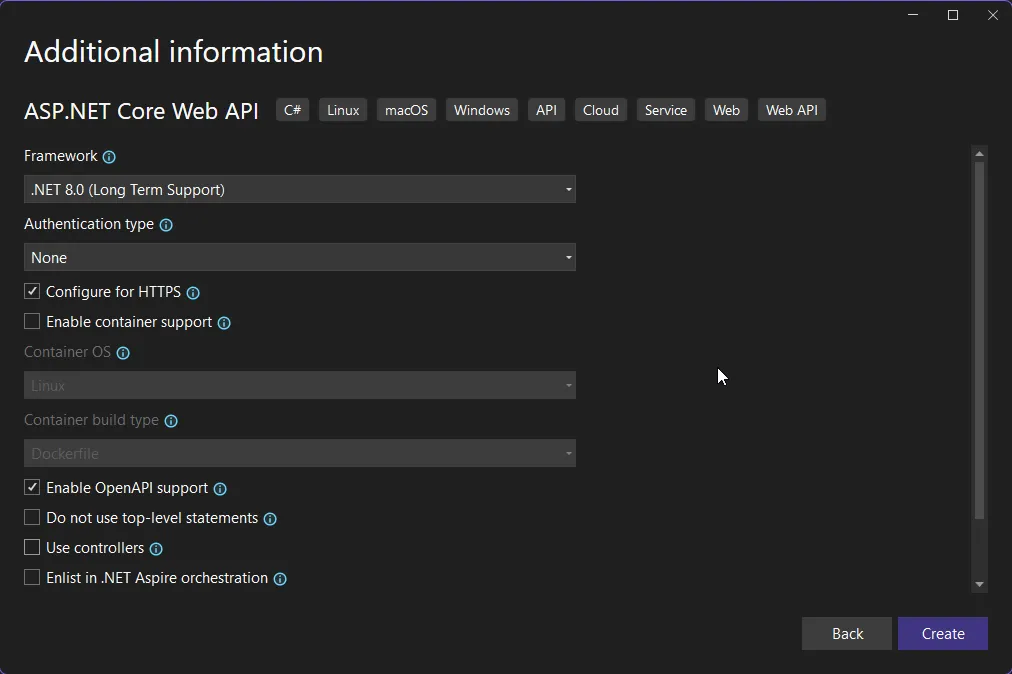

Setting up the .NET WebAPI Solution

Open Visual Studio and create a new ASP.NET Core 8 Web API project.

First up, let’s install the required NuGet packages.

```powershell
Install-Package AWSSDK.Core
Install-Package AWSSDK.DynamoDBv2
Install-Package AWSSDK.Extensions.NETCore.Setup
```

Once the packages are installed, let’s register the AWS services in our application’s DI container. Open Program.cs and add the following.

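```csharp
// Add these using directives at the top of Program.cs.
using Amazon.DynamoDBv2;
using Amazon.DynamoDBv2.DataModel;

// Low-level client; credentials and region come from the default AWS configuration (e.g. your CLI profile).
builder.Services.AddAWSService<IAmazonDynamoDB>();
// High-level object persistence context, which is built on top of IAmazonDynamoDB.
builder.Services.AddScoped<IDynamoDBContext, DynamoDBContext>();
```

This registers both IAmazonDynamoDB and IDynamoDBContext.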

Next, create a new folder called Models and add the following classes.

```csharp
using Amazon.DynamoDBv2.DataModel;

// Maps to the "products" table; "id" is the partition (hash) key.
[DynamoDBTable("products")]
public class Product
{
    [DynamoDBHashKey("id")]
    public Guid Id { get; set; }

    [DynamoDBProperty("name")]
    public string Name { get; set; } = default!;

    [DynamoDBProperty("description")]
    public string Description { get; set; } = default!;

    [DynamoDBProperty("price")]
    public decimal Price { get; set; }

    // Parameterless constructor, used by DynamoDBContext when it materializes items.
    public Product()
    {
    }

    public Product(string name, string description, decimal price)
    {
        Id = Guid.NewGuid();
        Name = name;
        Description = description;
        Price = price;
    }
}

// Maps to the "audits" table; "id" is the partition key and "product_id" the sort (range) key.
[DynamoDBTable("audits")]
public class Audit
{
    [DynamoDBHashKey("id")]
    public Guid Id { get; set; }

    [DynamoDBRangeKey("product_id")]
    public Guid ProductId { get; set; }

    [DynamoDBProperty("action")]
    public string Action { get; set; } = default!;

    [DynamoDBProperty("time_stamp")]
    public DateTime TimeStamp { get; set; }

    public Audit()
    {
    }

    public Audit(Guid productId, string action)
    {
        Id = Guid.NewGuid();
        ProductId = productId;
        Action = action;
        TimeStamp = DateTime.UtcNow;
    }
}

// Request DTO used by the POST endpoints; its shape is inferred from how they use it.
public record CreateProductDto(string Name, string Description, decimal Price);
```

Our domain classes are simple. The Product class holds an Id, Name, Description, and Price. The Audit class maps to an audit table for products and stores the related ProductId, the Action performed, and a TimeStamp.

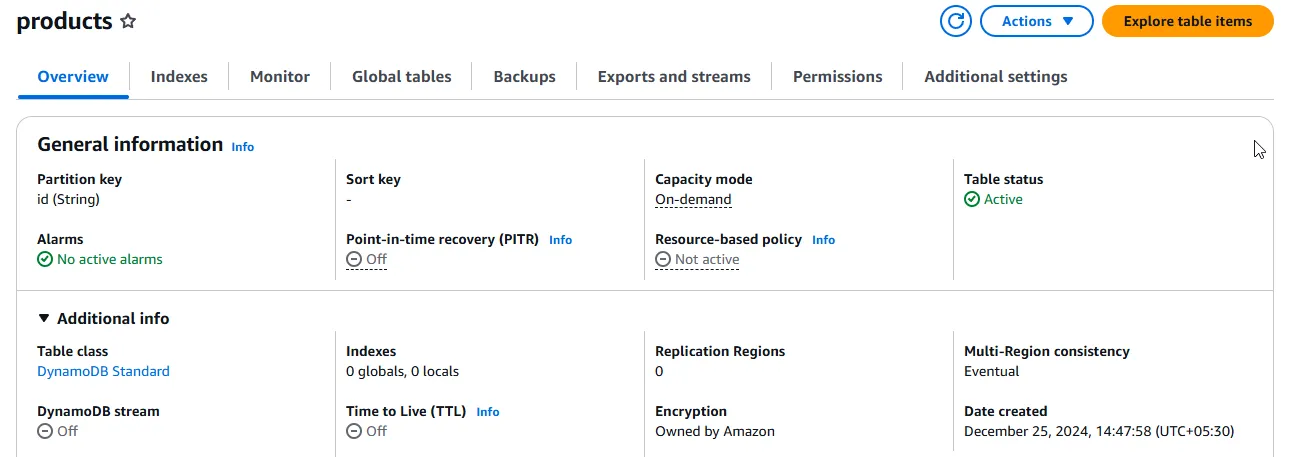

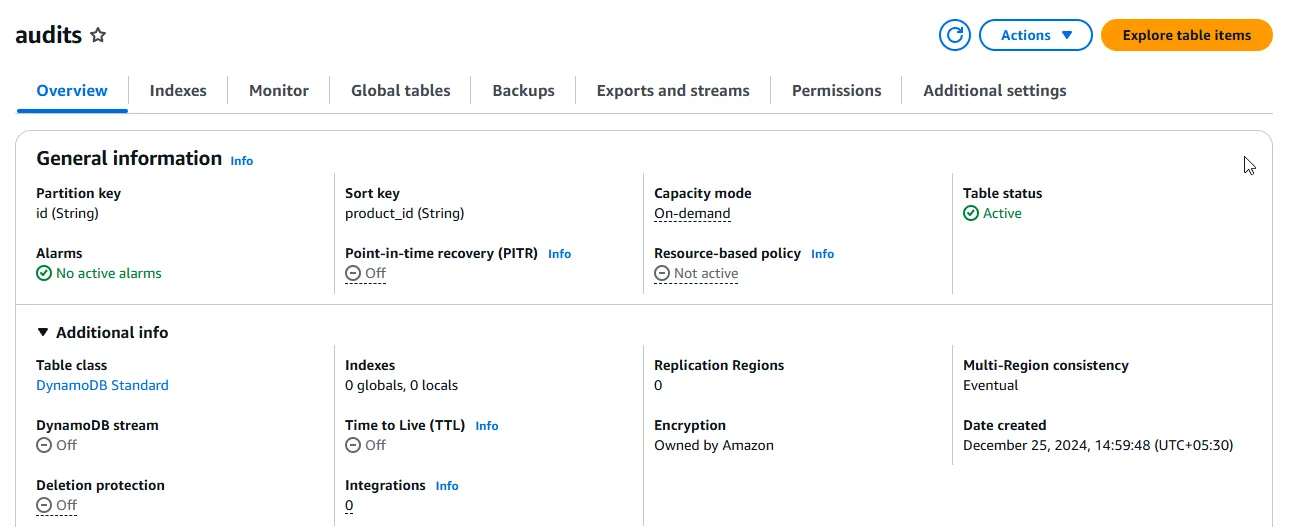

Navigate to the AWS Management Console and create the following two tables.

For the products table, the partition key is id (String).

For the audits table, the partition key is id (String) and the sort key is product_id (String).

So, now we have everything in place. Let’s build some Minimal API endpoints to demonstrate batch operations in DynamoDB with .NET using the AWS SDK.

DynamoDB Batch Write Operations

Batch write operations in DynamoDB are used to perform multiple PutItem or DeleteItem actions in a single request. This approach is efficient for bulk data modifications, such as importing large datasets or cleaning up unused records. Each BatchWriteItem request can handle up to 25 items or 16 MB of data, whichever comes first.

Batch writes are ideal when you need to insert or delete multiple items quickly while minimizing network overhead. However, they are not transactional, meaning partial failures can occur, leaving some items unprocessed. In such cases, unprocessed items are returned, and you can retry them. This is something very important, and I will demonstrate this in the last section of this article!

Use batch writes when:

- Importing bulk data into a table.

- Cleaning up data by deleting multiple items.

- Performing high-throughput write operations.

When designing for batch writes, ensure your requests distribute evenly across partition keys to avoid throttling and maintain performance. Incorporating retries with exponential backoff helps handle transient failures effectively.
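To make exponential backoff concrete, here is a minimal sketch of one common variant, often called "full jitter": the wait before retry n is a random duration between zero and an exponentially growing ceiling, capped at a maximum. BackoffDelay is a hypothetical helper, and the 100 ms base and 5 s cap are illustrative assumptions, not values prescribed by DynamoDB or the AWS SDK.

```csharp
using System;

// Hypothetical helper. Full-jitter exponential backoff: the wait before retry `attempt`
// is a random duration in [0, min(capMs, baseMs * 2^attempt)).
// The 100 ms base and 5 s cap are illustrative assumptions, not SDK defaults.
TimeSpan BackoffDelay(int attempt, Random random, int baseMs = 100, int capMs = 5_000)
{
    // Clamp the exponent; the cap dominates long before 2^30 anyway.
    double ceiling = Math.Min(capMs, baseMs * Math.Pow(2, Math.Min(attempt, 30)));
    return TimeSpan.FromMilliseconds(random.NextDouble() * ceiling);
}

var rng = new Random(42); // fixed seed only to make the demo repeatable
for (var n = 0; n < 6; n++)
{
    var wait = BackoffDelay(n, rng);
    Console.WriteLine($"retry {n}: wait {wait.TotalMilliseconds:F0} ms");
    // In real code: await Task.Delay(wait); then resend the unprocessed items.
}
```

Randomizing the wait spreads out retries from many concurrent clients so they do not all hit the same partition at the same moment. The fail-safe example at the end of this article uses plain doubling, which is simpler but lets concurrent clients retry in lockstep.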

Here is a minimal endpoint that writes multiple products to the database as a batch.

```csharp
app.MapPost("/products/batch-write", async (List<CreateProductDto> productDtos, IDynamoDBContext context) =>
{
    // Map each incoming DTO to a Product (the constructor assigns a new Guid Id).
    var products = new List<Product>();
    foreach (var product in productDtos)
    {
        products.Add(new Product(product.Name, product.Description, product.Price));
    }

    // Queue a put request for every product, then send the batch.
    var batchWrite = context.CreateBatchWrite<Product>();
    batchWrite.AddPutItems(products);
    await batchWrite.ExecuteAsync();
});
```

This POST endpoint accepts a list of CreateProductDto objects, and IDynamoDBContext is injected into it. First, we map the CreateProductDto list to a list of Product objects. Then we create a BatchWrite instance, add the products to it as put items, and execute it.

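Note that the underlying BatchWriteItem API has a hard limit of 25 put or delete requests per call. The high-level BatchWrite returned by DynamoDBContext splits larger inputs into multiple calls for you, but if you build BatchWriteItemRequest objects yourself with the low-level IAmazonDynamoDB client (as in the last section of this article), you have to split the incoming list into batches of 25 and send each batch in a loop.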
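A minimal sketch of the splitting step, for cases where you build batch requests yourself: SplitIntoWriteBatches is a hypothetical helper that uses Enumerable.Chunk (available from .NET 6) to cut a list into batches of at most 25 items while preserving order. Each batch would then be sent as its own batch write request.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// BatchWriteItem accepts at most 25 put/delete requests per call.
const int MaxBatchWriteItems = 25;

// Hypothetical helper: splits a sequence into consecutive batches of at most 25 items,
// preserving order. Enumerable.Chunk requires .NET 6 or later.
List<T[]> SplitIntoWriteBatches<T>(IEnumerable<T> items) =>
    items.Chunk(MaxBatchWriteItems).ToList();

var demoItems = Enumerable.Range(1, 60).ToList();
foreach (var batch in SplitIntoWriteBatches(demoItems))
{
    // In the endpoint, each batch would get its own write request and ExecuteAsync/BatchWriteItemAsync call.
    Console.WriteLine($"batch of {batch.Length}: items {batch[0]}..{batch[^1]}");
}
```

For 60 items this yields batches of 25, 25 and 10.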

Writing to Multiple DynamoDB Tables in a Batch

In some cases, you might need to write to multiple tables in one batch.

Consider this scenario: every time you create a new product, you also want to create a new entry in the audits table. Here is how you can handle this requirement efficiently.

```csharp
app.MapPost("/products/batch-write-with-audits", async (List<CreateProductDto> productDtos, IDynamoDBContext context) =>
{
    var products = new List<Product>();
    foreach (var product in productDtos)
    {
        products.Add(new Product(product.Name, product.Description, product.Price));
    }
    var batchProductWrite = context.CreateBatchWrite<Product>();
    batchProductWrite.AddPutItems(products);

    // One "create" audit entry per product, linked through the product's Id.
    var audits = new List<Audit>();
    foreach (var product in products)
    {
        audits.Add(new Audit(product.Id, "create"));
    }
    var batchAuditWrite = context.CreateBatchWrite<Audit>();
    batchAuditWrite.AddPutItems(audits);

    // Merge both batches into a single multi-table batch write.
    var batchWrites = batchProductWrite.Combine(batchAuditWrite);

    await batchWrites.ExecuteAsync();
});
```

As before, we create separate BatchWrite instances for products and audits. Once both batches are populated, the Combine method merges them into a multi-table batch write that is executed as one unit.

This way, the writes for both tables travel together in the same batch request(s) instead of separate calls per table, reducing the number of network round trips. In the above example, every product is saved alongside an audit entry that records its creation.

Keep in mind that a batch write is not a transaction. Each put succeeds or fails on its own, so a throttled or failed batch can leave a product without its audit entry (or the reverse). If you need all-or-nothing writes across tables, use DynamoDB transactions (the TransactWriteItems API) instead.

This approach is particularly useful for applications that need to log user actions or write related records to multiple tables.
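If you assemble multi-table batches yourself with IAmazonDynamoDB, keep in mind that the 25-request limit of BatchWriteItem applies to the whole call across all tables, not to each table separately. The sketch below uses a hypothetical PackMultiTableBatches helper to group (table, item) pairs into table-keyed dictionaries of at most 25 entries in total. It mirrors the shape of BatchWriteItemRequest.RequestItems but uses plain values instead of WriteRequest objects, so it runs without the AWS SDK.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper: packs (table, item) pairs into batches whose TOTAL size across
// all tables is at most 25, the per-call limit of BatchWriteItem. Each batch maps
// table name -> items, the same shape as BatchWriteItemRequest.RequestItems
// (plain values stand in for WriteRequest objects here).
List<Dictionary<string, List<TItem>>> PackMultiTableBatches<TItem>(
    IEnumerable<(string Table, TItem Item)> writes, int maxPerBatch = 25)
{
    var result = new List<Dictionary<string, List<TItem>>>();
    foreach (var chunk in writes.Chunk(maxPerBatch))
    {
        // Group this chunk's writes by table name.
        result.Add(chunk
            .GroupBy(w => w.Table)
            .ToDictionary(g => g.Key, g => g.Select(w => w.Item).ToList()));
    }
    return result;
}

// One product plus one audit entry per product: 20 products => 40 writes.
var productAndAuditWrites = Enumerable.Range(1, 20)
    .SelectMany(i => new[] { ("products", $"product-{i}"), ("audits", $"audit-{i}") });

foreach (var batch in PackMultiTableBatches(productAndAuditWrites))
{
    Console.WriteLine(string.Join(", ", batch.Select(kv => $"{kv.Key}: {kv.Value.Count}")));
}
```

With one audit entry per product, 20 products already produce 40 writes, which cannot fit into a single call.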

DynamoDB Batch Read Operations

Batch read operations in DynamoDB enable fetching multiple items across one or more tables in a single request using the BatchGetItem API. This method is efficient for retrieving small to moderate amounts of data without making multiple network calls. Each request can handle up to 100 items or 16 MB of data, whichever is smaller.

Batch reads are ideal when you need to fetch related records, such as retrieving user profiles, product details, or audit logs for a set of IDs. However, BatchGetItem can only look items up by their full primary key (there are no filter or condition expressions), and its reads are eventually consistent by default; you can request strongly consistent reads by setting ConsistentRead.

Use batch reads when:

- Fetching multiple items based on a list of keys.

- Minimizing latency for read-heavy applications.

- Reducing the number of API calls for bulk data retrieval.

When designing for batch reads, ensure the requested keys are evenly distributed across partitions to avoid throttling. If some items are not returned due to capacity limitations, they will appear in the UnprocessedKeys response. Implement retry logic to re-fetch these items.

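Batch read operations are not transactional, so ensure your application can handle partial results gracefully. If you need strongly consistent results, enable ConsistentRead on the batch or use GetItem or Query with consistent reads; for an all-or-nothing read across several items, TransactGetItems is the transactional alternative.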
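Two practical details are easy to miss when feeding client-supplied IDs into a batch read: the BatchGetItem API accepts at most 100 keys per call, and it rejects a request that lists the same key twice with a validation error. The sketch below (PrepareBatchGetKeys is a hypothetical helper) removes duplicates and then splits the keys into groups of at most 100, one group per BatchGetItem call.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// BatchGetItem accepts at most 100 keys per call and rejects requests containing duplicate keys.
const int MaxBatchGetKeys = 100;

// Hypothetical helper: drops duplicate IDs, then splits the rest into groups of at most 100.
List<Guid[]> PrepareBatchGetKeys(IEnumerable<Guid> requestedIds) =>
    requestedIds.Distinct().Chunk(MaxBatchGetKeys).ToList();

var requested = Enumerable.Range(0, 250).Select(_ => Guid.NewGuid()).ToList();
requested.AddRange(requested.Take(10).ToList()); // simulate a client sending 10 duplicate IDs

foreach (var keys in PrepareBatchGetKeys(requested))
{
    // Each group would become one batch read (AddKey per id) and one BatchGetItem call.
    Console.WriteLine($"BatchGetItem call with {keys.Length} keys");
}
```

Here 260 incoming IDs collapse to 250 unique keys, sent as groups of 100, 100 and 50.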

```csharp
// [FromBody] comes from Microsoft.AspNetCore.Mvc; add that using directive to Program.cs.
app.MapPost("/products/batch-get", async (IDynamoDBContext context, [FromBody] List<Guid> productIds) =>
{
    var batchGet = context.CreateBatchGet<Product>();
    foreach (var id in productIds)
    {
        // Product has only a partition key, so the Id alone identifies each item.
        batchGet.AddKey(id);
    }

    // Sends the request and fills batchGet.Results with the items that were found.
    await batchGet.ExecuteAsync();
    return Results.Ok(batchGet.Results);
});
```

The /products/batch-get endpoint in this example enables batch retrieval of multiple product records from DynamoDB. It accepts a list of product IDs in the request body as Guid values, representing the unique identifiers for the items in the DynamoDB table. By leveraging the CreateBatchGet method provided by the AWS SDK, the application efficiently fetches all requested items in a single operation.

The logic starts by creating a BatchGet instance for the Product model. Each ID from the incoming list is added as a key to the batch operation using the AddKey method. Once all the keys are added, the ExecuteAsync method triggers the retrieval process. DynamoDB fetches all available items matching the provided keys through BatchGetItem, where a single call covers up to 100 keys, which minimizes network overhead and improves performance compared to individual GetItem calls.

After execution, the batch operation populates its Results property with the retrieved products. These are then returned as part of an HTTP 200 OK response to the client. This approach is ideal for retrieving multiple records in applications where reducing API calls and improving read efficiency is critical.
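Keep in mind that, according to the BatchGetItem API documentation, items come back in no particular order, and keys with no matching item are simply left out of the response. If callers expect results in request order, or need to know which IDs were not found, a small post-processing step helps. The sketch below uses a hypothetical AlignWithRequest helper and a (Guid Id, string Name) tuple as a stand-in for Product, so it runs without the AWS SDK.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper: puts batch-get results back into the order the IDs were requested
// and reports which IDs had no matching item (BatchGetItem silently omits those).
(List<T> Ordered, List<Guid> Missing) AlignWithRequest<T>(
    IReadOnlyList<Guid> requestedIds, IEnumerable<T> results, Func<T, Guid> getId)
{
    var byId = results.ToDictionary(getId);
    var ordered = new List<T>();
    var missing = new List<Guid>();
    foreach (var id in requestedIds)
    {
        if (byId.TryGetValue(id, out var item)) ordered.Add(item);
        else missing.Add(id);
    }
    return (ordered, missing);
}

// (Guid Id, string Name) stands in for Product so the sketch runs without the AWS SDK.
var keyboardId = Guid.NewGuid();
var mouseId = Guid.NewGuid();
var unknownId = Guid.NewGuid();

// Pretend DynamoDB returned the mouse first and had no item for unknownId.
var returned = new List<(Guid Id, string Name)> { (mouseId, "Mouse"), (keyboardId, "Keyboard") };
var (inOrder, notFound) = AlignWithRequest(new[] { keyboardId, mouseId, unknownId }, returned, p => p.Id);

Console.WriteLine(string.Join(", ", inOrder.Select(p => p.Name))); // Keyboard, Mouse
Console.WriteLine($"not found: {notFound.Count}"); // not found: 1
```

In the endpoint, you could call it as AlignWithRequest(productIds, batchGet.Results, p => p.Id) and, for example, return the missing IDs alongside the products.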

DynamoDB Batch Delete Operations

DynamoDB batch delete operations allow you to remove multiple items from one or more tables in a single request using the BatchWriteItem API. This is particularly useful when cleaning up data, archiving records, or performing bulk deletions for housekeeping tasks. A single batch delete operation can handle up to 25 items or 16 MB of data, making it efficient for moderate-scale operations.

To perform a batch delete, you create a batch write and call AddDeleteKey for each item to be deleted, passing its partition key and, for tables with a composite primary key such as audits, its sort key as well. Once all items are added, the batch operation is executed, and DynamoDB processes the deletions in parallel.

Batch delete operations are ideal for scenarios like bulk cleanup of test data, removing expired or unused records, or resetting specific tables. For critical operations, ensure your application can gracefully handle partial failures by implementing retries with exponential backoff. Also, avoid overloading partitions to prevent throttling.

```csharp
app.MapPost("/products/batch-delete", async (IDynamoDBContext context, [FromBody] List<Guid> productIds) =>
{
    var batchDelete = context.CreateBatchWrite<Product>();
    foreach (var id in productIds)
    {
        // Queue a delete request identified by the product's partition key.
        batchDelete.AddDeleteKey(id);
    }

    await batchDelete.ExecuteAsync();
});
```

The /products/batch-delete endpoint demonstrates how to efficiently remove multiple items from a DynamoDB table using a batch delete operation. It accepts a list of product IDs, represented as Guid values, in the request body. These IDs correspond to the primary keys of the items to be deleted from the products table.

The operation begins by creating a BatchWrite instance for the Product model using DynamoDB’s context (IDynamoDBContext). For each Guid in the provided list, the AddDeleteKey method adds a delete request to the batch, specifying the partition key (Id) of the item to be removed. Once all keys are added, the ExecuteAsync method triggers the execution of the batch delete operation.

This groups multiple delete actions into batch API calls (up to 25 deletes per call) instead of issuing one DeleteItem request per product, significantly reducing the number of network requests and improving performance for bulk operations.

Fail Safe DynamoDB Batch Operations

While DynamoDB batch operations are highly efficient for bulk reads, writes, and deletes, they are not immune to transient errors or capacity-related throttling. These issues may arise from exceeding table or partition throughput or from temporary service-side problems; malformed requests, by contrast, are rejected with a validation error, and retrying them will not help. To ensure your batch operations are fail-safe, it is crucial to implement strategies that handle such scenarios gracefully.

DynamoDB provides mechanisms like the UnprocessedItems response for batch write operations and UnprocessedKeys for batch reads. These indicate items that were not processed due to temporary limitations. You can implement retry logic with exponential backoff to reattempt these unprocessed items. This minimizes the risk of overloading DynamoDB while ensuring all items are eventually processed.

```csharp
// Requires: using System.Globalization; using Amazon.DynamoDBv2; using Amazon.DynamoDBv2.Model;
app.MapPost("/products/fail-safe-batch-write", async (List<CreateProductDto> productDtos, IAmazonDynamoDB client) =>
{
    var products = new List<Product>();
    foreach (var product in productDtos)
    {
        products.Add(new Product(product.Name, product.Description, product.Price));
    }

    const int maxRetries = 5;

    async Task RetryBatchWriteAsync(Dictionary<string, List<WriteRequest>> unprocessedItems)
    {
        var delay = 200; // initial delay of 200 ms
        var retryCount = 0;
        while (retryCount < maxRetries && unprocessedItems.Count > 0)
        {
            // Apply exponential backoff before resending: 200 ms, 400 ms, 800 ms, ...
            await Task.Delay(delay);
            delay *= 2;
            retryCount++;

            var retryResponse = await client.BatchWriteItemAsync(
                new BatchWriteItemRequest { RequestItems = unprocessedItems });

            // Whatever DynamoDB still could not process goes into the next attempt.
            unprocessedItems = retryResponse.UnprocessedItems ?? new Dictionary<string, List<WriteRequest>>();
        }

        if (unprocessedItems.Count > 0)
        {
            throw new InvalidOperationException("Max retry attempts exceeded. Some items were not processed.");
        }
    }

    // BatchWriteItem accepts at most 25 requests per call, so send the products in chunks.
    foreach (var chunk in products.Chunk(25))
    {
        var request = new BatchWriteItemRequest
        {
            RequestItems = new Dictionary<string, List<WriteRequest>>
            {
                ["products"] = chunk.Select(p => new WriteRequest(new PutRequest(new Dictionary<string, AttributeValue>
                {
                    ["id"] = new AttributeValue { S = p.Id.ToString() },
                    ["name"] = new AttributeValue { S = p.Name },
                    ["description"] = new AttributeValue { S = p.Description },
                    // Numbers travel as strings; format invariantly so 12.5 never becomes "12,5".
                    ["price"] = new AttributeValue { N = p.Price.ToString(CultureInfo.InvariantCulture) },
                })).ToList()
            }
        };

        var response = await client.BatchWriteItemAsync(request);

        if (response.UnprocessedItems is { Count: > 0 })
        {
            // Retry unprocessed items with exponential backoff.
            await RetryBatchWriteAsync(response.UnprocessedItems);
        }
    }

    return Results.Ok("Batch write operation completed.");
});
```

This endpoint implements a fail-safe batch write in a .NET API: it inserts multiple products into a DynamoDB table with the low-level IAmazonDynamoDB client, handling unprocessed items by retrying them with exponential backoff.

A BatchWriteItemRequest is constructed for DynamoDB, which includes a list of WriteRequest objects. Each WriteRequest is a PutRequest that contains the product details (ID, name, description, and price) formatted as AttributeValue objects for DynamoDB.

The BatchWriteItemAsync method is called to perform the batch write operation. If the request is successful but some items are not processed (due to DynamoDB throughput limits or other issues), those unprocessed items will be returned.

If there are unprocessed items, the RetryBatchWriteAsync method is invoked. This method retries the failed items, applying an exponential backoff strategy: the delay between each retry increases exponentially (e.g., 200ms, 400ms, 800ms, etc.). The process continues until all items are successfully written or the maximum retry count is reached (5 retries in this case).

If all items are successfully written, a success message is returned. If some items still remain unprocessed after the retries, an exception is thrown indicating that the maximum retry attempts have been exceeded.

This approach ensures that your batch write operation is robust, handles failures, and avoids losing data due to temporary issues.

I recommend adapting this approach for most of your external API calls, so that your system is robust and tolerates transient faults.

A similar approach can be used for other DynamoDB batch operations as well!
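For example, BatchGetItem reports leftover keys in UnprocessedKeys, just as BatchWriteItem reports UnprocessedItems. As a minimal sketch that can be exercised without AWS, the hypothetical SendUntilProcessedAsync helper below accepts a delegate that sends one batch and returns whatever was left unprocessed. In a real application that delegate would wrap BatchWriteItemAsync or BatchGetItemAsync and turn the response's UnprocessedItems or UnprocessedKeys into the next batch. The FakeSend function in the demo is also hypothetical: it simply "throttles" half of the first batch.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Hypothetical helper: sends a batch, then keeps resending whatever the service reports
// as unprocessed, waiting initialDelayMs, 2x, 4x, ... between attempts.
// Throws if anything is still unprocessed after maxRetries retries.
async Task SendUntilProcessedAsync<T>(
    List<T> batch,
    Func<List<T>, Task<List<T>>> sendBatch,
    int maxRetries = 5,
    int initialDelayMs = 200)
{
    var pending = await sendBatch(batch);
    var delayMs = initialDelayMs;

    for (var retry = 0; pending.Count > 0 && retry < maxRetries; retry++)
    {
        await Task.Delay(delayMs); // back off before resending
        delayMs *= 2;
        pending = await sendBatch(pending);
    }

    if (pending.Count > 0)
    {
        throw new InvalidOperationException(
            $"{pending.Count} item(s) were still unprocessed after {maxRetries} retries.");
    }
}

// Hypothetical fake sender: the first call leaves half the batch unprocessed, later calls succeed.
var calls = 0;
Task<List<string>> FakeSend(List<string> items)
{
    calls++;
    var leftOver = calls == 1 ? items.GetRange(0, items.Count / 2) : new List<string>();
    return Task.FromResult(leftOver);
}

await SendUntilProcessedAsync(new List<string> { "a", "b", "c", "d" }, FakeSend, initialDelayMs: 10);
Console.WriteLine($"completed after {calls} calls"); // completed after 2 calls
```

Keeping the AWS call behind a delegate lets you unit test the retry and backoff behavior without a real table.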

Conclusion

In this guide, we learnt how to handle batch operations in DynamoDB using .NET. We walked through code for batch writes, reads, and deletes, and we built a robust, fail-safe batch write that retries unprocessed items with exponential backoff. The complete source code is attached to the end of this article.

Do you use Amazon DynamoDB in your project workload? What’s the most challenging situation you have faced that was very specific to your product requirement? And how did you solve it? If you found this article helpful, I’d love to hear your thoughts! Feel free to share it on your social media to spread the knowledge. Thanks!