Let’s talk about Caching! We will go through the basics of Caching, In-Memory Caching in ASP.NET Core Applications, and so on. We will build a simple .NET 8 Web API that can help demonstrate setting and getting cache entries from the in-memory cache of the application. Caching can significantly improve your API’s performance, and also help save lots of costs if implemented effectively. Let’s get started.

What is Caching?

Caching is a technique of storing frequently accessed/used data in temporary storage so that future requests for those sets of data can be served much faster to the client.

Caching involves taking the most frequently accessed and least frequently modified data and copying it to temporary storage. This allows future client requests to access this data much faster, significantly boosting application performance by reducing unnecessary and frequent requests to the original data source (which may be time-consuming, or even might involve some costs).

Here’s a simplified example of how caching works. Let’s say that when Client #1 requests certain data, it takes about 5 seconds to fetch it from the data store. As soon as the data is fetched, it is also copied to temporary storage. Now, when Client #2 requests the same data, the application serves it from the cache instead of the data store, in a fraction of that time. This reduces wait time and improves the overall efficiency of the application.

You might be wondering, what happens if the data changes? Will we still serve outdated responses? Not if the cache is managed properly. There are various strategies to refresh the cache and set expiration times to ensure the data remains up-to-date. We will discuss these techniques in detail later in the article.

It’s important to note that an application should not rely solely on cached data, and the cache should never be its source of truth. The application should use cached data only if it is available and up-to-date. If the cached data has expired or is unavailable, the application should request the data from the original source. This ensures data accuracy and reliability.

Caching in ASP.NET Core

ASP.NET Core offers excellent out-of-the-box support for various types of caching:

- In-Memory Caching: Data is cached within the server’s memory.

- Distributed Caching: Data is stored external to the application in sources like Redis cache.

- Hybrid Cache: An improvement over the Distributed Cache, introduced with .NET 9.

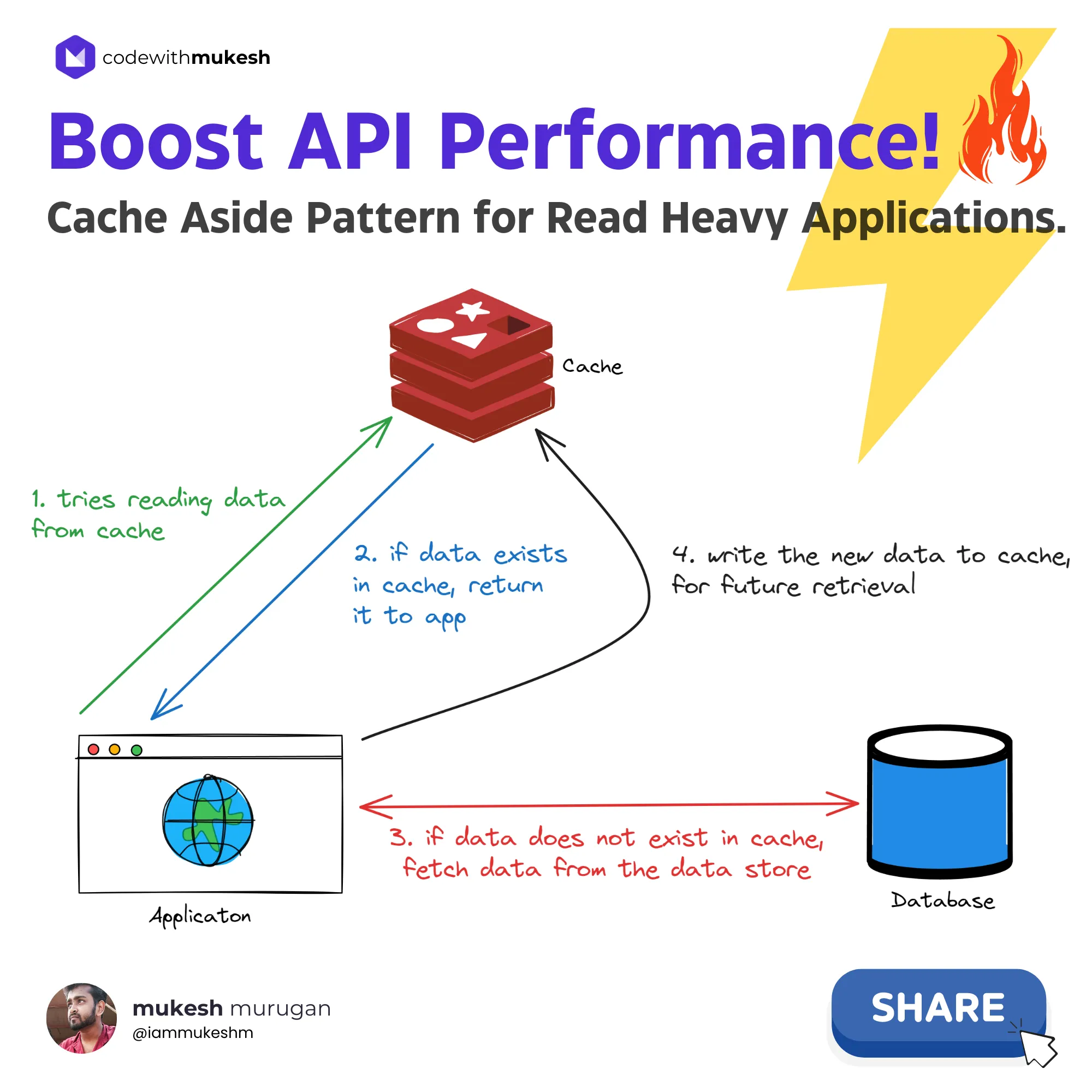

Here is a representation of how Caching Works.

Note that this specific mechanism is called the Cache Aside Pattern, which is popularly used for read-heavy applications.
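The cache-aside flow described above can be sketched in a few lines. This is a minimal, standalone illustration and not the code we build later: `LoadFromDataStoreAsync` and `GetAsync` are hypothetical helpers, the 50ms delay stands in for a slow data store, and the cache is created directly instead of being resolved through dependency injection.

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.Extensions.Caching.Memory;

// Hypothetical stand-in for a slow data store; counts how often it is queried.
var dataStoreCalls = 0;
async Task<string> LoadFromDataStoreAsync(string key)
{
    dataStoreCalls++;
    await Task.Delay(50); // simulated latency
    return $"value-for-{key}";
}

using var cache = new MemoryCache(new MemoryCacheOptions());

async Task<string> GetAsync(string key)
{
    // 1. Look in the cache first.
    if (cache.TryGetValue(key, out string? cached) && cached is not null)
    {
        return cached;
    }

    // 2. On a miss, go to the source of truth.
    var value = await LoadFromDataStoreAsync(key);

    // 3. Keep a copy for later requests (5 minutes is an arbitrary choice).
    cache.Set(key, value, TimeSpan.FromMinutes(5));
    return value;
}

var first = await GetAsync("greeting");  // miss: reads the data store
var second = await GetAsync("greeting"); // hit: served from memory
Console.WriteLine($"{first} / {second} / data store calls: {dataStoreCalls}");
// prints "value-for-greeting / value-for-greeting / data store calls: 1"
```

The data store is queried once even though the value is requested twice, which is the whole point of the pattern.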

In this article, we will focus on In-Memory Caching in detail.

What is In-Memory Caching in ASP.NET Core?

In ASP.NET Core, In-Memory Caching allows you to store data within the application’s memory. This means the data is cached on the server’s instance, which significantly improves the application’s performance by reducing the need to repeatedly fetch the same data. In-memory caching is one of the simplest and most effective ways to implement caching in your application.

Pros and Cons of In-Memory Caching

Pros

- Much Quicker: It avoids network communication, making it faster than distributed caching.

- Highly Reliable: Data is stored directly in the server’s memory, so there is no external cache service that can become unreachable.

- Best for Small to Mid-Scale Applications: It provides efficient performance improvements for smaller applications.

Cons

- Resource Consumption: If not configured correctly, it can consume significant server resources.

- Scalability Issues: As the application scales and caching periods lengthen, maintaining the server can become costly.

- Cloud Deployment Challenges: Maintaining consistent caches across cloud deployments can be difficult.

- Not Suitable for Microservices with Multiple Replicas: In-memory caching may not be suitable for microservices architectures with multiple replicas running, as each replica would have its own cache, potentially leading to inconsistencies in cached data.

Getting Started

Setting up caching in ASP.NET Core is remarkably simple. With just a few lines of code, you can significantly enhance your application’s responsiveness. In the test at the end of this article, the response time of a cached endpoint drops from around 800ms to under 40ms.

Let’s create a new ASP.NET Core 8 Web API. I will be using Visual Studio 2022 Community Edition for this demonstration.

To enable in-memory caching, you first need to register the caching service in your application’s service container. We’ll then see how to use it with dependency injection.

Navigate to the Program.cs file, and add the following.

    builder.Services.AddMemoryCache();

This adds a non-distributed, in-memory implementation to the IServiceCollection of your application.

That’s it. Your application now supports in-memory caching. Now, to get a better understanding of how caching works, we will create a couple of CRUD endpoints.
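To see what this registration gives you, here is a small sketch that builds a service container by hand and resolves IMemoryCache from it. In a real ASP.NET Core application the framework does this for you and injects the cache into your services; the manual `ServiceCollection` is used here only so the example runs on its own, and it assumes the Microsoft.Extensions.DependencyInjection and Microsoft.Extensions.Caching.Memory packages are referenced.

```csharp
using System;
using Microsoft.Extensions.Caching.Memory;
using Microsoft.Extensions.DependencyInjection;

var services = new ServiceCollection();
services.AddMemoryCache(); // same call as in Program.cs

using var provider = services.BuildServiceProvider();

// The cache is registered as a singleton, so every consumer shares one instance.
var cacheA = provider.GetRequiredService<IMemoryCache>();
var cacheB = provider.GetRequiredService<IMemoryCache>();

cacheA.Set("answer", 42);
var found = cacheB.TryGetValue("answer", out int answer);

Console.WriteLine($"same instance: {ReferenceEquals(cacheA, cacheB)}, found: {found}, value: {answer}");
// prints "same instance: True, found: True, value: 42"
```

Because the cache is shared across the whole application instance, an entry written while handling one request is visible to every later request on that instance.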

I will be using the EF Core Code-First approach to interact with a PostgreSQL database hosted on my local machine.

Let’s install the required EF Core packages first.

    Install-Package Microsoft.EntityFrameworkCore
    Install-Package Microsoft.EntityFrameworkCore.Tools
    Install-Package Npgsql.EntityFrameworkCore.PostgreSQL

As mentioned earlier, we will be building an ASP.NET Core 8 Web API that has endpoints to:

- Get All Products: Here, the list of products will be cached.

- Get a single Product: This too, will be cached.

- Add a Product: Upon this operation, the cached list of all products will be removed/invalidated.

Setting up the Product Models

As usual, we will have a rather simple Product Model.

    public class Product
    {
        public Guid Id { get; set; }
        public string Name { get; set; } = default!;
        public string Description { get; set; } = default!;
        public decimal Price { get; set; }

        // Parameterless constructor for EF Core
        private Product() { }

        public Product(string name, string description, decimal price)
        {
            Id = Guid.NewGuid();
            Name = name;
            Description = description;
            Price = price;
        }
    }

I have also added a Product Creation DTO that we will use in our ProductService class.

    public record ProductCreationDto(string Name, string Description, decimal Price);

I am not going to go step-by-step through setting up the DB Context class and generating & applying migrations. You can find the entire source code attached at the end of this article.

If you need to learn about setting up Entity Framework Core in ASP.NET Core, I recommend this article: Entity Framework Core in ASP.NET Core - Getting Started.

However, here is the connection string I have used, to connect to my local PGSQL instance.

    "ConnectionStrings": {
      "dotnetSeries": "Host=localhost;Database=dotnetSeries;Username=postgres;Password=admin;Include Error Detail=true"
    }

I have also gone ahead and generated 1,000 fake records for Products, using the Mockaroo web app. I generated this data as an SQL Insert Script and ran it against my migrated database. Thus, we have 1,000 sample records available in our Products table. The SQL Script is included in the Scripts folder of the solution, in case you need it too.

Product Service - Using IMemoryCache

Here is the ProductService class that does all the heavy lifting for us. Let’s go through the code method by method.

Note that I have injected the AppDbContext, IMemoryCache, and the ILogger<ProductService> instances to the primary constructor of the product service class, so that we can access them throughout the class.

    public class ProductService(AppDbContext context, IMemoryCache cache, ILogger<ProductService> logger) : IProductService
    {
        public async Task Add(ProductCreationDto request)
        {
            var product = new Product(request.Name, request.Description, request.Price);
            await context.Products.AddAsync(product);
            await context.SaveChangesAsync();
            // invalidate cache for products, as new product is added
            var cacheKey = "products";
            logger.LogInformation("invalidating cache for key: {CacheKey} from cache.", cacheKey);
            cache.Remove(cacheKey);
        }

        public async Task<Product> Get(Guid id)
        {
            var cacheKey = $"product:{id}";
            logger.LogInformation("fetching data for key: {CacheKey} from cache.", cacheKey);
            if (!cache.TryGetValue(cacheKey, out Product? product))
            {
                logger.LogInformation("cache miss. fetching data for key: {CacheKey} from database.", cacheKey);
                product = await context.Products.FindAsync(id);
                var cacheOptions = new MemoryCacheEntryOptions()
                    .SetSlidingExpiration(TimeSpan.FromSeconds(30))
                    .SetAbsoluteExpiration(TimeSpan.FromSeconds(300))
                    .SetPriority(CacheItemPriority.Normal);
                logger.LogInformation("setting data for key: {CacheKey} to cache.", cacheKey);
                cache.Set(cacheKey, product, cacheOptions);
            }
            else
            {
                logger.LogInformation("cache hit for key: {CacheKey}.", cacheKey);
            }
            return product;
        }

        public async Task<List<Product>> GetAll()
        {
            var cacheKey = "products";
            logger.LogInformation("fetching data for key: {CacheKey} from cache.", cacheKey);
            if (!cache.TryGetValue(cacheKey, out List<Product>? products))
            {
                logger.LogInformation("cache miss. fetching data for key: {CacheKey} from database.", cacheKey);
                products = await context.Products.ToListAsync();
                var cacheOptions = new MemoryCacheEntryOptions()
                    .SetSlidingExpiration(TimeSpan.FromSeconds(30))
                    .SetAbsoluteExpiration(TimeSpan.FromSeconds(300))
                    .SetPriority(CacheItemPriority.NeverRemove)
                    .SetSize(2048);
                logger.LogInformation("setting data for key: {CacheKey} to cache.", cacheKey);
                cache.Set(cacheKey, products, cacheOptions);
            }
            else
            {
                logger.LogInformation("cache hit for key: {CacheKey}.", cacheKey);
            }
            return products;
        }
    }

Get All Products

Lines #36 to #57 contain the GetAll method, which returns the list of all products from the database or the cache.

We first set a cache key, which in this case is products. This key is the identifier under which the data is stored in the cache. Conceptually, a cache is a set of key-value pairs held in a data structure like a dictionary.

Next, in line #40, we check whether any data is available in the cache against the key products. If some data exists, it is read into a List<Product>. Otherwise, control goes into the if statement, and we fetch the data from the database. Note that this happens only when there is a cache miss, or in other words, only if the data doesn’t exist in our cache store.

Once we obtain the response from the database, we set this data to our cache store against the cache key, and return it to the client.

The implementation is almost the same for the Get(Guid id) method, where we pass in the Product ID. The main difference is that this time we use a dynamic cache key, product:{id}, built from the requested Product ID.
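As a side note, the check-then-load-then-set steps above can be collapsed with the GetOrCreateAsync extension method that ships with IMemoryCache. The sketch below is an alternative to the service code in this article, not a copy of it: `GetProductNameAsync` is a hypothetical helper, and a string with a short delay stands in for the database lookup.

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.Extensions.Caching.Memory;

using var cache = new MemoryCache(new MemoryCacheOptions());
var factoryCalls = 0;

// Hypothetical helper: returns the cached value, or runs the factory on a miss
// and stores its result under the same key.
Task<string?> GetProductNameAsync(Guid id) =>
    cache.GetOrCreateAsync($"product:{id}", async entry =>
    {
        // Same expiration settings as the Get method in the service.
        entry.SlidingExpiration = TimeSpan.FromSeconds(30);
        entry.AbsoluteExpirationRelativeToNow = TimeSpan.FromSeconds(300);

        factoryCalls++;
        await Task.Delay(20); // stand-in for the database call
        return $"Product {id}";
    });

var id = Guid.NewGuid();
var first = await GetProductNameAsync(id);  // miss: factory runs
var second = await GetProductNameAsync(id); // hit: factory is skipped

Console.WriteLine($"equal: {first == second}, factory calls: {factoryCalls}");
// prints "equal: True, factory calls: 1"
```

One caveat worth knowing: under concurrent requests for the same missing key, the factory may run more than once, so it should be safe to execute repeatedly.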

Cache Entry Options in ASP.NET Core

Configuring the cache options allows you to customize the caching behavior as required.

    var cacheOptions = new MemoryCacheEntryOptions()
        .SetAbsoluteExpiration(TimeSpan.FromMinutes(20))
        .SetSlidingExpiration(TimeSpan.FromMinutes(2))
        .SetPriority(CacheItemPriority.NeverRemove);

MemoryCacheEntryOptions - This class defines how an individual cache entry behaves. We create an instance of it and pass it along when we set a value on the IMemoryCache instance. But before that, let us understand the properties of MemoryCacheEntryOptions.

When working with caching in ASP.NET Core, there are several settings you can configure to optimize performance and resource management. Here are some key settings and their purposes:

Priority (SetPriority())

- Description: Sets the priority of retaining the cache entry. This determines the likelihood of the entry being removed when the cache exceeds memory limits.

- Options: Normal (default), High, Low, NeverRemove
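To make the effect of priority visible, the following sketch forces a compaction by hand. Note the assumptions: it calls Compact, which exists on the concrete MemoryCache class rather than on the IMemoryCache interface, and the keys and values are made up for illustration. In a running application, compaction is normally triggered by the cache itself when a size limit is exceeded.

```csharp
using System;
using Microsoft.Extensions.Caching.Memory;

using var cache = new MemoryCache(new MemoryCacheOptions());

cache.Set("report", "cheap to rebuild",
    new MemoryCacheEntryOptions().SetPriority(CacheItemPriority.Low));
cache.Set("config", "must stay",
    new MemoryCacheEntryOptions().SetPriority(CacheItemPriority.NeverRemove));

// Ask the cache to evict 100% of its entries. Lower priorities are evicted
// first, and NeverRemove entries are skipped by compaction.
cache.Compact(1.0);

var reportKept = cache.TryGetValue("report", out string? report);
var configKept = cache.TryGetValue("config", out string? config);

Console.WriteLine($"report kept: {reportKept}, config kept: {configKept}");
```

NeverRemove only protects an entry from compaction. The entry still disappears when it expires or when it is removed explicitly.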

Size (SetSize())

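- Description: Specifies the size of the cache entry, in units that you define (the cache does not measure entries itself). Sizes are only enforced when a SizeLimit is configured on the cache, and once a limit is set, every entry must declare a size. This helps prevent the cache from consuming excessive server resources.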
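Here is a small sketch of how Size interacts with a cache-wide limit. The limit of 100 and the entry sizes are arbitrary numbers chosen for illustration, and the cache is constructed directly with MemoryCacheOptions; with AddMemoryCache, the same SizeLimit can be set through the options callback.

```csharp
using System;
using Microsoft.Extensions.Caching.Memory;

// The units are whatever you decide they are; the cache only adds them up.
using var cache = new MemoryCache(new MemoryCacheOptions { SizeLimit = 100 });

cache.Set("a", "first", new MemoryCacheEntryOptions().SetSize(60));

// 60 + 60 would exceed the limit of 100, so this entry is not stored.
cache.Set("b", "second", new MemoryCacheEntryOptions().SetSize(60));

var hasA = cache.TryGetValue("a", out string? a);
var hasB = cache.TryGetValue("b", out string? b);

// With a SizeLimit configured, an entry without a size is rejected outright.
var threw = false;
try
{
    cache.Set("c", "no size given");
}
catch (InvalidOperationException)
{
    threw = true;
}

Console.WriteLine($"a cached: {hasA}, b cached: {hasB}, missing size threw: {threw}");
```

This also explains why SetSize has no visible effect in a cache that was registered without a SizeLimit: there is nothing to count the sizes against.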

Sliding Expiration (SetSlidingExpiration())

- Description: Sets a time interval during which the cache entry will expire if not accessed. In this example, a 2-minute sliding expiration means that if no one accesses the cache entry within 2 minutes, it will be removed.

- Usage:

cacheEntry.SetSlidingExpiration(TimeSpan.FromMinutes(2));
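The sliding behavior is easiest to see with a short window. The sketch below uses a 1-second sliding expiration instead of 2 minutes so that it finishes quickly; the key, value, and delays are illustrative assumptions.

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.Extensions.Caching.Memory;

using var cache = new MemoryCache(new MemoryCacheOptions());

cache.Set("session", "data",
    new MemoryCacheEntryOptions().SetSlidingExpiration(TimeSpan.FromSeconds(1)));

// Each successful read restarts the 1-second window.
await Task.Delay(600);
var aliveAfterFirstRead = cache.TryGetValue("session", out string? v1);

// About 1.2 seconds after creation, but only about 0.6 seconds since the last read.
await Task.Delay(600);
var aliveAfterSecondRead = cache.TryGetValue("session", out string? v2);

// No access for longer than the window: the entry is gone.
await Task.Delay(1500);
var aliveAfterIdle = cache.TryGetValue("session", out string? v3);

Console.WriteLine($"{aliveAfterFirstRead}, {aliveAfterSecondRead}, {aliveAfterIdle}");
```

An entry that keeps getting read can therefore live far beyond its sliding window, which is why sliding expiration is usually paired with an absolute one.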

Absolute Expiration (SetAbsoluteExpiration())

- Description: Defines a fixed time after which the cache entry will expire, regardless of how often it is accessed. This prevents the cache from serving outdated data indefinitely.

- Best Practice: Always use both sliding and absolute expiration together. Ensure that the absolute expiration time is longer than the sliding expiration time to avoid conflicts.

- Usage:

cacheEntry.SetAbsoluteExpiration(TimeSpan.FromMinutes(20));
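To illustrate why the two expirations are combined, this sketch keeps reading an entry often enough to satisfy the sliding window and shows that the absolute limit still removes it. The durations (1 second sliding, 1.5 seconds absolute) are shortened, illustrative values.

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.Extensions.Caching.Memory;

using var cache = new MemoryCache(new MemoryCacheOptions());

var options = new MemoryCacheEntryOptions()
    .SetSlidingExpiration(TimeSpan.FromSeconds(1))      // idle limit
    .SetAbsoluteExpiration(TimeSpan.FromSeconds(1.5));  // hard upper bound

cache.Set("prices", "snapshot", options);

await Task.Delay(600);
var aliveEarly = cache.TryGetValue("prices", out string? p1); // about 0.6s old

await Task.Delay(600);
var aliveMid = cache.TryGetValue("prices", out string? p2);   // about 1.2s old

// About 1.8s old: the last read was only 0.6s ago, so the sliding window
// alone would keep the entry, but the absolute limit has passed.
await Task.Delay(600);
var aliveLate = cache.TryGetValue("prices", out string? p3);

Console.WriteLine($"{aliveEarly}, {aliveMid}, {aliveLate}");
```

The absolute expiration is what guarantees that even a very popular entry is eventually refreshed from the data source.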

When working with In-Memory Caching in ASP.NET Core, it’s important to consider the Priority, Size, Sliding Expiration, and Absolute Expiration settings carefully while building your application.

Add Product - Cache Invalidation

Caching is not as simple as it sounds. While it can significantly improve application performance by reducing the need to fetch data repeatedly, managing the cache effectively involves ensuring that the cached data remains accurate and up-to-date. This is where cache invalidation comes into play.

Cache invalidation is crucial because it ensures that stale or outdated data does not persist in the cache, leading to inconsistencies and potentially erroneous behavior in your application.

Here is a scenario. What if the user creates a new product, and then tries to fetch the list of all products? There is a high probability that our application would serve stale data from before the product was created. When the underlying data changes, the cache must be updated to reflect these changes.

Strategies for Cache Invalidation

Time-Based Invalidation

- Sliding Expiration: The cache entry expires after a specified time if it has not been accessed. This is useful for less frequently accessed data.

- Absolute Expiration: The cache entry expires after a fixed time, regardless of access frequency. This ensures that data is refreshed periodically.

Dependency-Based Invalidation

- Data Dependencies: Cache entries are invalidated when the data they depend on changes. This can be implemented using event-based mechanisms or change notifications.

- Parent-Child Relationships: In scenarios where cached data is hierarchical, changes to parent data can trigger invalidation of child data.

Manual Invalidation

- Explicit Removal: Developers can explicitly remove cache entries when certain conditions are met or specific actions are performed. For example, after updating a database record, the corresponding cache entry can be removed or updated.

- Tagging: Assigning tags to cache entries allows for batch invalidation. For instance, all entries tagged with a specific label can be invalidated at once.
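IMemoryCache has no tagging feature of its own as far as this article is concerned, but dependency-style and batch invalidation can be approximated with expiration tokens. The sketch below attaches the same CancellationChangeToken to several entries and expires them together by cancelling its source. The `productsTag` variable, the `Tagged` helper, and the keys are hypothetical names used only for this illustration.

```csharp
using System;
using System.Threading;
using Microsoft.Extensions.Caching.Memory;
using Microsoft.Extensions.Primitives;

using var cache = new MemoryCache(new MemoryCacheOptions());

// One token source acts as the "tag" shared by all product-related entries.
var productsTag = new CancellationTokenSource();

MemoryCacheEntryOptions Tagged() =>
    new MemoryCacheEntryOptions()
        .AddExpirationToken(new CancellationChangeToken(productsTag.Token));

cache.Set("products", "full list", Tagged());
cache.Set("product:1", "single item", Tagged());
cache.Set("settings", "unrelated"); // not tagged

// Something changed in the underlying data: expire every tagged entry at once.
productsTag.Cancel();

var listAlive = cache.TryGetValue("products", out string? list);
var itemAlive = cache.TryGetValue("product:1", out string? item);
var settingsAlive = cache.TryGetValue("settings", out string? settings);

Console.WriteLine($"list: {listAlive}, item: {itemAlive}, settings: {settingsAlive}");
// prints "list: False, item: False, settings: True"
```

A cancelled source cannot be reused, so a real implementation would swap in a fresh CancellationTokenSource before caching new entries under the same tag.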

For our demonstration, we will be using manual cache invalidation. Whenever a new product is added, we call cache.Remove(cacheKey), ensuring that the list of products is removed from the cache.

You can further enhance this mechanism by updating the cache, instead of wiping it out completely. For instance, when a new product is created, you can get the list of products from the cache, append the newly created product to the list, and re-add the updated list to the cache memory again. This might increase the round-trips to and from the cache memory, but this can be efficient as well, based on your system design.
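A minimal sketch of this update-in-place idea follows, using a list of product names instead of the Product entity to stay self-contained. `AddToCachedList` is a hypothetical helper and the 5-minute expiration is an arbitrary choice.

```csharp
using System;
using System.Collections.Generic;
using Microsoft.Extensions.Caching.Memory;

using var cache = new MemoryCache(new MemoryCacheOptions());
const string cacheKey = "products";

MemoryCacheEntryOptions Options() =>
    new MemoryCacheEntryOptions().SetAbsoluteExpiration(TimeSpan.FromMinutes(5));

cache.Set(cacheKey, new List<string> { "Keyboard", "Mouse" }, Options());

void AddToCachedList(string newProduct)
{
    if (cache.TryGetValue(cacheKey, out List<string>? current) && current is not null)
    {
        // Build a new list instead of mutating the cached one, because other
        // requests may be enumerating the cached instance at the same time.
        var updated = new List<string>(current) { newProduct };

        // Setting the key again replaces the entry and restarts its expiration.
        cache.Set(cacheKey, updated, Options());
    }
    // On a cache miss there is nothing to update; the next read repopulates
    // the cache from the database.
}

AddToCachedList("Monitor");

cache.TryGetValue(cacheKey, out List<string>? products);
Console.WriteLine(string.Join(", ", products ?? new List<string>()));
// prints "Keyboard, Mouse, Monitor"
```

The read-copy-write sequence is not atomic, so two concurrent additions could overwrite each other. If that matters for your system, removing the entry as we do in the service is the safer default.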

You can also run the entire cache invalidation process as a background task, so that your application doesn’t have to wait for the cache operations to complete before sending out the response.

Minimal API Endpoints

Now that we have the required Service methods implemented, let’s wire the Product Service to actual API Endpoints in our .NET 8 Web API. Open up Program.cs, and add in the following Minimal API Endpoints.

    app.MapGet("/products", async (IProductService service) =>
    {
        var products = await service.GetAll();
        return Results.Ok(products);
    });

    app.MapGet("/products/{id:guid}", async (Guid id, IProductService service) =>
    {
        var product = await service.Get(id);
        // FindAsync returns null for an unknown ID; answer with 404 instead of 200 with a null body
        return product is null ? Results.NotFound() : Results.Ok(product);
    });

    app.MapPost("/products", async (ProductCreationDto product, IProductService service) =>
    {
        await service.Add(product);
        return Results.Created();
    });

You might also have to register the IProductService service in the dependency injection (DI) container with a transient lifetime.

    builder.Services.AddTransient<IProductService, ProductService>();

Testing Caching in ASP.NET Core

Let’s build and run our application. I will be using Postman to test our implementation.

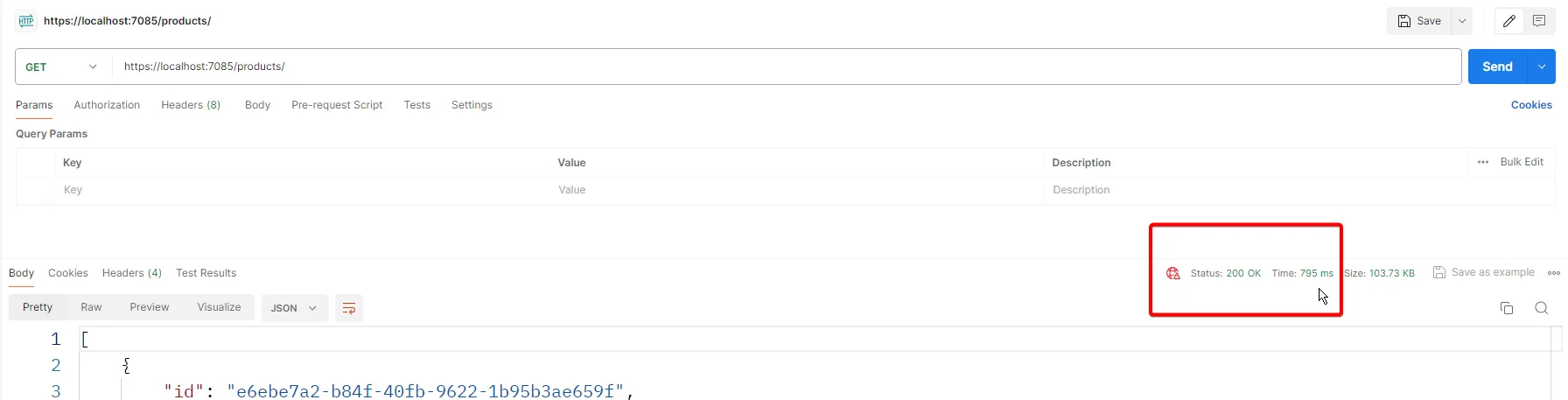

First, let’s hit the GetAll endpoint. Since there is no data in the cache, we expect it to be fetched from the database.

As you can see, it takes close to 800ms to return the list of 1,000 products. The list should now be cached, so if we hit the endpoint again, we expect it to be served from the cache instead.

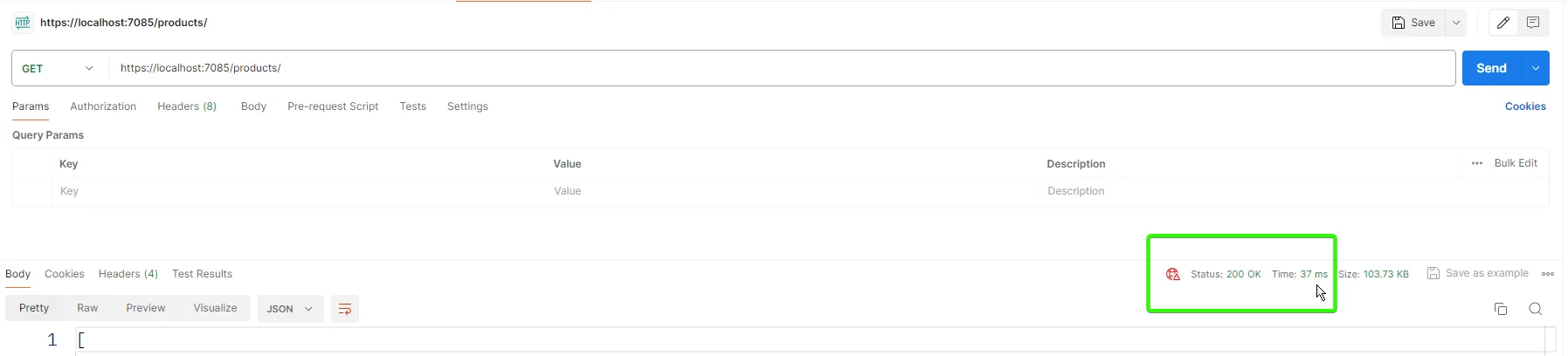

Let’s hit it again.

Now, the response times have significantly dropped, to under 40ms!

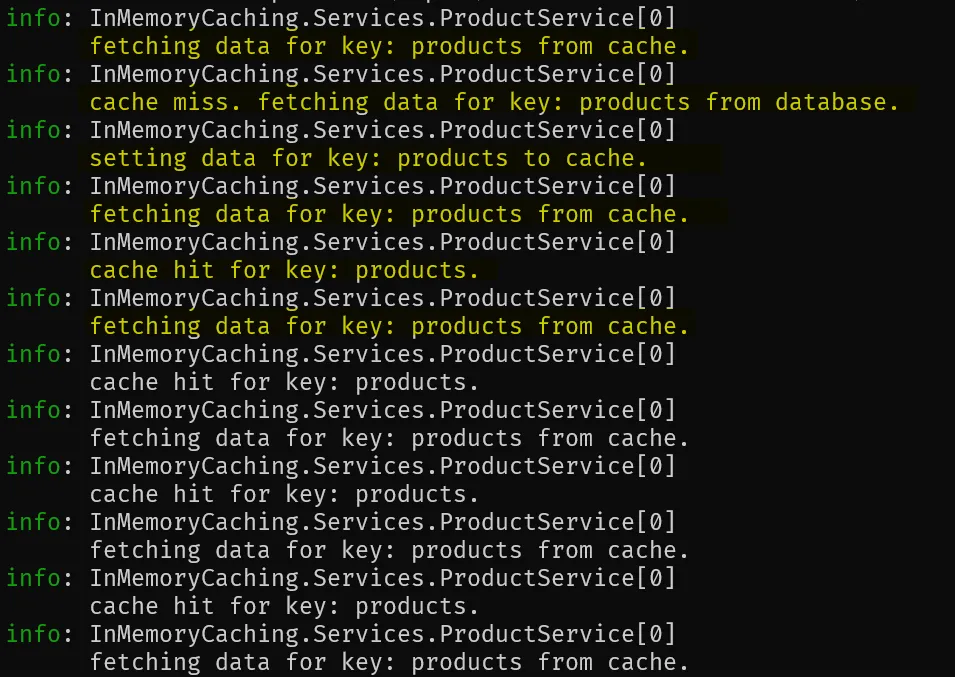

The logs are exactly as we expected.

Points to Remember

Here are some important points to consider while implementing caching.

- Your application should never depend on cached data being present, because an entry can expire or be evicted at any time. The actual data source remains the source of truth, and the cache is an enhancement that is used only when a valid entry is available.

- Try to restrict the growth of the cache in memory. This is crucial, as caching may take up your server resources if not configured properly. You can set a SizeLimit on the cache and a Size on each entry to cap how much is stored.

- Use Absolute Expiration and Sliding Expiration so that stale or unused entries are dropped automatically. This keeps responses reasonably fresh and also helps restrict cache memory usage.

Background cache update

Additionally, as an improvement, you can use Background Jobs to update the cache at regular intervals. If the Absolute Cache Expiration is set to 5 minutes, you can run a recurring job every 6 minutes to update the cache entry to its latest version. You can use Hangfire to achieve the same in ASP.NET Core Applications.
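As a rough illustration of the idea without pulling in Hangfire, the sketch below refreshes a cache entry on a timer using PeriodicTimer. This is a stand-in for a recurring job, not Hangfire code: `LoadProductsAsync` and `RefreshLoopAsync` are hypothetical helpers, and the intervals are shortened to milliseconds so the example completes quickly.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;
using Microsoft.Extensions.Caching.Memory;

using var cache = new MemoryCache(new MemoryCacheOptions());

// Hypothetical loader standing in for the database query.
var version = 0;
Task<string> LoadProductsAsync() => Task.FromResult($"products v{++version}");

async Task RefreshLoopAsync(TimeSpan interval, CancellationToken ct)
{
    using var timer = new PeriodicTimer(interval);
    try
    {
        do
        {
            var fresh = await LoadProductsAsync();
            // Overwrite the entry so readers always find a recent copy.
            cache.Set("products", fresh, TimeSpan.FromSeconds(5));
        }
        while (await timer.WaitForNextTickAsync(ct));
    }
    catch (OperationCanceledException)
    {
        // Expected when the loop is stopped.
    }
}

using var cts = new CancellationTokenSource();
var loop = RefreshLoopAsync(TimeSpan.FromMilliseconds(200), cts.Token);

await Task.Delay(700); // let a few refreshes happen
cts.Cancel();
await loop;

var cachedNow = cache.TryGetValue("products", out string? latest);
Console.WriteLine($"cached: {cachedNow}, refreshes: {version}, latest: {latest}");
```

In an ASP.NET Core application, a loop like this would typically live in a hosted background service, or be replaced by a Hangfire recurring job as suggested above.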

Let’s wind up this article here.

For the next article, we will discuss more advanced concepts of caching like Distributed Caching, Setting up Redis, Redis Caching, PostBack Calls, and much more.

Summary

In this detailed article, we’ve explored various aspects of caching, including the basics, in-memory caching, and how to implement in-memory caching in ASP.NET Core. We also provided a practical implementation example. You can find the complete source code here.

I hope you found this article informative and gained new insights into caching strategies. If you have any questions, comments, or suggestions, please share them in the comment section below. Your feedback is invaluable and helps us improve.

Don’t forget to share this article with your developer community to spread the knowledge. Thank you for reading, and happy coding!