Modern applications often need to handle image uploads — whether it’s for user profiles, product galleries, or content feeds. But building a scalable, reliable, and cost-effective image processing pipeline can quickly turn into a mess of infrastructure, background jobs, and maintenance overhead.

This is where serverless architecture on AWS shines.

In this article, we’ll walk through how you, as a .NET developer, can build a fully serverless image processing pipeline using AWS services like S3, SQS, and Lambda — all while writing your core processing logic in .NET.

The entire source code of this implementation is available at the end of this article, in a GitHub repository.

You’ll learn how to:

- Automatically trigger image processing on upload

- Generate thumbnails using the SixLabors ImageSharp library

- Leverage AWS services without managing servers

- Make the whole system scalable, resilient, and production-ready

What We’ll Build

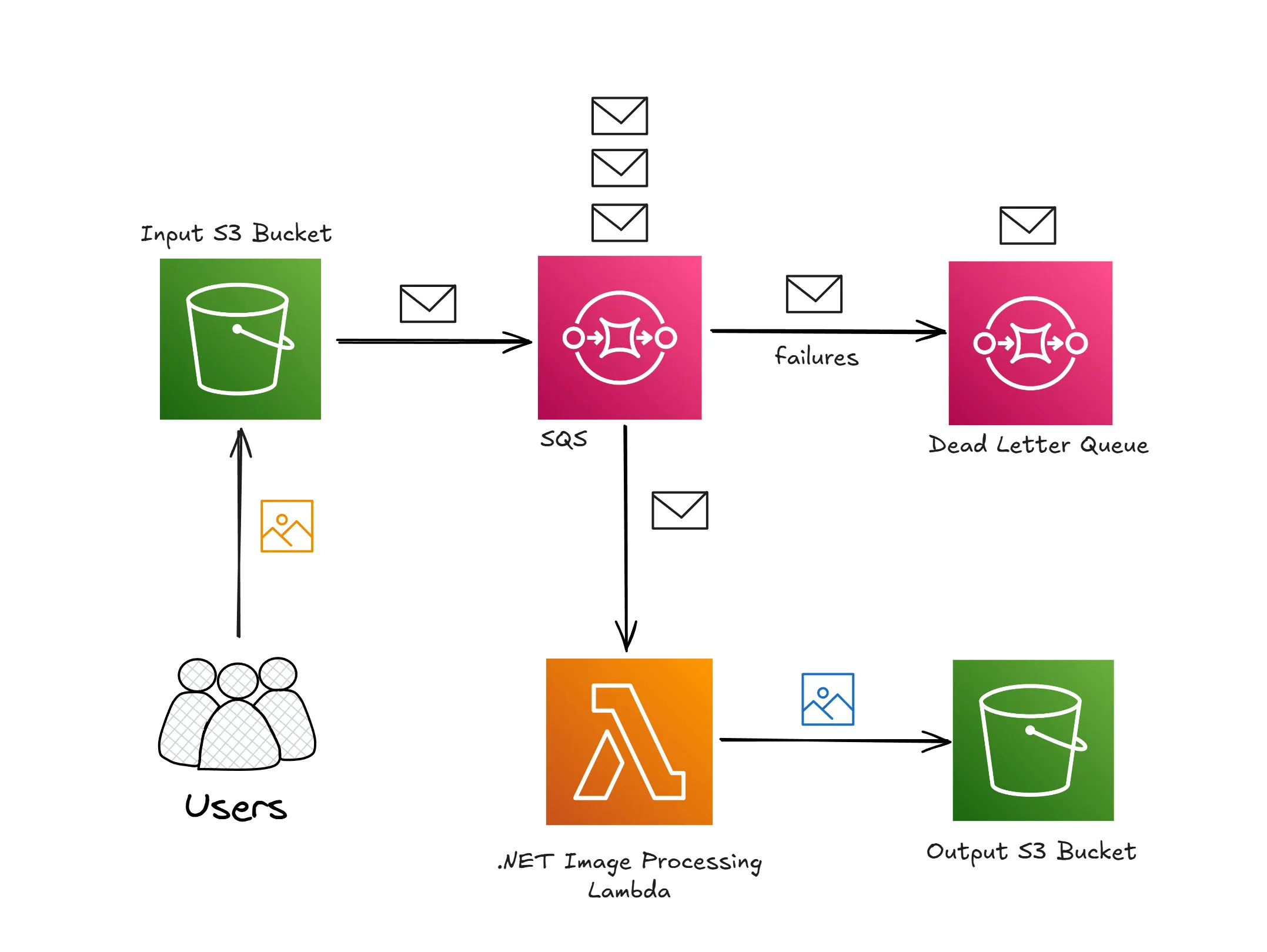

We will develop a serverless image processing pipeline using AWS services and .NET. The goal is to create an automated workflow that processes images immediately after they are uploaded by a user.

When a user uploads an image to an Amazon S3 bucket, the event triggers a message to be sent to an Amazon SQS queue. A .NET-based AWS Lambda function is configured to respond to these messages. It retrieves the image from the S3 input bucket, processes it using the SixLabors ImageSharp library to generate a thumbnail, and then uploads the resulting image to a separate output S3 bucket.

We will also build a lightweight .NET 9 Web API with a Minimal API endpoint for uploading images to the input S3 bucket. This endpoint also handles file validation, ensuring that only images are uploaded to the bucket.

The processing pipeline is entirely serverless, which means there are no servers to manage or scale manually. It is designed to be highly scalable, cost-effective, and resilient to failures. SQS decouples image processing from the upload process, while a dead-letter queue (DLQ) captures failed messages for later inspection, ensuring reliability and traceability.

Prerequisites

As usual, here are the prerequisites you will need for this implementation.

- An AWS Free Tier Account.

- A development machine authenticated with AWS (for example, via the AWS CLI)

- Visual Studio IDE

- .NET 9 SDK

- A good understanding of AWS Lambda, Amazon S3, Amazon SQS, and dead-letter queues. I have written an article on each of these services, and I recommend getting comfortable with them before building this system.

Core Components

This serverless image processing pipeline is built using a set of managed AWS services, each fulfilling a specific role in the workflow. Together, they create a decoupled, scalable, and resilient architecture that minimizes operational overhead and simplifies maintenance.

At the center of the system is Amazon S3, which serves as both the entry point and the storage layer. When a user uploads an image to the designated input bucket, S3 generates an event notification. This event is configured to trigger a message to an Amazon SQS queue. By using SQS as an intermediary, we ensure that the upload and processing stages are decoupled. This makes the system more reliable and better equipped to handle spikes in traffic without overloading the downstream processing function.

The core processing is handled by an AWS Lambda function written in .NET. This function listens for new messages in the SQS queue. When triggered, it downloads the image from the input bucket, processes it using the ImageSharp library to generate a thumbnail, and stores the result in a separate output S3 bucket. This processing logic is entirely serverless and scales automatically based on the number of incoming messages.

To improve fault tolerance, the SQS queue is configured with a dead-letter queue (DLQ). Any message that fails to be processed after a certain number of retries is moved to the DLQ, allowing for post-mortem analysis without data loss.

Each of these components—S3, SQS, Lambda, and the DLQ—plays a vital role in building a robust, event-driven image processing system tailored for .NET developers on AWS.

High-Level Architecture: Workflow Explained

The architecture of this serverless image processing system follows an event-driven, decoupled design using core AWS services. It is optimized for scalability, fault tolerance, and minimal operational overhead—making it ideal for .NET developers who want to build cloud-native solutions without managing infrastructure.

The workflow begins when a user uploads an image to the S3 input bucket. This event triggers a message to be sent to an Amazon SQS queue via a pre-configured S3 event notification. The SQS queue serves as a buffer, ensuring that image uploads are decoupled from downstream processing and can be handled at scale, even during traffic spikes.

An AWS Lambda function, implemented in .NET, is subscribed to this queue. It is automatically invoked when a new message arrives. The function retrieves the uploaded image from the input bucket, processes it using the ImageSharp library to create a thumbnail, and uploads the processed image to the output S3 bucket. If a message still fails after the configured number of receive attempts, it is moved to a dead-letter queue (DLQ) for later inspection.

This architecture not only simplifies asynchronous image processing but also ensures robustness through built-in retry mechanisms and failure isolation using DLQ.

With that said, let’s get started with the actual implementation!

Setting up the Input S3 Bucket

The first step in our image processing pipeline is to create the S3 bucket where users will upload their original images. This bucket acts as the entry point to the workflow and must be configured to send event notifications when new objects are added.

Begin by creating a new S3 bucket using the AWS Management Console, the AWS CLI, or Infrastructure as Code (e.g., CloudFormation or CDK). Give the bucket a descriptive, globally unique name. I named mine cwm-image-input.

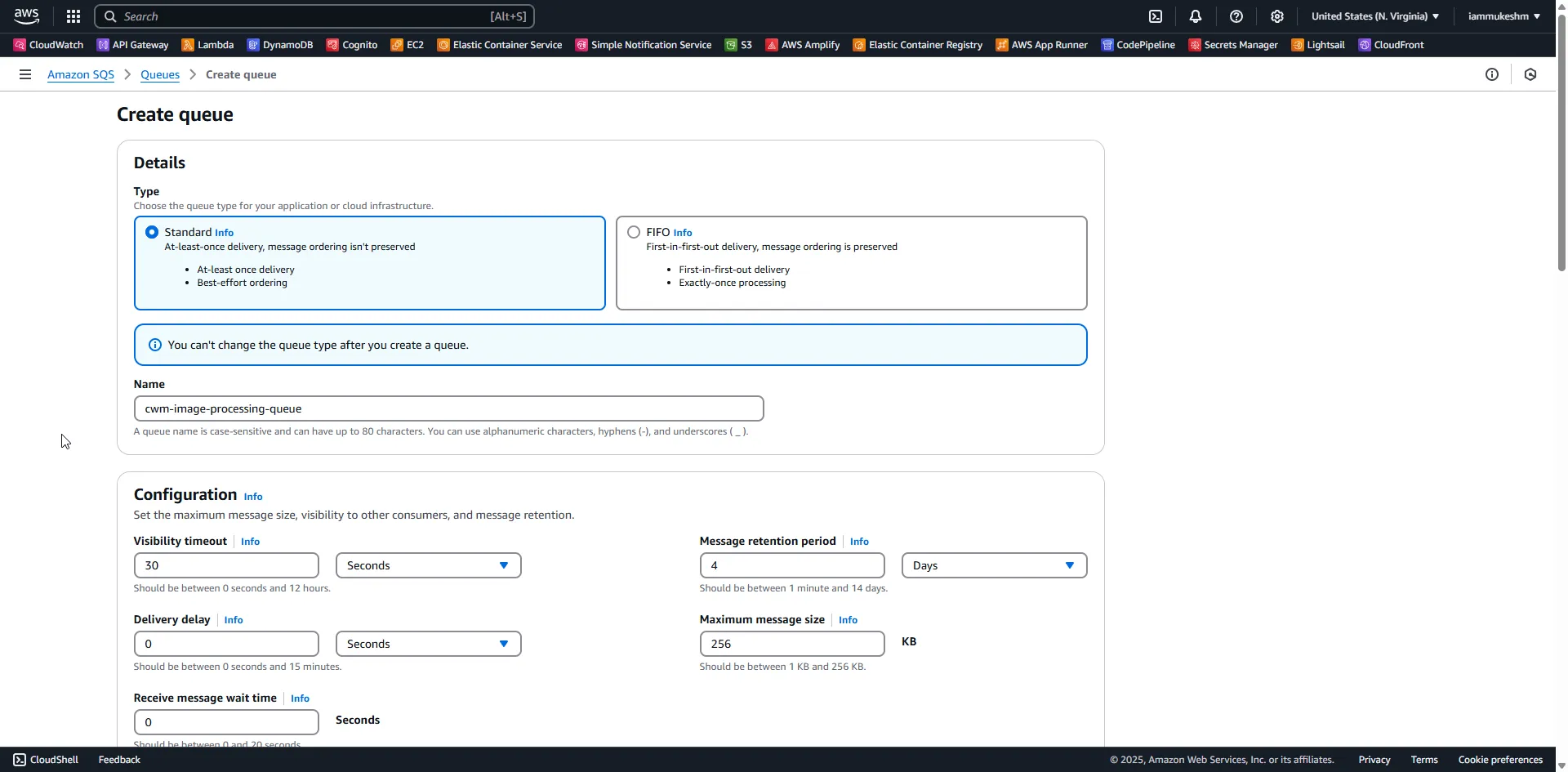

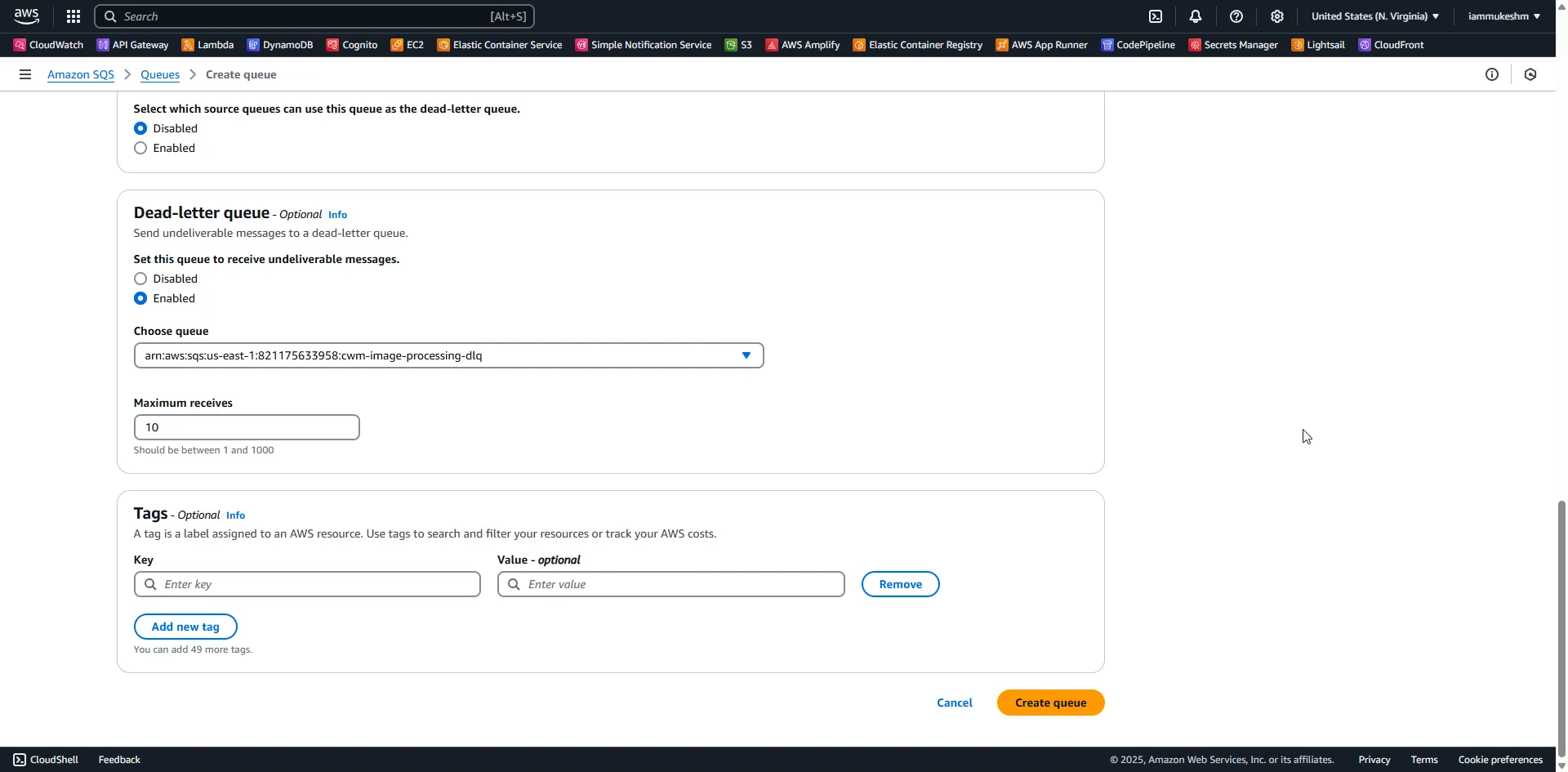
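Bucket names are shared across all AWS accounts, so only S3 can tell you whether a name is still free. The format rules, however, can be checked before you call the API. Here is a minimal sketch of such a check; IsPlausibleBucketName is a hypothetical helper, and it covers only the core naming rules for general purpose buckets, not every reserved prefix or suffix AWS defines.

```csharp
using System;
using System.Text.RegularExpressions;

// Checks the core naming rules for S3 general purpose buckets:
// 3-63 characters; lowercase letters, digits, dots and hyphens only;
// starts and ends with a letter or digit; no adjacent dots;
// not formatted like an IPv4 address; no "xn--" prefix or "-s3alias" suffix.
// It does NOT check global uniqueness; only S3 knows that at creation time.
static bool IsPlausibleBucketName(string name)
{
    if (name.Length is < 3 or > 63) return false;
    if (!Regex.IsMatch(name, "^[a-z0-9][a-z0-9.-]*[a-z0-9]$")) return false;
    if (name.Contains("..")) return false;
    if (Regex.IsMatch(name, @"^\d{1,3}(\.\d{1,3}){3}$")) return false; // e.g. 192.168.5.4
    if (name.StartsWith("xn--", StringComparison.Ordinal) ||
        name.EndsWith("-s3alias", StringComparison.Ordinal)) return false;
    return true;
}

Console.WriteLine(IsPlausibleBucketName("cwm-image-input")); // True
Console.WriteLine(IsPlausibleBucketName("CWM_Image_Input")); // False
```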

Creating the SQS Queue with DLQ

Now we need to create an SQS queue that will receive event notifications from the S3 input bucket. This queue serves as a buffer between the image upload and the processing logic, allowing the system to handle traffic bursts and decouple the upload from the compute layer.

Start by creating a standard SQS queue—for example, cwm-image-processing-queue. This queue will receive messages each time an image is uploaded to the S3 input bucket. Make sure to configure access policies so that the S3 service (s3.amazonaws.com) is allowed to send messages to this queue.

Once your main queue is created, open it, go to the Access policy tab, and add the following policy so that your S3 bucket can push messages into this queue. Replace the account number placeholders, and adjust the region (us-east-1 here) if your resources live elsewhere.

```json
{
  "Version": "2012-10-17",
  "Id": "__default_policy_ID",
  "Statement": [
    {
      "Sid": "__owner_statement",
      "Effect": "Allow",
      "Principal": { "AWS": "arn:aws:iam::<your aws account number>:root" },
      "Action": "SQS:*",
      "Resource": "arn:aws:sqs:us-east-1:<your aws account number>:cwm-image-processing-queue"
    },
    {
      "Sid": "AllowS3ToSendMessage",
      "Effect": "Allow",
      "Principal": { "Service": "s3.amazonaws.com" },
      "Action": "sqs:SendMessage",
      "Resource": "arn:aws:sqs:us-east-1:<your aws account number>:cwm-image-processing-queue",
      "Condition": {
        "ArnEquals": { "aws:SourceArn": "arn:aws:s3:::cwm-image-input" }
      }
    }
  ]
}
```

To improve fault tolerance, configure a dead-letter queue (DLQ), for example cwm-image-processing-dlq. The DLQ captures messages that could not be processed successfully after a configured number of retries. This lets you isolate and inspect problematic events without affecting the rest of the system.

While setting up the DLQ:

- Set a reasonable Maximum receives value in the main queue’s dead-letter queue settings. This defines how many times a message can be received (and therefore retried) before SQS moves it to the DLQ.

- Apply the necessary IAM permissions for Lambda to poll and delete messages from the main queue.

- Optionally, configure CloudWatch Alarms on the DLQ to get notified when failures occur.

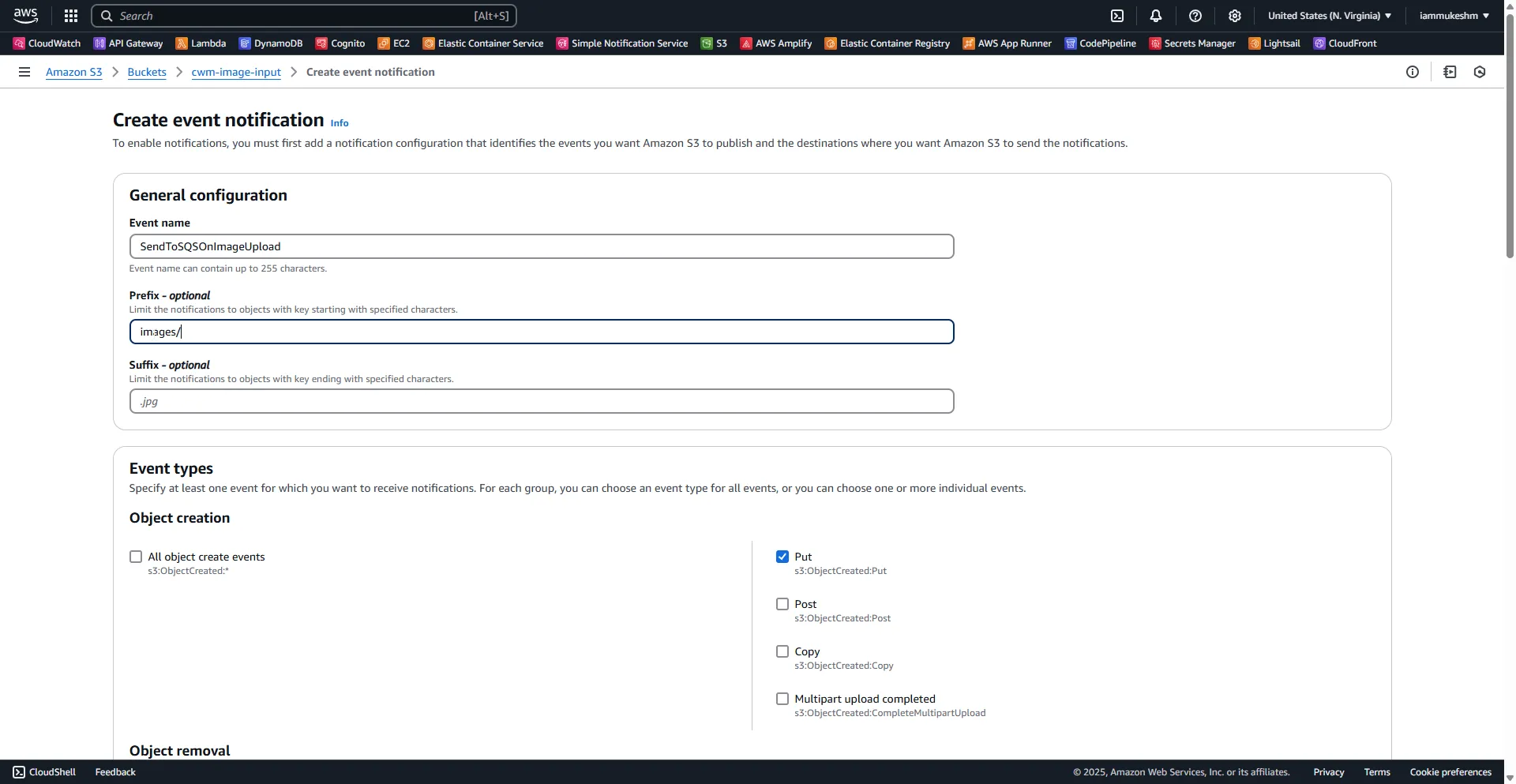

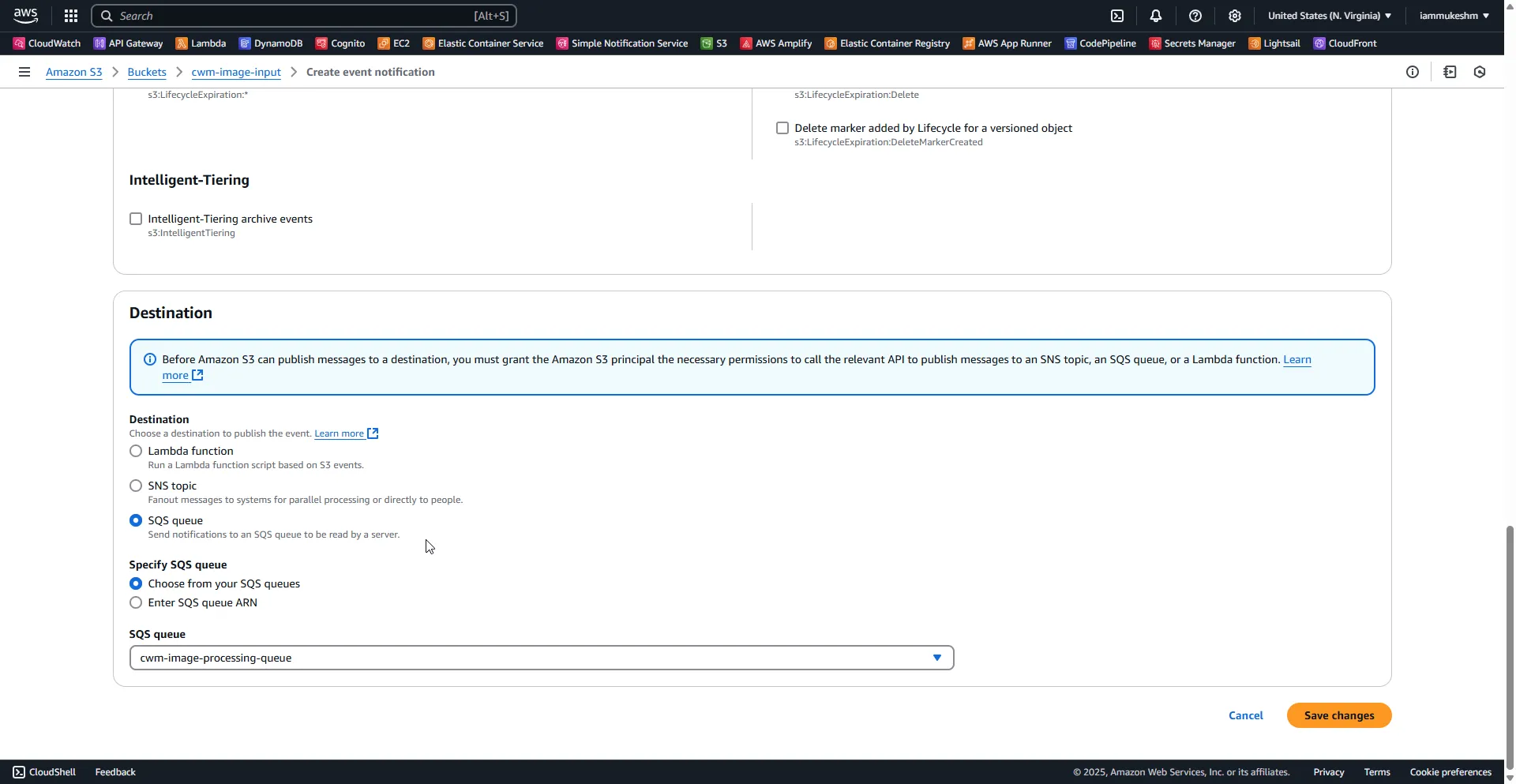
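If you later script this setup instead of clicking through the console (for example, with the SQS SetQueueAttributes API), the DLQ link and the Maximum receives value live in a single RedrivePolicy attribute on the main queue. The following is a minimal sketch that builds that attribute value with System.Text.Json. BuildRedrivePolicy is a hypothetical helper, the account ID is a placeholder, and actually sending the attribute with the AWS SDK is left out to keep the snippet dependency-free.

```csharp
using System;
using System.Globalization;
using System.Text.Json;

// Builds the value of the main queue's RedrivePolicy attribute.
// SQS expects this attribute as a JSON string with two fields:
//   deadLetterTargetArn - ARN of the dead-letter queue
//   maxReceiveCount     - receives allowed before SQS moves a message to the DLQ
static string BuildRedrivePolicy(string dlqArn, int maxReceiveCount)
{
    // SQS accepts values from 1 to 1,000 for the receive count.
    if (maxReceiveCount is < 1 or > 1000)
        throw new ArgumentOutOfRangeException(nameof(maxReceiveCount));

    return JsonSerializer.Serialize(new
    {
        deadLetterTargetArn = dlqArn,
        // AWS examples pass the count as a string, so this sketch does the same.
        maxReceiveCount = maxReceiveCount.ToString(CultureInfo.InvariantCulture)
    });
}

// 123456789012 is a placeholder account ID.
Console.WriteLine(BuildRedrivePolicy(
    "arn:aws:sqs:us-east-1:123456789012:cwm-image-processing-dlq", 5));
```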

Configuring Input S3 Bucket to Push Messages to SQS

With both the input S3 bucket and the SQS queue set up, the next step is to configure the S3 bucket to send event notifications to the queue whenever a new image is uploaded. This configuration enables automatic triggering of the image processing pipeline as soon as an object is created in the input bucket.

Start by opening the S3 console and navigating to your input bucket (e.g., cwm-image-input). Go to the “Properties” tab, scroll down to the “Event notifications” section, and create a new event notification.

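Give the event a descriptive name such as SendToSQSOnImageUpload. Under the event types, select “Put”, which corresponds to the s3:ObjectCreated:Put event. The notification then fires when a file is uploaded with a single PUT request, and not when files are deleted. Note that uploading to an existing key (an overwrite) also counts as object creation and fires the event, and that files sent via multipart upload emit s3:ObjectCreated:CompleteMultipartUpload instead, so select that event type too (or all object create events) if your clients use multipart uploads.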

Next, set the destination to SQS queue, and select the queue you previously created (e.g., cwm-image-processing-queue). If you do not see the queue listed, ensure that the correct permissions are in place—specifically, that the SQS queue’s access policy allows the s3.amazonaws.com service to send messages, with the aws:SourceArn condition matching the input bucket.

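Optionally, you can filter notifications so they only trigger for certain keys: a suffix filter (e.g., .jpg) limits them to a file type, and a prefix filter (e.g., images/) limits them to a folder. This helps avoid unnecessary processing of unsupported or irrelevant files. In this setup, I used the images/ prefix, which is where the upload API stores every file.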

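After saving the configuration, every time a user uploads an image to the input bucket, a corresponding message is pushed to the SQS queue, containing metadata such as the bucket name, the object key, and the event name. When you first save the configuration, S3 also sends a one-off s3:TestEvent message to the queue. It has no Records array, so the processing code should skip it rather than fail on it.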
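To make the message format concrete, here is a minimal, dependency-free sketch that pulls the bucket name and raw object key out of a message body using System.Text.Json's JsonDocument, and returns nothing for the test event. The sample body is trimmed to the fields the sketch reads (real notifications carry more, such as eventTime and awsRegion), and ExtractObjects is a hypothetical helper; the Lambda later in this article deserializes into the S3Event type from Amazon.Lambda.S3Events instead.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Returns (bucket, rawKey) for every record in an S3 event notification body.
// The s3:TestEvent message has no "Records" array, so it yields an empty list.
static List<(string Bucket, string RawKey)> ExtractObjects(string messageBody)
{
    var results = new List<(string Bucket, string RawKey)>();
    using var doc = JsonDocument.Parse(messageBody);

    if (!doc.RootElement.TryGetProperty("Records", out var records))
        return results;

    foreach (var record in records.EnumerateArray())
    {
        var s3 = record.GetProperty("s3");
        var bucket = s3.GetProperty("bucket").GetProperty("name").GetString() ?? "";
        var key = s3.GetProperty("object").GetProperty("key").GetString() ?? ""; // still URL-encoded
        results.Add((bucket, key));
    }
    return results;
}

// Trimmed example body; real notifications include more fields.
var body = """{"Records":[{"eventName":"ObjectCreated:Put","s3":{"bucket":{"name":"cwm-image-input"},"object":{"key":"images/abc_my+photo.jpg","size":2048}}}]}""";
foreach (var (bucketName, rawKey) in ExtractObjects(body))
    Console.WriteLine($"{bucketName}/{rawKey}"); // cwm-image-input/images/abc_my+photo.jpg
```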

This completes the event-driven link between S3 and SQS and sets the stage for the Lambda function to begin processing images.

With the input S3 bucket and the SQS queue (with DLQ) in place, the foundation of your event-driven pipeline is ready.

Next, we’ll set up the following:

- .NET 9 Web API to upload the images into the Input S3 Bucket.

- Lambda function to process these messages and generate thumbnails.

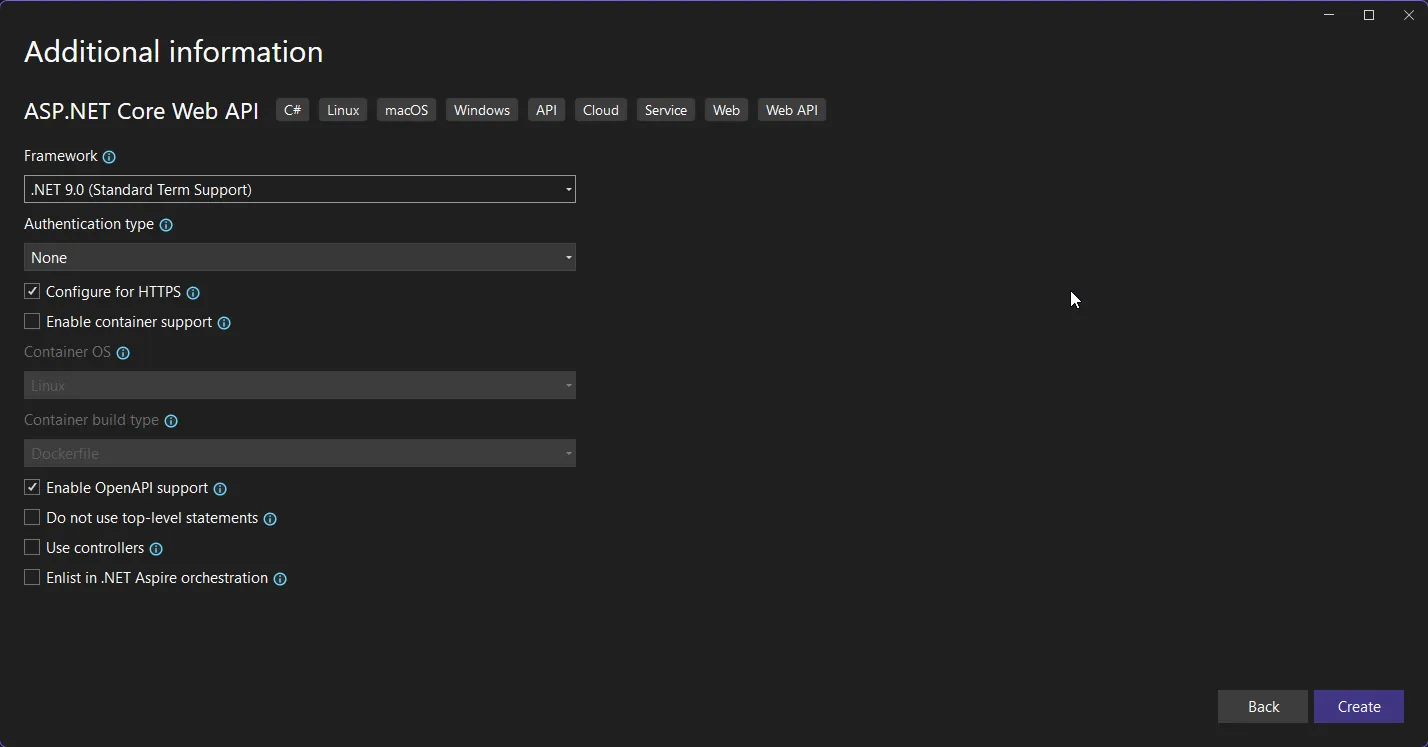

.NET 9 Web API to Upload Images to S3 Bucket

First, create a new solution in Visual Studio. I named mine ServerlessImageProcessor. Then add a new .NET 9 Web API project named ImageUploader.API.

Let’s install the required AWS SDK packages.

```powershell
Install-Package AWSSDK.Extensions.NETCore.Setup
Install-Package AWSSDK.S3
```

And here is the code required to upload incoming files to our S3 bucket.

```csharp
using Amazon.S3;
using Amazon.S3.Model;

var builder = WebApplication.CreateBuilder(args);

// Register IAmazonS3 with dependency injection (from AWSSDK.Extensions.NETCore.Setup)
builder.Services.AddAWSService<IAmazonS3>();

var app = builder.Build();
app.UseHttpsRedirection();

var bucketName = "cwm-image-input"; // Replace with your actual bucket name

app.MapPost("/upload", async (HttpRequest request, IAmazonS3 s3Client) =>
{
    // Read the multipart/form-data payload
    var form = await request.ReadFormAsync();
    var files = form.Files;

    if (files == null || files.Count == 0)
        return Results.BadRequest("No files uploaded.");

    var allowedTypes = new[] { "image/jpeg", "image/png", "image/webp", "image/gif" };
    var uploadTasks = new List<Task>();
    var uploadedKeys = new List<string>();

    foreach (var file in files)
    {
        // Skip anything that does not declare an allowed image MIME type
        if (!allowedTypes.Contains(file.ContentType)) continue;

        // Unique key under images/, the prefix the event notification watches
        var key = $"images/{Guid.NewGuid()}_{file.FileName}";
        uploadedKeys.Add(key);

        var stream = file.OpenReadStream();

        var s3Request = new PutObjectRequest
        {
            BucketName = bucketName,
            Key = key,
            InputStream = stream,
            ContentType = file.ContentType
        };

        // Start the upload task
        uploadTasks.Add(s3Client.PutObjectAsync(s3Request));
    }

    // Wait for all uploads to finish before responding
    await Task.WhenAll(uploadTasks);

    return Results.Ok(new { UploadedFiles = uploadedKeys });
});

app.Run();
```

The line builder.Services.AddAWSService<IAmazonS3>() is critical because it registers the Amazon S3 client with dependency injection, allowing it to be injected into your endpoint handler automatically. The client picks up credentials and region from your environment, such as the AWS CLI profile you configured earlier.

The route app.MapPost("/upload", async (HttpRequest request, IAmazonS3 s3Client) => defines the POST endpoint and injects both the HTTP request and the S3 client. This is where the image upload logic begins.

var form = await request.ReadFormAsync(); reads the incoming multipart/form-data payload and gives access to uploaded files.

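The allowedTypes array filters out non-image uploads by checking each file’s MIME type, so the endpoint only forwards images to S3. Keep in mind that ContentType is supplied by the client and can be spoofed, so treat this as a basic filter rather than a security boundary.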

var key = $"images/{Guid.NewGuid()}_{file.FileName}"; creates a unique object key under the images/ prefix, which prevents filename collisions in S3 and simulates a folder structure. This is the same prefix we set as the filter on the event notification.

The PutObjectRequest object holds the metadata for the S3 upload, including the bucket name, file key, stream, and content type. This is the core of the S3 upload operation.

Each upload is started asynchronously using s3Client.PutObjectAsync(s3Request), and the tasks are collected.

Finally, await Task.WhenAll(uploadTasks); ensures all uploads finish before returning the response, making this an efficient batch upload handler.
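Because the allowedTypes check trusts the Content-Type the client sends, a mislabeled file can slip through. A stricter option is to inspect the file’s leading bytes (its “magic number”). Here is a minimal sketch, assuming .NET 8 or later (the article uses .NET 9); SniffImageType and SniffStream are hypothetical helpers, and the signatures cover only the four formats the endpoint allows.

```csharp
using System;
using System.IO;

// Returns the MIME type implied by the file's leading bytes,
// or null if it is not one of the four types the upload endpoint allows.
static string? SniffImageType(ReadOnlySpan<byte> header)
{
    ReadOnlySpan<byte> png = new byte[] { 0x89, 0x50, 0x4E, 0x47, 0x0D, 0x0A, 0x1A, 0x0A };

    if (header.Length >= 3 && header[0] == 0xFF && header[1] == 0xD8 && header[2] == 0xFF)
        return "image/jpeg";
    if (header.Length >= 8 && header[..8].SequenceEqual(png))
        return "image/png";
    if (header.Length >= 6 && (header[..6].SequenceEqual("GIF87a"u8) || header[..6].SequenceEqual("GIF89a"u8)))
        return "image/gif";
    // WebP: "RIFF", a 4-byte size, then "WEBP"
    if (header.Length >= 12 && header[..4].SequenceEqual("RIFF"u8) && header[8..12].SequenceEqual("WEBP"u8))
        return "image/webp";
    return null;
}

// Reads up to 12 bytes from the start of a stream and sniffs them.
static string? SniffStream(Stream stream)
{
    var buffer = new byte[12];
    int read = stream.ReadAtLeast(buffer, buffer.Length, throwOnEndOfStream: false);
    return SniffImageType(buffer.AsSpan(0, read));
}

Console.WriteLine(SniffImageType(new byte[] { 0xFF, 0xD8, 0xFF, 0xE0 })); // image/jpeg
```

In the endpoint, you could call SniffStream on file.OpenReadStream() and skip the file when the result is null or differs from file.ContentType. Just make sure the stream handed to PutObjectRequest starts at position 0 (rewind it or open a fresh one).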

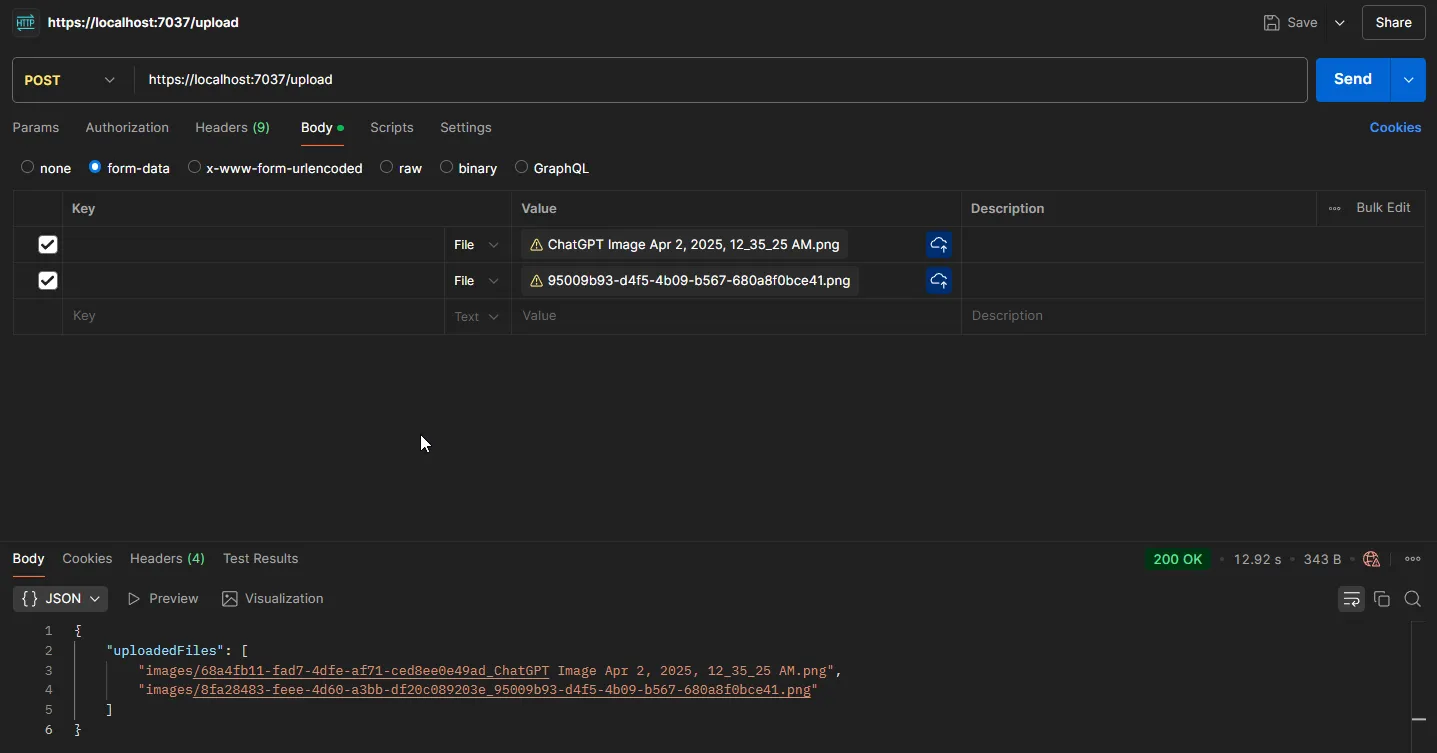

I was able to test this API locally by sending a request via Postman.

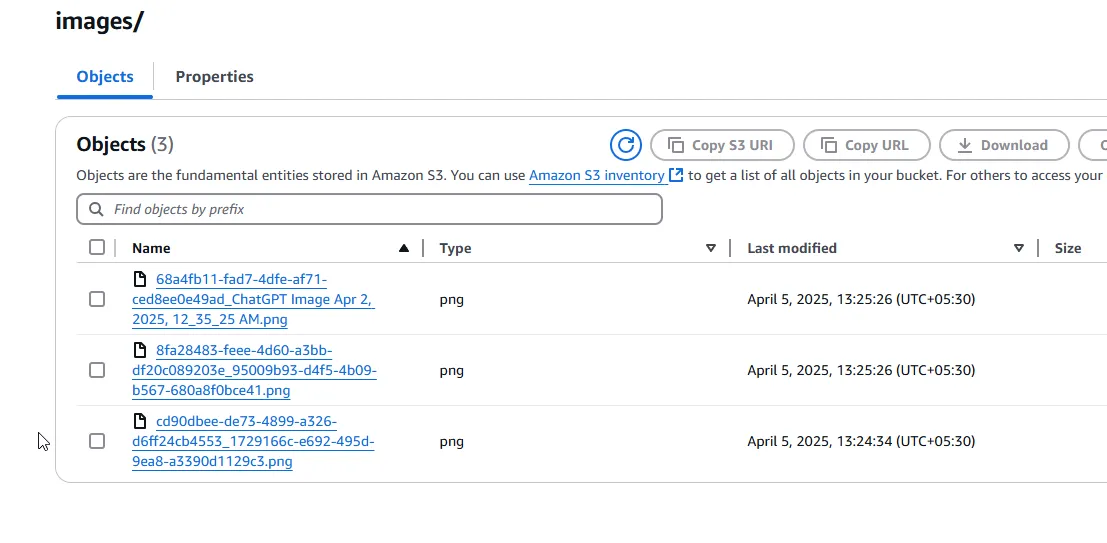

The images are uploaded under the images/ prefix (folder), as expected.

Output S3 Bucket Creation

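Let’s quickly create another S3 bucket to hold the final processed images. You could also write the thumbnails back to the input bucket if you prefer; in that case, make sure they land under a prefix the event notification does not match (such as thumbnails/), otherwise every thumbnail upload would trigger another processing run.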

I created a simple new S3 bucket named cwm-image-output.

Image Processing .NET Lambda with SixLabors ImageSharp

With image uploads now flowing into the S3 input bucket and events being pushed to SQS, the next step is to process those images using a .NET-based AWS Lambda function. For image manipulation, we’ll use the SixLabors.ImageSharp library — a high-performance, fully managed image processing library for .NET.

This Lambda function will be triggered by messages from the SQS queue. When invoked, it will extract the image metadata from the message, download the original image from the input S3 bucket, resize it to a thumbnail using ImageSharp, and then upload the processed image to the output S3 bucket under a designated folder (e.g., thumbnails/).

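In the same solution in Visual Studio, create a new .NET Lambda project. I named mine ImageProcessor. Based on the using directives below, the function needs the SixLabors.ImageSharp, AWSSDK.S3, Amazon.Lambda.SQSEvents, Amazon.Lambda.S3Events, Amazon.Lambda.Core, and Amazon.Lambda.Serialization.SystemTextJson NuGet packages (the Lambda project template typically includes the last two already).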

```csharp
using Amazon.Lambda.Core;
using Amazon.Lambda.S3Events;
using Amazon.Lambda.SQSEvents;
using Amazon.S3;
using Amazon.S3.Model;
using SixLabors.ImageSharp;
using SixLabors.ImageSharp.Processing;
using System.Text.Json;

// Assembly attribute to enable the Lambda function's JSON input to be converted into a .NET class.
[assembly: LambdaSerializer(typeof(Amazon.Lambda.Serialization.SystemTextJson.DefaultLambdaJsonSerializer))]

namespace ImageProcessor;

public class Function
{
    private readonly IAmazonS3 _s3Client;
    private const string OutputBucket = "cwm-image-output";

    // Reuse serializer options instead of allocating new ones on every call
    private static readonly JsonSerializerOptions IndentedJson = new() { WriteIndented = true };
    private static readonly JsonSerializerOptions CaseInsensitiveJson = new() { PropertyNameCaseInsensitive = true };

    public Function() => _s3Client = new AmazonS3Client();

    public async Task FunctionHandler(SQSEvent sqsEvent, ILambdaContext context)
    {
        // Pretty-print the full SQS event
        var fullEventJson = JsonSerializer.Serialize(sqsEvent, IndentedJson);
        context.Logger.LogInformation("Full SQS Event:");
        context.Logger.LogInformation(fullEventJson);

        foreach (var sqsRecord in sqsEvent.Records)
        {
            try
            {
                // Log raw SQS body
                context.Logger.LogInformation("Raw SQS Message Body:");
                context.Logger.LogInformation(sqsRecord.Body);

                var s3Event = JsonSerializer.Deserialize<S3Event>(sqsRecord.Body, CaseInsensitiveJson);

                // Skip bodies without records, such as the s3:TestEvent that S3
                // sends when the event notification is first configured
                if (s3Event?.Records == null) continue;

                // Log parsed S3 event structure
                context.Logger.LogInformation("Parsed S3 Event JSON:");
                context.Logger.LogInformation(JsonSerializer.Serialize(s3Event, IndentedJson));

                foreach (var record in s3Event.Records)
                {
                    var inputBucket = record.S3.Bucket.Name;
                    // Keys in S3 events are URL-encoded ('+' stands for a space)
                    var objectKey = Uri.UnescapeDataString(record.S3.Object.Key.Replace('+', ' '));

                    context.Logger.LogInformation($"Processing: {inputBucket}/{objectKey}");

                    // Download the original image
                    using var originalImageStream = await _s3Client.GetObjectStreamAsync(inputBucket, objectKey, null);

                    // Resize to a 300px width; height 0 keeps the aspect ratio
                    using var image = await Image.LoadAsync(originalImageStream);
                    image.Mutate(x => x.Resize(new ResizeOptions
                    {
                        Mode = ResizeMode.Max,
                        Size = new Size(300, 0)
                    }));

                    // Encode the thumbnail as JPEG into memory
                    using var outputStream = new MemoryStream();
                    await image.SaveAsJpegAsync(outputStream);
                    outputStream.Position = 0;

                    // Upload to the output bucket under thumbnails/
                    var outputKey = $"thumbnails/{Path.GetFileName(objectKey)}";

                    var putRequest = new PutObjectRequest
                    {
                        BucketName = OutputBucket,
                        Key = outputKey,
                        InputStream = outputStream,
                        ContentType = "image/jpeg"
                    };

                    await _s3Client.PutObjectAsync(putRequest);
                    context.Logger.LogInformation($"Successfully processed: {objectKey}");
                }
            }
            catch (Exception ex)
            {
                context.Logger.LogError($"Error processing record: {ex.Message}");
                throw; // Fail the invocation so SQS redelivers; repeated failures end up in the DLQ
            }
        }
    }
}
```

This AWS Lambda function is triggered by SQS messages, each containing S3 event notifications for uploaded images. It uses the AWS SDK to download the original image from S3, resizes it using ImageSharp, and uploads a thumbnail to a separate output bucket.

When the execution environment starts (a cold start), the function creates a single IAmazonS3 client in its constructor and reuses it across subsequent invocations. It logs the full SQS event and each message body for traceability.

Each SQS message is deserialized into an S3Event object using System.Text.Json with case-insensitive matching. Since each message can contain multiple S3 records, the function loops through all of them.

For each S3 record, it extracts the bucket name and object key, unescapes the key (to handle URL encoding), and logs the filename being processed.
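The decoding step is easy to get subtly wrong, so here it is in isolation, using the same expression as the Lambda. S3 URL-encodes keys in event notifications, with spaces arriving as '+'. Replacing '+' before percent-decoding means a literal '+' (sent as %2B) survives; doing it in the other order would turn it into a space. DecodeS3EventKey is just a name for this sketch, and the sample keys are hypothetical.

```csharp
using System;

// Keys in S3 event notifications are URL-encoded: spaces arrive as '+',
// other special characters as %XX. Replace '+' first, then percent-decode,
// so a literal '+' (encoded as %2B) is restored correctly.
static string DecodeS3EventKey(string rawKey) =>
    Uri.UnescapeDataString(rawKey.Replace('+', ' '));

Console.WriteLine(DecodeS3EventKey("images/my+photo%281%29.jpg")); // images/my photo(1).jpg
Console.WriteLine(DecodeS3EventKey("images/a%2Bb.png"));           // images/a+b.png
```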

The image is downloaded from S3, resized to fit a 300px width using ImageSharp, and encoded as JPEG into a memory stream. The resized image is then uploaded to the output S3 bucket under a thumbnails/ prefix, keeping the original filename. I chose 300px for demonstration purposes only, so feel free to experiment. The aspect ratio of the image is preserved. One thing to keep in mind: the thumbnail is always JPEG, so an uploaded .png ends up as JPEG data stored under a .png key (with ContentType set to image/jpeg).
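If you want the stored key to match the JPEG content, or want to know the thumbnail height in advance (for example, to record it as metadata), both are simple to compute. Here is a minimal sketch; ScaledHeight and ThumbnailKey are hypothetical helpers, the key variant swaps in Path.ChangeExtension, and ImageSharp’s own rounding may differ from this calculation by a pixel.

```csharp
using System;
using System.IO;

// Height that keeps the aspect ratio when the width is fixed
// (300px in this article). ImageSharp's own rounding may differ by a pixel.
static int ScaledHeight(int originalWidth, int originalHeight, int targetWidth) =>
    Math.Max(1, (int)Math.Round(originalHeight * (double)targetWidth / originalWidth));

// Output key whose extension matches the JPEG data actually written.
static string ThumbnailKey(string objectKey) =>
    $"thumbnails/{Path.ChangeExtension(Path.GetFileName(objectKey), ".jpg")}";

Console.WriteLine(ScaledHeight(4000, 3000, 300));         // 225
Console.WriteLine(ThumbnailKey("images/1234_photo.png")); // thumbnails/1234_photo.jpg
```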

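If any part of the process fails, the error is logged and the exception is rethrown. Lambda then treats the whole batch as failed, so every message in that batch (including ones that were processed successfully) becomes visible on the queue again after the visibility timeout and is retried. Once a message exceeds the queue’s Maximum receives setting, SQS moves it to the DLQ. If you want only the failed messages retried, look into enabling partial batch responses (ReportBatchItemFailures) on the event source mapping.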

This function efficiently handles real-time image processing in a serverless, event-driven architecture without managing any infrastructure.

I had to do a lot of debugging to get the entire setup working, especially the Lambda. The major pain point was deserialization. I found that printing the SQS and S3 events to the log makes debugging much easier. I then used the logged SQS event to debug the Lambda on my local machine with the AWS .NET Mock Lambda Test Tool.

Once done, you can publish the function by right-clicking the project in Visual Studio and choosing Publish to AWS Lambda (an option provided by the AWS Toolkit for Visual Studio). There you can name your Lambda, select its IAM role, adjust the configuration, and deploy to AWS.

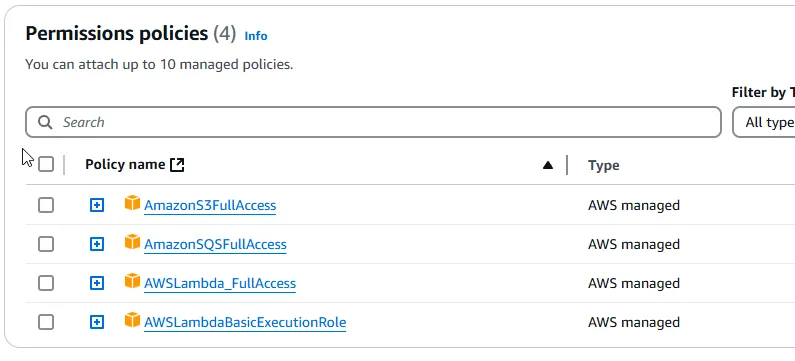

Adding the Required Permissions to AWS Lambda

Once the Lambda is deployed, open the Lambda console in the AWS Management Console, and let’s give the function its required permissions.

Make sure the execution role attached to your Lambda has the following permissions:

- S3 access to read from the input bucket and write the thumbnail to the output bucket.

- SQS access to poll the SQS queue. The AWS managed policy AWSLambdaSQSQueueExecutionRole covers sqs:ReceiveMessage, sqs:DeleteMessage, and sqs:GetQueueAttributes.

- AWSLambdaBasicExecutionRole for CloudWatch Logs access.

IMPORTANT: Since this is just a demonstration, I attached FullAccess managed policies for most of these. Do not do this in production environments; grant your Lambda only the permissions it needs, scoped to the specific buckets and queue.

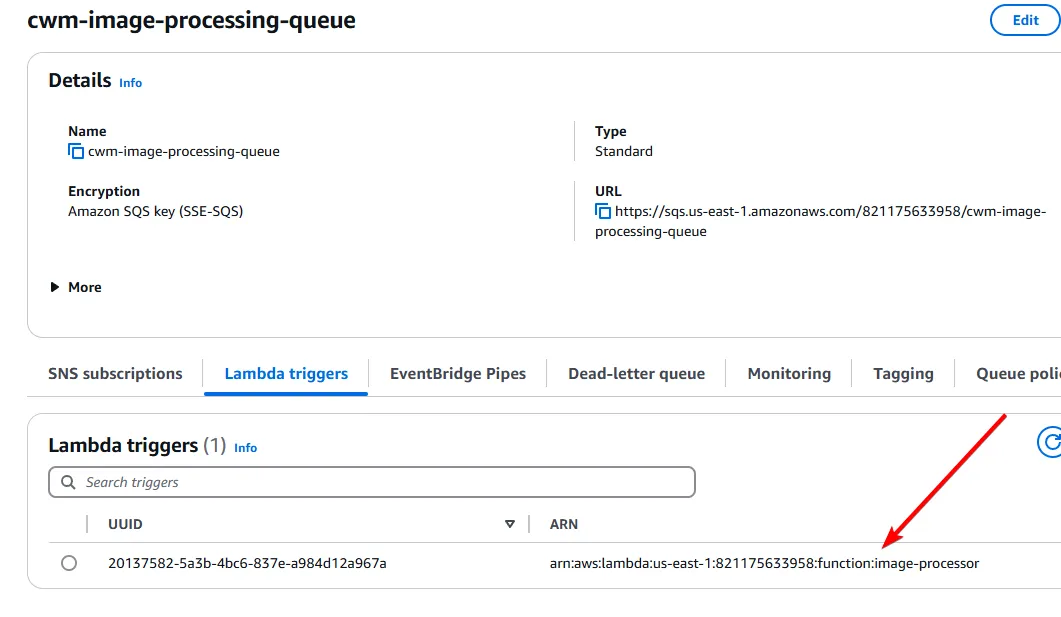

Connecting SQS to Lambda

Once the S3 bucket is configured to send upload events to an SQS queue and the AWS Lambda is deployed, the next step is to connect that queue to our shiny new Lambda function. With this integration, messages in the queue trigger the function automatically. Lambda handles the polling for you, so there is no polling code or manual invocation to write.

In the AWS Console, you can configure this by adding the Lambda function as a trigger on the SQS queue. Under the queue’s (cwm-image-processing-queue) “Lambda triggers” section, select your Lambda function and define the batch size — typically between 1 and 10 for image processing workloads. This determines how many messages are sent to the function in a single batch invocation.

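The Lambda service automatically polls the queue, batches messages, and invokes the function when new messages arrive. It scales the number of concurrent invocations with the number of messages in the queue and deletes messages once the function returns successfully. When an invocation fails, its messages become visible on the queue again after the visibility timeout, and SQS moves any message that exceeds the queue’s Maximum receives setting to the DLQ. AWS recommends setting the queue’s visibility timeout to at least six times the function’s timeout, so messages are not redelivered while they are still being processed.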

To complete the setup, make sure the Lambda execution role has permission to read from the SQS queue (sqs:ReceiveMessage, sqs:DeleteMessage, and sqs:GetQueueAttributes). You should have already added these in the previous section. Once connected, the pipeline is fully event-driven: an image upload triggers an SQS message, which invokes the Lambda function to process the image.

Testing & Monitoring

Since we have already built an API that is capable of uploading images to the Input S3 Bucket, let’s use it for our testing. Spin up the API and post an image to the /upload endpoint.

I uploaded an image of about 2 MB.

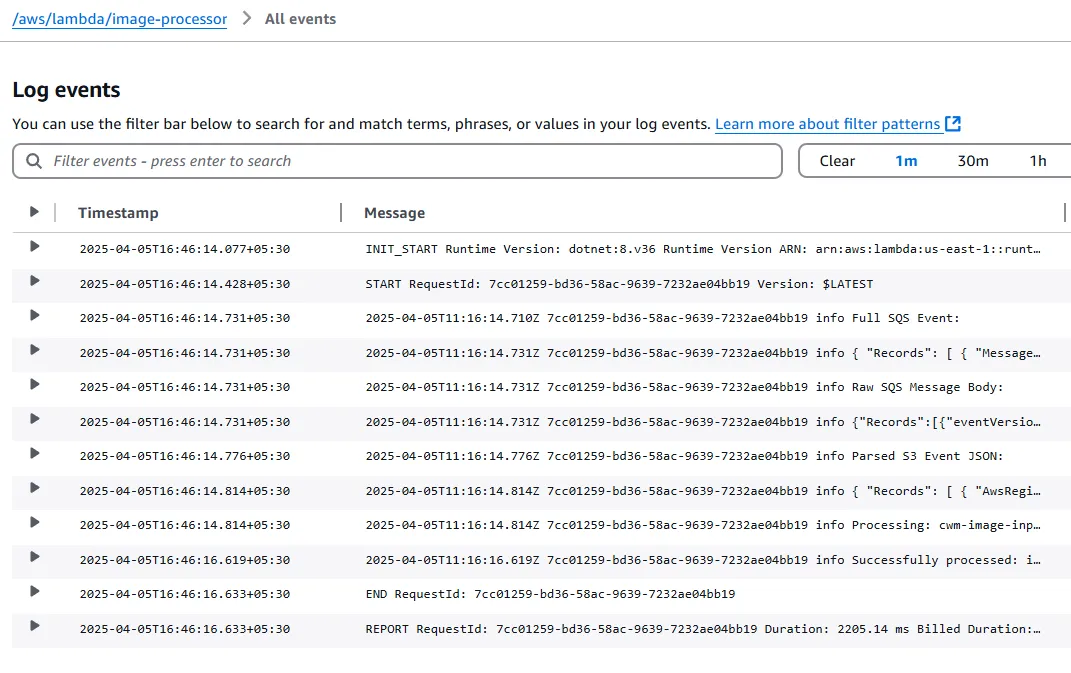

As you can see below, here are the CloudWatch logs of our image processor Lambda.

The entire SQS event is logged, along with the parsed S3 event, which helps a lot while debugging. In my experience, logging the raw event payloads is one of the most effective ways to debug event-driven cloud applications.

Also, you can see that the processing has successfully completed in less than a second.

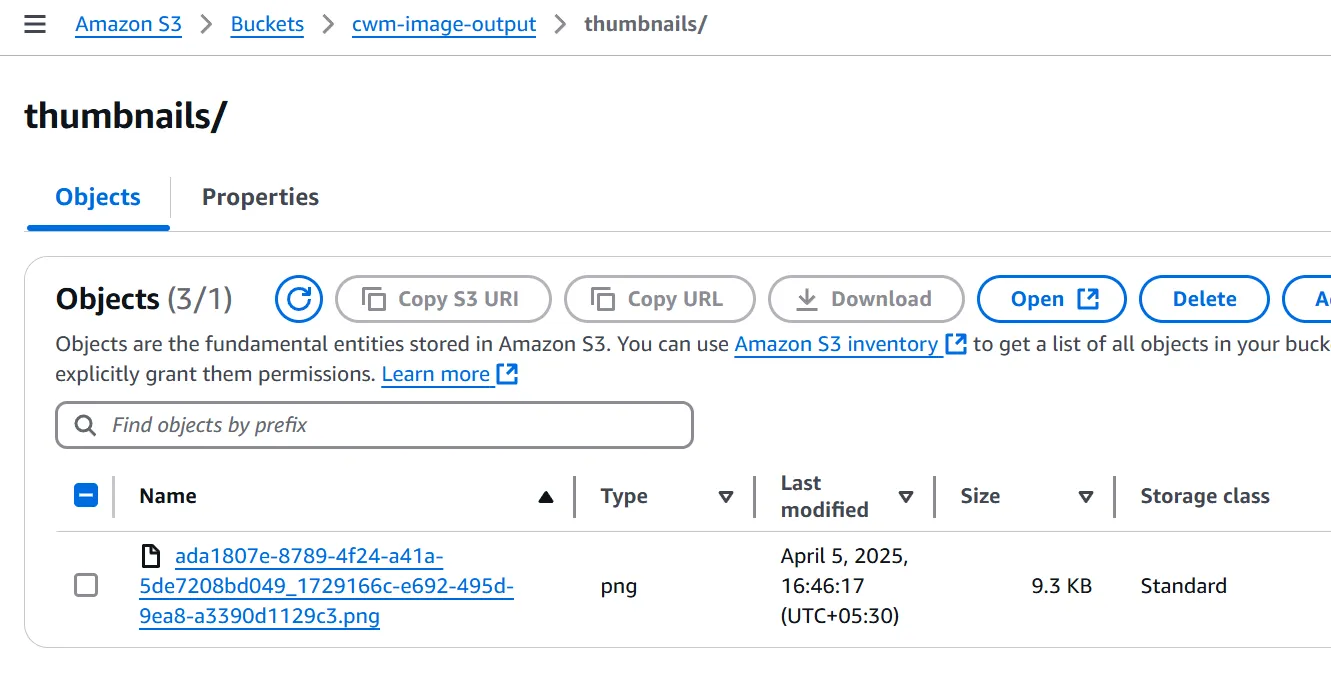

Let’s check the output S3 bucket now.

And there you go. The generated thumbnail is just under 10 KB, down from the roughly 2 MB original, which is exactly what you want from a thumbnail.

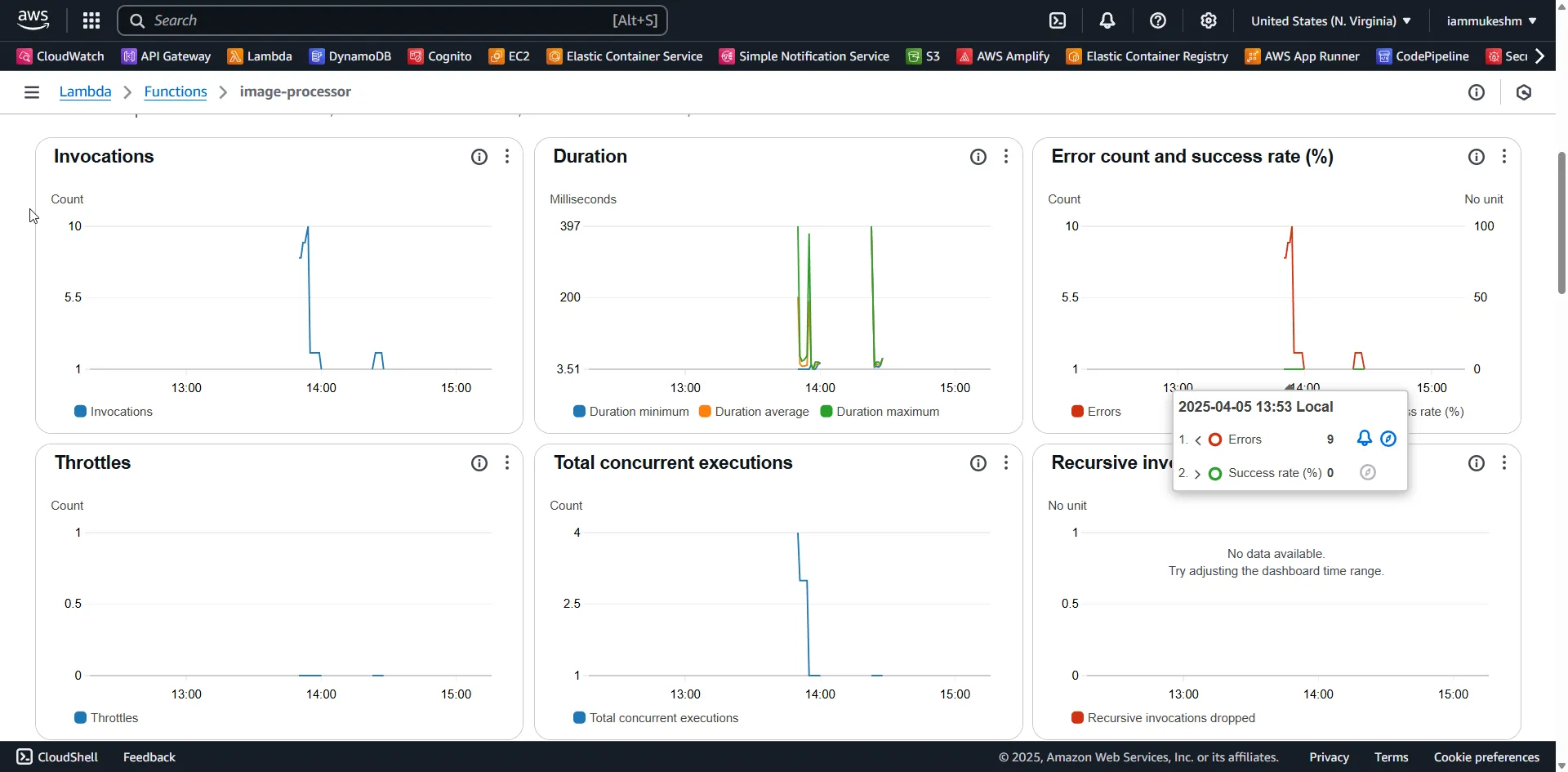

Under the Monitoring tab of the Lambda, you get a better picture of how your newly built serverless beast performs. It shows the number of invocations (each triggered via the SQS queue), the average duration per invocation, the error rate (my first few runs failed because of incorrect deserialization code), and much more.

Optimizations and Future Improvements

While the current implementation is functional and scalable, there are several areas where it can be optimized further for performance, cost-efficiency, and production readiness.

Offload Validation to a Pre-Queue Lambda

Instead of handling file validation at the API level, introduce a lightweight Lambda triggered directly by the S3 upload event. This function can validate MIME types, file sizes, or file naming conventions before forwarding valid events to the SQS queue. It ensures that only clean, valid inputs enter the processing pipeline and improves security by enforcing consistent standards at the entry point.

Metadata Indexing with DynamoDB

After generating thumbnails, you can store structured metadata—such as original object key, processed timestamp, dimensions, and S3 paths—in DynamoDB. This enables quick lookups, audit trails, and the ability to associate processed images with users or other entities in your system.

Multi-Region Readiness

To serve a global user base more effectively, replicate your input S3 bucket to other regions using S3 Cross-Region Replication, and combine it with region-specific Lambda functions. This lowers latency for users and adds resilience in case of a regional failure.

Duplicate File Detection with Hashing

Implement SHA-256 or MD5 hashing of uploaded images to detect and skip duplicate files, even if the filenames differ. Store these hashes as object metadata or in DynamoDB. This saves processing time, reduces storage duplication, and can be critical in content moderation workflows.
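Here is a minimal sketch of the hashing side, using SHA-256 from System.Security.Cryptography and assuming .NET 7 or later for SHA256.HashData(Stream). The in-memory HashSet is a stand-in for wherever you persist hashes (such as DynamoDB or object metadata), and Sha256Hex and IsDuplicate are hypothetical helper names.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Security.Cryptography;
using System.Text;

// Lowercase hex SHA-256 of a stream's contents.
static string Sha256Hex(Stream content) =>
    Convert.ToHexString(SHA256.HashData(content)).ToLowerInvariant();

// In-memory stand-in for a persistent hash store.
var seenHashes = new HashSet<string>();

bool IsDuplicate(byte[] fileBytes)
{
    using var stream = new MemoryStream(fileBytes);
    // HashSet.Add returns false when the hash is already present.
    return !seenHashes.Add(Sha256Hex(stream));
}

var upload = Encoding.UTF8.GetBytes("same image bytes");
Console.WriteLine(IsDuplicate(upload));                 // False (first time seen)
Console.WriteLine(IsDuplicate((byte[])upload.Clone())); // True (same content)
```

With several Lambdas running concurrently, the check and the insert would need to be a single atomic operation, for example a DynamoDB conditional write, rather than an in-process set.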

Retry Logic for S3 Failures

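Transient network issues or throttling can cause S3 operations to fail. The AWS SDK for .NET already retries many of these errors automatically, and you can tune that behavior through the client configuration (for example, the MaxErrorRetry and RetryMode settings). For failures outside the SDK’s retry policy, wrap S3 read/write calls in your own retry logic with exponential backoff. This simple addition significantly increases reliability without major architectural changes.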
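Here is a minimal sketch of such a retry helper with exponential backoff and “full jitter” (a random delay between zero and the exponential bound). RetryAsync, its default delays, and the isTransient predicate are assumptions for illustration, not part of the AWS SDK; in practice you decide which exceptions, such as throttling responses, count as transient.

```csharp
using System;
using System.Threading.Tasks;

// Retries an async operation with exponential backoff and full jitter:
// after failed attempt n, wait a random delay in [0, baseDelay * 2^(n-1)), capped at maxDelay.
// Exceptions for which isTransient returns false, or any failure on the last attempt, propagate.
static async Task<T> RetryAsync<T>(
    Func<Task<T>> operation,
    Func<Exception, bool> isTransient,
    int maxAttempts = 4,
    TimeSpan? baseDelay = null,
    TimeSpan? maxDelay = null)
{
    var initial = baseDelay ?? TimeSpan.FromMilliseconds(200);
    var cap = maxDelay ?? TimeSpan.FromSeconds(5);

    for (int attempt = 1; ; attempt++)
    {
        try
        {
            return await operation();
        }
        catch (Exception ex) when (attempt < maxAttempts && isTransient(ex))
        {
            var bound = Math.Min(cap.TotalMilliseconds, initial.TotalMilliseconds * Math.Pow(2, attempt - 1));
            await Task.Delay(TimeSpan.FromMilliseconds(Random.Shared.NextDouble() * bound));
        }
    }
}

// Simulated operation that fails twice before succeeding.
int calls = 0;
var result = await RetryAsync(async () =>
{
    calls++;
    await Task.Yield();
    if (calls < 3) throw new TimeoutException("simulated transient failure");
    return "uploaded";
}, ex => ex is TimeoutException, baseDelay: TimeSpan.FromMilliseconds(10));

Console.WriteLine($"{result} after {calls} attempts"); // uploaded after 3 attempts
```

In this pipeline, SQS redelivery already acts as a coarse retry for the whole message, so in-function retries mainly smooth over short blips. If you wrap PutObjectAsync this way, build the PutObjectRequest and its stream inside the retried operation so each attempt starts from a fresh stream.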

EventBridge for Post-Processing Workflows

Once an image has been successfully processed, emit a custom event to Amazon EventBridge. This allows other microservices—such as notifications, analytics, or tagging engines—to react to the event without tightly coupling them to your processing Lambda.

Smarter Compression and Format Conversion

Optimize output size further by stripping metadata, adjusting JPEG quality levels, or converting thumbnails to WebP. These optimizations reduce both storage costs and image delivery latency, especially in content-heavy or mobile-first applications.

Move to ECS/Fargate for Heavy Processing

If your processing logic grows in complexity or resource demand, consider shifting from Lambda to a containerized solution using ECS or Fargate. This provides more control over runtime, memory, and CPU allocation while keeping the event-driven nature of the workflow intact.

CloudFront Integration for Image Delivery

Placing CloudFront in front of the output S3 bucket enables edge caching, reduces latency, and lowers S3 read costs. You can also apply signed URLs for access control and integrate with your CDN strategy seamlessly.

CloudWatch Monitoring and Alerts

Set up detailed CloudWatch dashboards to track metrics like processing duration, error counts, DLQ depth, and Lambda invocation rates. Combine these with alarms and SNS notifications to proactively detect issues and respond before they impact users.

Multi-Tenant or User-Aware File Structure

If you’re building a system for multiple users or organizations, structure your S3 keys to include a tenant or user identifier (e.g., uploads/{userId}/image.jpg). This makes it easier to manage access control, billing, and cleanup.
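Here is a minimal sketch of such a key builder that also sanitizes the client-supplied file name (the upload endpoint currently embeds file.FileName as-is). BuildTenantKey is a hypothetical helper; it assumes userId comes from your authenticated user context and is already safe to use in a key, and the allowed character set is just one conservative choice.

```csharp
using System;
using System.Text;

// Builds uploads/{userId}/{guid}_{safeFileName}.
// Assumes userId comes from the authenticated user context, not from the request.
static string BuildTenantKey(string userId, string clientFileName, Guid id)
{
    // Keep only the last path segment, treating both '/' and '\' as separators.
    var name = clientFileName.Replace('\\', '/');
    name = name[(name.LastIndexOf('/') + 1)..];

    // Replace anything outside a conservative character set with '_'.
    var safe = new StringBuilder(name.Length);
    foreach (var c in name)
        safe.Append((char.IsAsciiLetterOrDigit(c) || c is '.' or '-' or '_') ? c : '_');

    if (safe.Length == 0) safe.Append("file"); // e.g. a name that ended with a separator

    return $"uploads/{userId}/{id}_{safe}";
}

var id = Guid.Parse("3f2504e0-4f89-11d3-9a0c-0305e82c3301");
Console.WriteLine(BuildTenantKey("user-42", @"C:\Users\me\My Photo.JPG", id));
// uploads/user-42/3f2504e0-4f89-11d3-9a0c-0305e82c3301_My_Photo.JPG
```

If you switch to an uploads/ prefix like this, remember to update the prefix filter on the S3 event notification to match.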

Presigned URL-Based Uploads

Shift from direct API-based uploads to presigned URL uploads. This offloads file transfer directly to S3, reduces backend load, and gives you granular, time-bound control over who can upload and when.

Virus Scanning on Upload

For public-facing upload workflows, consider integrating virus scanning before triggering downstream processing. You can implement this in a Lambda or Fargate task using tools like ClamAV to ensure malicious files don’t enter your pipeline.

Summary

In this article, we built a fully serverless, scalable image processing pipeline on AWS using .NET. By leveraging services like Amazon S3, SQS, and Lambda, we created an event-driven architecture where image uploads automatically trigger thumbnail generation with no servers to manage.

We used a minimal .NET 9 Web API to upload images directly to S3, which then pushes events to an SQS queue. A Lambda function, written in .NET and powered by ImageSharp, listens to that queue, processes the images in real time, and uploads resized thumbnails to an output bucket.

Along the way, we covered essential concepts like event source mapping, error handling, DLQ integration, and efficient in-memory processing using streams. Logging the full SQS and S3 events made debugging and development easier, and we validated the results through S3 output and Lambda monitoring metrics.

Finally, we explored a wide range of improvements, from validation, deduplication, and metadata indexing to compression, format conversion, and operational best practices, giving you a clear path to production-grade image handling in the cloud.

If you found this useful, make sure to share it with your network—your fellow developers, architects, or anyone building media-heavy apps will thank you. This kind of architecture is lightweight, scalable, and production-ready—and it’s all powered by .NET on AWS.